Ever found yourself neck-deep in server configurations when you should be writing code? Or maybe you've been jolted awake at 3 AM by alerts about a misbehaving virtual machine? Welcome to the club that nobody wanted to join: the "infrastructure management takes over my life" club. It's that part-time job nobody signs up for but somehow comes bundled with your developer or IT role.

But what if there was a way to shift your focus almost entirely to writing code while someone else handles the server provisioning, scaling, and maintenance? That's the promise of serverless computing, an approach that, despite its somewhat misleading name, is changing how we build and deploy applications to the cloud.

What is serverless computing, really?

Serverless computing is a cloud execution model where you focus on writing code while the cloud provider handles all the infrastructure concerns. Despite the name, servers still exist. You just don't see or manage them, and you don't pay for them when they're idle.

The key principles that make serverless different include:

No server management: The cloud provider handles provisioning, scaling, patching, and maintenance.

Event-driven execution: Your code runs only in response to specific triggers, not continuously.

Pay-per-execution pricing: You're charged only for the compute time you actually use, often billed in milliseconds.

Function-based development: Applications are broken down into small, single-purpose functions.

Automatic scaling: The platform scales from zero to thousands of concurrent executions with little or no configuration, within the provider's concurrency limits.

The most common implementation is Function as a Service (FaaS) platforms like AWS Lambda, Azure Functions, and Google Cloud Functions. These services let you deploy individual functions that run in response to events without worrying about the underlying infrastructure.
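To make the function model concrete, here is a minimal sketch of a single function in Python. It assumes the `handler(event, context)` signature used by AWS Lambda's Python runtime; other providers use different signatures, and the `name` field in the event is a hypothetical example.

```python
import json


def handler(event, context):
    """Entry point the platform calls once per triggering event."""
    # `name` is a hypothetical event field chosen for this example.
    name = event.get("name", "world")
    return {"statusCode": 200, "body": json.dumps({"message": f"Hello, {name}!"})}


if __name__ == "__main__":
    # Local invocation; in the cloud, the platform supplies event and context.
    print(handler({"name": "serverless"}, None))
```

Everything outside this function (the machine, the runtime process, the scaling) is the provider's responsibility.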

Why developers are embracing serverless

Focus on business logic, not servers

The biggest win with serverless is the ability to concentrate on writing code that delivers value rather than maintaining infrastructure. When you're not spending time configuring load balancers, setting up autoscaling groups, or applying security patches, you can focus on what really matters: building features your users need.

One developer I know put it perfectly: "Serverless didn't just save us money, it saved our sanity. Our small team went from spending 40% of our time on infrastructure to less than 5%."

Pay only for what you use

Traditional server deployments require you to provision for peak capacity, meaning you're paying for idle resources most of the time. With serverless, you pay only when your code executes. No usage, no charges.

For applications with variable or unpredictable workloads, this can lead to significant cost savings. One startup I worked with reduced their infrastructure costs by 78% after moving their batch processing jobs from dedicated EC2 instances to serverless functions.

Scale without breaking a sweat

Serverless platforms handle scaling automatically. Whether your application receives one request per hour or a thousand requests per second, the platform provisions the necessary resources on demand.

This automatic scaling requires no capacity planning or manual intervention, making it ideal for applications with unpredictable or spiky traffic patterns. Your code can handle a sudden traffic surge without you having to scramble to add more capacity.

Ship faster, iterate quicker

With infrastructure concerns handled by the cloud provider, development teams can move more quickly from idea to production. The reduced operational overhead means faster iteration cycles and shorter release timelines.

One team I consulted with cut their release cycle from weeks to days after adopting serverless. They could focus on feature development instead of infrastructure planning meetings.

Real-world serverless use cases

API backends that scale on demand

Serverless is particularly well-suited for building API backends. Each API endpoint can be implemented as a separate function that processes requests and returns responses, scaling independently based on demand.

Real-world example: A retail company built a serverless API to handle product catalog queries. During normal operations, the API handles modest traffic, but during flash sales, traffic increases by 20x. With serverless, the API scales automatically to handle these spikes without any manual intervention or over-provisioning. Better yet, they only pay for the actual requests processed, not for idle capacity during slower periods.
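An endpoint like the catalog query above might look roughly like this. It is a sketch, not the retailer's actual code: it assumes an AWS API Gateway proxy-style event (with `pathParameters`), and the `CATALOG` dictionary is a hypothetical stand-in for a real database.

```python
import json

# Hypothetical in-memory catalog; a real function would query a database.
CATALOG = {
    "sku-1": {"name": "Desk lamp", "price": 24.99},
    "sku-2": {"name": "Office chair", "price": 149.00},
}


def get_product(event, context):
    """Handle GET /products/{id} as one independently scaling endpoint."""
    product_id = (event.get("pathParameters") or {}).get("id")
    product = CATALOG.get(product_id)
    if product is None:
        return {"statusCode": 404, "body": json.dumps({"error": "product not found"})}
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(product),
    }
```

Because each endpoint is its own function, a flash sale that hammers product lookups scales this function alone, without touching the rest of the API.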

Real-time data processing without the infrastructure

Serverless functions can be triggered by events such as new data arriving in a storage bucket or message queue. This makes them perfect for real-time data processing workflows like image optimization, log analysis, or IoT data handling.

Real-world example: A media company uses serverless functions to process uploaded images. When a user uploads an image, a function is triggered that generates multiple resized versions for different devices, optimizes them for web delivery, and stores them in the appropriate locations. The entire process happens automatically and scales to handle any number of concurrent uploads without managing a single server.
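The upload-triggered flow can be sketched as follows. This assumes an S3-style event (`Records[].s3.bucket.name` and `Records[].s3.object.key`); the target widths, the `resized/` destination layout, and the `read_size` callback are hypothetical, and the actual pixel work is left to an imaging library.

```python
from urllib.parse import unquote_plus

# Hypothetical target widths for different device classes.
TARGET_WIDTHS = {"thumbnail": 150, "mobile": 480, "desktop": 1280}


def scaled_size(width, height, target_width):
    """Return (width, height) scaled down to target_width, keeping aspect ratio."""
    if width <= target_width:
        return width, height  # never upscale
    return target_width, max(1, round(height * target_width / width))


def plan_variants(event, read_size):
    """Build one resize job per uploaded object and target size.

    `read_size(bucket, key)` is a hypothetical callback returning the
    original (width, height) of the uploaded image.
    """
    jobs = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        width, height = read_size(bucket, key)
        for label, target in TARGET_WIDTHS.items():
            jobs.append({
                "source": f"{bucket}/{key}",
                "destination": f"{bucket}/resized/{label}/{key}",
                "size": scaled_size(width, height, target),
            })
    return jobs
```

Each upload produces its own event, so a burst of uploads simply becomes a burst of concurrent function invocations.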

Scheduled tasks that don't need dedicated servers

Instead of maintaining dedicated servers for periodic tasks, serverless functions can be scheduled to run at specific intervals. This is perfect for maintenance jobs, data aggregation, or any task that needs to execute on a regular schedule.

Real-world example: A financial services company uses serverless functions for end-of-day processing. Every evening, functions run to aggregate transaction data, generate reports, and prepare the system for the next business day. These functions only run when needed, eliminating the cost of maintaining always-on servers for this periodic workload.
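A scheduled job of this kind could be structured like the sketch below. The `date` field on the event and the `load_transactions` loader are assumptions for illustration; the loader is passed as a parameter only so the example runs without a database, and real scheduler events differ by provider.

```python
from collections import defaultdict


def aggregate_by_account(transactions):
    """Sum transaction amounts per account for the daily report."""
    totals = defaultdict(float)
    for tx in transactions:
        totals[tx["account"]] += tx["amount"]
    return dict(totals)


def end_of_day_handler(event, context, load_transactions):
    """Run once per schedule tick, then exit; nothing stays running in between."""
    # Assumption: the schedule event carries a `date` field.
    report_date = event["date"]
    totals = aggregate_by_account(load_transactions(report_date))
    return {"date": report_date, "accounts": len(totals), "totals": totals}
```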

Challenges you'll actually face with serverless

While serverless offers many benefits, it's not without challenges:

Cold starts and performance considerations

When a function hasn't been used recently, the platform may need to initialize a new container to run it, causing a delay known as a "cold start." This latency can be problematic for user-facing applications where response time is critical.

Cold start delays can be more pronounced with serverless compared to containers, particularly for functions written in languages with longer initialization times like Java or C#. For latency-sensitive applications, you'll need strategies to mitigate cold starts, such as keeping functions "warm" or using provisioned concurrency options.
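Provisioned concurrency is a platform setting rather than code, but the cold/warm distinction and the "keep warm" idea can be illustrated in a few lines. This sketch assumes the platform reuses the same process for later invocations while the container stays alive; the `warmup` event flag (sent by a scheduled ping) and the simulated 50 ms setup cost are hypothetical.

```python
import time

_client = None        # survives between invocations while the container is warm
STATS = {"inits": 0}  # counts how often the expensive setup actually ran


def _create_client():
    """Stand-in for expensive setup such as opening a database connection."""
    STATS["inits"] += 1
    time.sleep(0.05)  # simulated initialization cost
    return {"connected": True}


def handler(event, context):
    global _client
    cold = _client is None
    if cold:
        _client = _create_client()  # paid only on a cold start
    if event.get("warmup"):
        # Hypothetical flag from a scheduled ping that keeps the container alive.
        return {"warmed": True, "cold_start": cold}
    return {"cold_start": cold, "result": "real work goes here"}
```

The first call pays the setup cost; subsequent calls in the same container skip it, which is why a periodic warm-up ping can hide cold starts from real users.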

Debugging distributed architectures

Distributed serverless architectures can be more difficult to debug than traditional monolithic applications. Tracing requests across multiple functions and services requires specialized tools and approaches.

Since serverless apps are made up of multiple functions and may include other event sources like queues or storage triggers, you'll need comprehensive logging, monitoring, and tracing from day one to help troubleshoot issues when they arise.
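One common way to make requests traceable across functions is to log structured JSON with a shared correlation ID. The sketch below uses only the standard library; the `correlation_id` and `order_id` field names are assumptions, not a provider convention.

```python
import json
import logging
import uuid

logger = logging.getLogger("orders")


def log_event(message, correlation_id, **fields):
    """Emit one JSON log line so log tools can filter by correlation_id."""
    line = json.dumps({"message": message, "correlation_id": correlation_id, **fields})
    logger.info(line)
    return line


def handler(event, context):
    # Reuse the caller's ID if present so one request can be followed end to end.
    correlation_id = event.get("correlation_id") or str(uuid.uuid4())
    log_event("order received", correlation_id, order_id=event.get("order_id"))
    # Pass the same ID along in whatever message this function emits next.
    return {"correlation_id": correlation_id, "order_id": event.get("order_id")}
```

Searching the logs for a single ID then reconstructs the path of one request through every function and queue it touched.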

Vendor lock-in concerns

Each cloud provider's serverless offering has its own APIs, features, and limitations. Building extensively on one provider's serverless platform can make it challenging to migrate to another provider later.

While the core function code may be portable, your application will likely use provider-specific services or APIs that aren't easily replicated elsewhere. Consider this factor when architecting your serverless applications.
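One way to limit lock-in is to keep business logic free of provider details and wrap it in thin adapters. In this sketch, `calculate_discount` and both adapters are hypothetical; the first adapter assumes an AWS Lambda proxy-style event with a JSON `body`, and the second stands in for any platform that passes a plain dictionary.

```python
import json


def calculate_discount(total, is_member):
    """Provider-independent business logic: no cloud imports, easy to test."""
    rate = 0.10 if is_member else 0.0
    return round(total * (1 - rate), 2)


def lambda_style_adapter(event, context):
    """Thin adapter for a Lambda proxy-style event with a JSON body."""
    payload = json.loads(event["body"])
    price = calculate_discount(payload["total"], payload["is_member"])
    return {"statusCode": 200, "body": json.dumps({"price": price})}


def dict_adapter(request):
    """Hypothetical adapter for a platform that passes a plain dict."""
    return {"price": calculate_discount(request["total"], request["is_member"])}
```

Migrating then means rewriting the adapters, not the logic, although any provider-specific services the logic calls would still need replacing.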

State management challenges

Serverless functions are stateless by design. The containers on which they run can be shut down at any time, meaning you can't rely on in-memory state between executions.

Any persistent state needs to be stored outside the function in databases, caches, or other storage services. This forces a different approach to architecture that can be challenging for teams accustomed to stateful applications.
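In code, this means a function reads and writes state through an external store on every invocation. The sketch below uses a hypothetical `InMemoryStore` class purely as a local stand-in for a database or cache so the example runs; in production the store would live outside the function.

```python
class InMemoryStore:
    """Local stand-in for an external store such as a database or cache."""

    def __init__(self):
        self._data = {}

    def get(self, key, default=0):
        return self._data.get(key, default)

    def put(self, key, value):
        self._data[key] = value


def count_visit(event, store):
    """Stateless handler: all state lives in `store`, none in the function."""
    user = event["user"]
    # Read-modify-write is not atomic; a real store should offer an
    # atomic increment to stay correct under concurrent invocations.
    visits = store.get(user) + 1
    store.put(user, visits)
    return {"user": user, "visits": visits}
```

Because the counter never lives in the function's memory, it survives container shutdowns and stays consistent across instances, subject to the atomicity caveat noted in the comment.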

Getting started with serverless computing

If you're ready to dip your toes into serverless waters, here are some practical steps:

1. Choose a cloud provider: AWS, Azure, and Google Cloud all offer mature serverless platforms with different strengths and pricing models. Pick one that aligns with your existing cloud strategy or skills.

2. Start small: Begin with a simple, non-critical function to understand the development workflow and deployment process. Webhook handlers, simple APIs, or scheduled jobs make good candidates for your first serverless project.

3. Think event-driven: Design your functions around specific triggers and events rather than continuous processes. Break down your application into small, focused functions that each do one thing well.

4. Plan for observability: Implement comprehensive logging and monitoring from the beginning to help with debugging and performance optimization. This is especially crucial in distributed serverless architectures.

5. Consider cold starts: For latency-sensitive applications, implement strategies to mitigate cold start issues, such as keeping functions warm or using provisioned concurrency options on platforms that support it.

FAQ: What you really want to know about serverless

How does serverless differ from traditional cloud computing models?

Traditional cloud computing typically involves provisioning virtual machines or containers that run continuously, regardless of whether they're processing requests. You're responsible for scaling these resources up or down based on demand.

In contrast, serverless computing handles all of this automatically. Your code only runs when needed, and the platform manages all aspects of provisioning, scaling, and maintenance. This shifts the operational burden from your team to the cloud provider, allowing you to focus more on application development.

What types of applications are best suited for serverless?

Serverless works best for:

- Event-driven workloads

- Microservices with clear boundaries

- Applications with variable or unpredictable traffic

- Tasks that can complete within the timeout limits of the platform (limits vary by provider; AWS Lambda, for example, caps a single execution at 15 minutes)

In short, applications built from short-lived functions are a good fit. Serverless may not be ideal for workloads that require long-running processes, specialized hardware, or guaranteed resources, or that have dependencies the platform cannot accommodate.

How does serverless pricing work, and is it always cheaper?

Serverless pricing is typically based on three factors:

- Number of requests/invocations

- Execution time (usually billed in increments of 100ms or less)

- Memory allocation to functions

For workloads with intermittent traffic or idle periods, serverless can be significantly more cost-effective than paying for continuously running servers. However, for high-volume, consistent workloads, the per-invocation costs can add up, potentially making traditional deployment models more economical.

The key is to analyze your specific usage patterns and do the math. Many organizations find that certain parts of their application are ideal for serverless while others make more sense on traditional infrastructure.
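"Doing the math" can start with a rough model like the one below. The rates and the always-on server cost are placeholder numbers for illustration only, not any provider's actual prices, and the model ignores free tiers, data transfer, and other services.

```python
def monthly_serverless_cost(requests, avg_ms, memory_gb,
                            price_per_million_requests, price_per_gb_second):
    """Estimate monthly cost from request count, execution time, and memory."""
    request_cost = requests / 1_000_000 * price_per_million_requests
    gb_seconds = requests * (avg_ms / 1000) * memory_gb
    return request_cost + gb_seconds * price_per_gb_second


# Placeholder rates for illustration only; check your provider's price list.
RATES = {"price_per_million_requests": 0.20, "price_per_gb_second": 0.00002}
SERVER_COST = 60.0  # hypothetical monthly cost of one always-on server

for requests in (1_000_000, 100_000_000):
    cost = monthly_serverless_cost(requests, avg_ms=200, memory_gb=0.5, **RATES)
    cheaper = "serverless" if cost < SERVER_COST else "server"
    print(f"{requests:>11,} requests: ${cost:,.2f} vs ${SERVER_COST:,.2f} -> {cheaper}")
```

With these placeholder numbers, the low-volume case favors serverless and the high-volume case favors the always-on server, which is the crossover the paragraph above describes.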

The future is serverless (but not 100% serverless)

Serverless computing represents a significant evolution in how we build and deploy applications. By abstracting away infrastructure management, it allows developers to focus on writing code that delivers business value rather than wrestling with servers.

The changes serverless brings to application development will continue to disrupt traditional architectures as cloud providers innovate and enhance their FaaS and related services. For workloads that are a good fit, serverless can dramatically simplify development, reduce management overhead, and help organizations scale more quickly while optimizing costs.

The future of application development is likely a hybrid approach that leverages serverless for appropriate workloads while using containers or virtual machines for others. The key is understanding the strengths and limitations of each model and choosing the right tool for each job.

Serverless isn't a silver bullet for all use cases and won't completely replace other models, but it clearly has its place. And that place is growing. For teams looking to optimize their infrastructure, reduce operational overhead, and accelerate development cycles, serverless computing offers a compelling path forward.

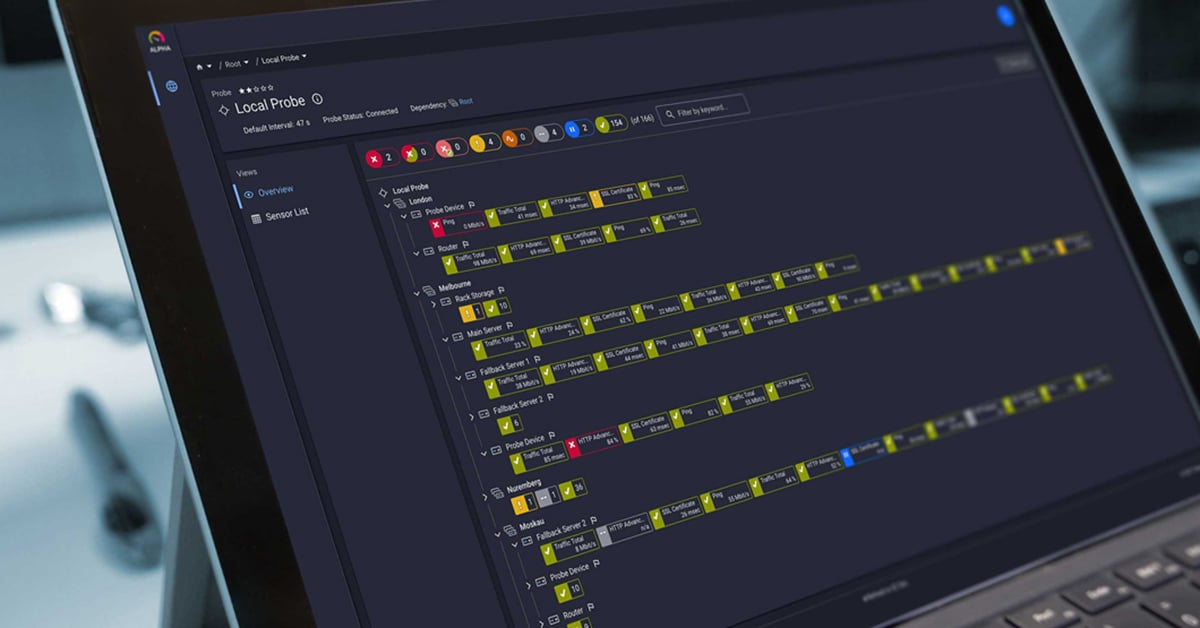

For organizations embracing serverless, maintaining visibility into function performance and reliability is crucial.

PRTG Network Monitor provides comprehensive monitoring capabilities that extend to your serverless functions, giving you the insights you need to ensure everything runs smoothly. Try PRTG Network Monitor free for 30 days and discover how it can help you manage your entire IT environment, including your serverless applications.
