To get a perspective on any current hype—which right now includes Artificial Intelligence and the Internet of Things—it helps to take a look at the hyped topics of the past and ask ourselves: what was promised? What actually came out of it? And was it actually useful? Cloud computing is a good place to start, since it has been through the hype cycle and is now approaching the slope of enlightenment (or at least it is according to Gartner). Let's take a look at the current reality of the cloud, how it's changed the game, where it's going, and how we should be thinking about monitoring it.

Booming Business

After going through the hype cycle, it has become obvious that the cloud is very much a real thing, and is generating massive revenue. According to a recent Gartner article on the cloud shift, IT spending on cloud system infrastructure services will grow by over 23 billion US dollars by 2021. And one thing is clear: it doesn't matter who got in first, even though that seems to matter to many service providers in every hyped technology (I'm looking at you, 5G!). Instead, the big players like Amazon and Microsoft all seem to have a decent piece of the pie (a lesson for the future, 5G service providers).

And the technology has changed the way the business world thinks, too. It's no longer a question of "Should we move to the cloud?", but rather "Which cloud?". This doesn't only mean which cloud service provider to choose, but also: public cloud or private cloud? We're now seeing a migration of some services (estimated at 5%, in fact) from the public cloud back to the private cloud, probably because those services should not have been in the public cloud in the first place.

The Cloud Is Not Your Destination

Another thing that has become clear is that the cloud is not a destination, as it might have seemed at the height of the hype, but rather a means of operation. In the midst of the hype, it seemed like you either had to stay on-premises, or you had to adopt the cloud and move everything there. However, it's now clear that this was something of a false dichotomy: it's certainly not a case of "one or the other".

The question that many companies and organizations are now asking is not, "How do I get into the cloud?" but rather, "How can the cloud support my operations?" This is an important shift that changes how solutions are designed and built. It means taking a close look at all the services required, and then deciding which of these can be run in a public cloud, which can be run in a private cloud, and which should be run on traditional architecture on premises. Criteria such as the scalability required from services, middleware for movable parts, and security all play a role in deciding which elements are placed in the cloud.

The Hybrid Environment

When certain elements and services of the infrastructure land in the cloud, and others remain on-premises, you end up with a hybrid infrastructure. And this is exactly the reality for the majority of modern-day IT infrastructures. Our very own Greg Campion discussed this in a great video about the future of IT infrastructure.

Basically: on-premises is not going away. Many enterprises that require data locally for production tasks want to have it stored locally, perhaps due to bandwidth concerns or simply because they don't want to put their production data in a public cloud. For financial institutions, the data is extremely sensitive and is kept on-premises due to security concerns. And while a lot of the data processing is local in these cases, many of these enterprises also have some data that ends up in the cloud for analysis—after it has been anonymized, of course.

All of this results in modern hybrid environments that are increasingly complex: they have services and data in the public cloud, some other services and data in private clouds, and usually orchestration tools connecting it all together. One of the biggest contributors to the complexity is the fact that the network will continuously be changing. As cloud service providers change existing functionality or introduce new services, enterprises will need to revise their own architecture and infrastructure to keep up-to-date with the changes.

This new complexity has an effect on fixing outages and problems: the IT admin needs to know where the problem is.

Monitoring Trends

Because of the current landscape of varied environments and hybrid infrastructure, monitoring also needs to adapt. The monitoring principles that have stood for several decades are no longer sufficient (although they're still valid for traditional infrastructure). To understand exactly how monitoring needs to adapt, we first need to consider how the current IT situation changes how we monitor.

Firstly, traditional monitoring principles still apply to traditional on-premises networks and hardware, like routers, switches, servers, and so on. No changes there. Secondly, it's possible to monitor services running in a public cloud using the tools and metrics provided by the service provider. So far it all sounds fairly straightforward. But the truth is that environments contain disparate technologies and probably have services running in multiple clouds. This adds a new level of complexity to monitoring.

So how can we meet the new demands and requirements for monitoring? Here are some of the ways that monitoring needs to change to cater for the present situation.

Monitoring the Cloud

Since cloud service providers already provide monitoring of their own, network monitoring software does not need to focus on these services. Rather, it is up to the IT professionals to decide which metrics have the most value and find a way to put them in some kind of context (see the "A Single Dashboard" section below). The key here is to avoid a separate log-in for each vendor, because otherwise you might be logging in to several service providers to get data.

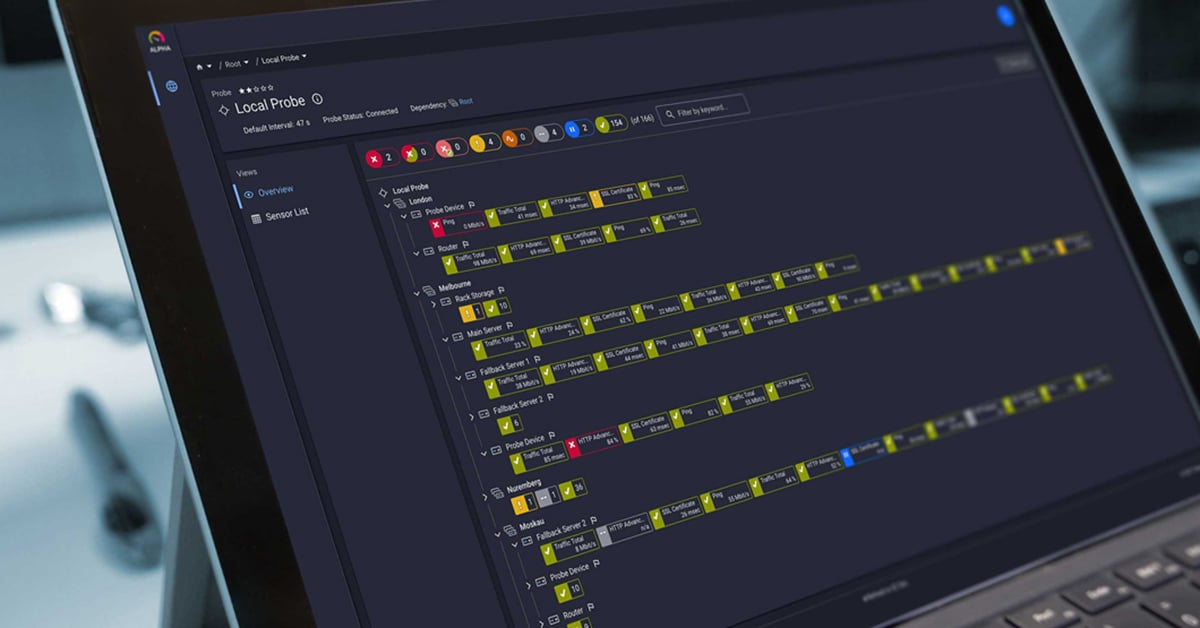

A Single Dashboard

With metrics coming from several different sources, the strategy should be building a single view of all the vital aspects of the infrastructure. This means gathering data and aggregating it, and displaying it on a single dashboard to show a unified view of the entire infrastructure. The art of this approach will be not to produce a 1-to-1 view of the infrastructure, but rather only to collect the most important metrics in one place.
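As a rough illustration of that idea, here is a minimal Python sketch that pulls metrics from two sources and keeps only the ones judged important. Everything in it is an assumption made for the example: the collector functions, the metric names, and the values are hypothetical placeholders, not data from any real monitoring tool or provider.

```python
from typing import Callable, Iterable, List, NamedTuple, Set


class Metric(NamedTuple):
    source: str   # where the value came from, e.g. "on-prem"
    name: str     # hypothetical metric name
    value: float


def collect_on_prem() -> List[Metric]:
    # Placeholder for SNMP or agent-based collection on local hardware.
    return [
        Metric("on-prem", "switch_port_errors", 0.0),
        Metric("on-prem", "server_cpu_percent", 41.0),
        Metric("on-prem", "fan_rpm", 3200.0),
    ]


def collect_public_cloud() -> List[Metric]:
    # Placeholder for metrics fetched from a cloud provider.
    return [
        Metric("public-cloud", "vm_cpu_percent", 63.0),
        Metric("public-cloud", "billing_api_calls", 1200.0),
    ]


# The short list of metrics that deserve a place on the dashboard.
IMPORTANT: Set[str] = {"server_cpu_percent", "switch_port_errors", "vm_cpu_percent"}


def build_single_view(
    collectors: Iterable[Callable[[], List[Metric]]],
    important: Set[str],
) -> List[Metric]:
    """Gather metrics from every source, keep only the important ones."""
    view: List[Metric] = []
    for collect in collectors:
        view.extend(m for m in collect() if m.name in important)
    # Sort by source and name so the combined view is stable.
    return sorted(view, key=lambda m: (m.source, m.name))


if __name__ == "__main__":
    for metric in build_single_view([collect_on_prem, collect_public_cloud], IMPORTANT):
        print(f"{metric.source:14} {metric.name:22} {metric.value}")
```

The filter is the point of the sketch: the dashboard does not mirror every value each source can deliver, only the handful chosen up front.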

Move Towards REST API

Historically, SNMP was the go-to protocol for monitoring devices and hardware because it was ubiquitous; even to this day, just about every piece of IT hardware manufactured is accessible using SNMP. However, with the advent of cloud services and IoT devices, it will no longer be the de facto standard in the future.

Rather, the move needs to be towards REST APIs, for various reasons. For one, services running in the cloud can be queried through them. For another, more and more hardware manufacturers are providing a REST API that can be used to get data about the device's health and functioning. And using REST APIs for hardware and service monitoring has a benefit: it's possible to bring the data into the unified view I mentioned in the last point.
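To make this a little more concrete, here is a minimal Python sketch of reading health data over a REST API using only the standard library. It assumes a hypothetical endpoint that returns a flat JSON object of readings; the URL, the field names, and the response shape are invented for the example, and real devices and cloud services will each define their own.

```python
import json
import urllib.request
from typing import Callable, Dict


def http_get(url: str, timeout: float = 5.0) -> str:
    """Fetch a URL and return the response body as text."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8")


def read_health(url: str, fetch: Callable[[str], str] = http_get) -> Dict[str, float]:
    """Query a (hypothetical) health endpoint and keep the numeric readings.

    Assumes the endpoint returns a flat JSON object such as
    {"temperature_c": 41.5, "fan_rpm": 3200, "model": "x"}.
    """
    payload = json.loads(fetch(url))
    return {
        key: float(value)
        for key, value in payload.items()
        # bool is a subclass of int in Python, so exclude it explicitly.
        if isinstance(value, (int, float)) and not isinstance(value, bool)
    }


if __name__ == "__main__":
    # A stand-in for a real device, so the sketch runs without a network.
    def fake_fetch(url: str) -> str:
        return '{"temperature_c": 41.5, "fan_rpm": 3200, "model": "x", "ok": true}'

    print(read_health("https://device.example/api/health", fetch=fake_fetch))
```

Passing the fetch function in as a parameter keeps the parsing separate from the transport, so the same readings can be fed into a unified view regardless of which device or service they came from.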

Clarity After the Hype

Now that the hype around the cloud seems to be settling down, we have some more clarity about what it can and can't do, and how to best utilize its benefits. And with that clarity comes a better understanding of how to monitor it. The adoption of the cloud and integration of it into current IT infrastructure will increase over the next few years, and it will become more important to find ways of bringing disparate technologies, platforms and services into a single view.

Published by
