Have you ever been jolted awake at 3 AM by a flood of alerts that turned out to be mostly false positives? Worse still is when a critical system goes down with no notification at all. I've experienced both, and neither is any fun. Truly effective monitoring means understanding your key metrics and setting intelligent alert thresholds, so that you can pick out the alerts that matter and respond quickly to outages.

Implementing effective monitoring across critical systems

A successful monitoring strategy starts with a systematic way to determine which parts of your IT infrastructure matter most. Map business services to their technical components first, then prioritize them by their potential effect on service levels. For each critical system, choose metrics that produce actionable alerts - track error rates, response time, latency, and transaction throughput instead of just CPU and memory usage. These indicators support application monitoring best practices because they deliver early warning signals before users encounter problems.
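As a rough illustration of what tracking these metrics looks like, here is a minimal Python sketch that summarizes one time window of requests. The `Request` record, the `summarize` function, and the choice to count only 5xx responses as errors are assumptions made for this example, not part of any particular monitoring tool.

```python
import math
from dataclasses import dataclass


@dataclass
class Request:
    latency_ms: float  # time taken to serve the request
    status: int        # HTTP status code


def summarize(requests: list[Request], window_seconds: float) -> dict[str, float]:
    """Return error rate, p95 latency, and throughput for one time window."""
    if not requests:
        return {"error_rate": 0.0, "p95_latency_ms": 0.0, "throughput_rps": 0.0}

    # Assumption: only server-side failures (5xx) count as errors.
    errors = sum(1 for r in requests if r.status >= 500)

    # Nearest-rank 95th percentile of the observed latencies.
    latencies = sorted(r.latency_ms for r in requests)
    rank = max(1, math.ceil(0.95 * len(latencies)))

    return {
        "error_rate": errors / len(requests),
        "p95_latency_ms": latencies[rank - 1],
        "throughput_rps": len(requests) / window_seconds,
    }
```

A percentile is used for latency rather than an average because a handful of very slow requests can hide behind a healthy-looking mean.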

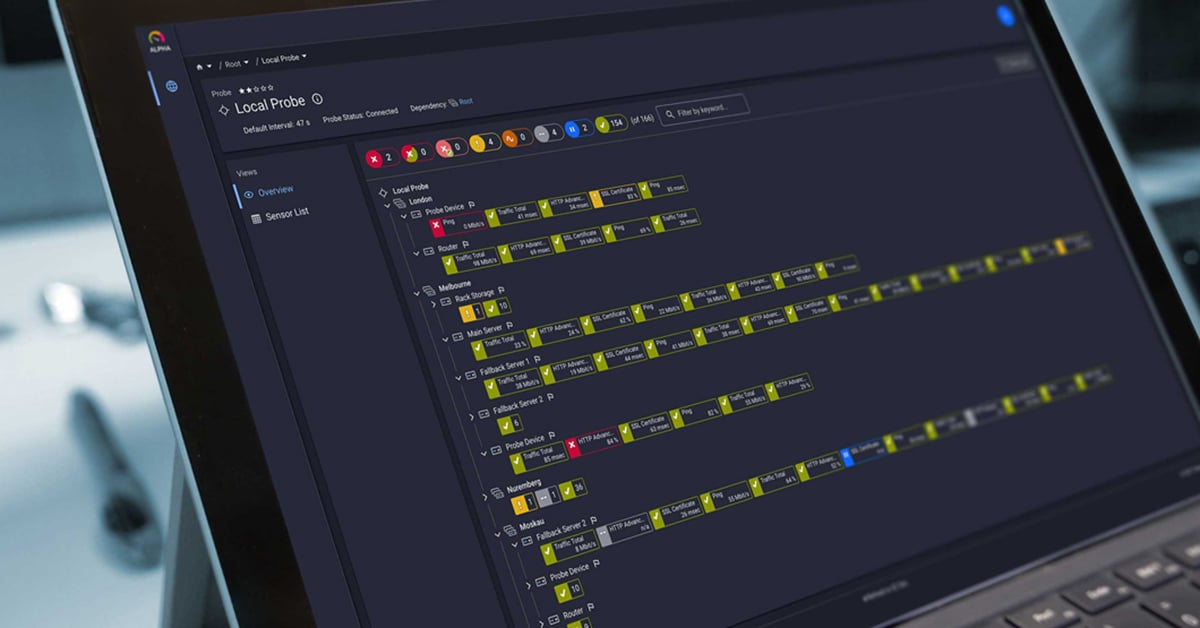

Monitoring solutions that promise end-to-end coverage must integrate across your entire technology stack, including on-premises systems, cloud services, and hybrid architectures. Your monitoring system needs to deliver observability across the whole IT infrastructure, including specialized elements such as monitoring syslog facilities with PRTG Network Monitor for network devices. Create baseline performance benchmarks so you can identify irregular patterns, and set up dashboards that display overall system health across your environments in real time.
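One simple way to turn a baseline into a check for irregular patterns is to compare new readings against the mean and standard deviation of past samples. The sketch below assumes that approach; the function names and the default of three standard deviations are illustrative choices, and real workloads with daily or weekly cycles usually need something more seasonal.

```python
from statistics import mean, stdev


def build_baseline(samples: list[float]) -> tuple[float, float]:
    """Return (mean, standard deviation) of historical samples.

    Needs at least two samples, because stdev() is undefined for fewer.
    """
    return mean(samples), stdev(samples)


def is_anomalous(value: float, baseline: tuple[float, float], max_sigma: float = 3.0) -> bool:
    """Flag a reading that sits more than max_sigma deviations from the baseline mean."""
    average, spread = baseline
    if spread == 0:
        # A perfectly flat history: any change at all is unusual.
        return value != average
    return abs(value - average) / spread > max_sigma
```

For example, a baseline built from response times of 98 to 102 ms would leave a 101 ms reading alone and flag a 120 ms one.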

This monitoring strategy keeps your infrastructure observable even as you adopt newer technologies such as containerization and Kubernetes orchestration.

Set up alerts designed to reduce unnecessary notifications while enhancing response times

The challenge of setting proper alert thresholds becomes clear when you face multiple false alarms in the middle of the night. The alerting system needs precise calibration because high sensitivity results in excessive notifications and alert fatigue (and coffee consumption) while low sensitivity risks missing vital issues until users complain. Through extensive trial and error, I discovered that the monitoring and alerting techniques from these infrastructure best practices guides deliver dependable results under high-stress conditions.
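One common way to dial down sensitivity without going blind is to alert only when a threshold is breached for several consecutive samples, so a single spike does not page anyone. This is a technique swapped in for illustration, not something taken from the guides mentioned above; the function name and parameters are hypothetical.

```python
def sustained_breach(samples: list[float], threshold: float, min_consecutive: int) -> bool:
    """Return True if `samples` exceeds `threshold` for `min_consecutive` readings in a row."""
    run = 0
    for value in samples:
        # Extend the current run on a breach, reset it on a healthy reading.
        run = run + 1 if value > threshold else 0
        if run >= min_consecutive:
            return True
    return False
```

With a threshold of 90 and `min_consecutive=3`, the series `[50, 95, 60, 96, 97, 98]` fires on the last three readings, while the isolated 95 earlier in the series is ignored.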

The most effective teams I have worked alongside all implement some variation of a tiered alert system with clear escalation workflows. Nothing fancy - just categorizing monitoring alerts by urgency: FYI alerts that are simply logged (we had 1,200 of those last month), warning alerts that are deferred until morning, and critical issues that demand immediate response.
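The three tiers map naturally onto a small routing table. Here is a minimal sketch, assuming hypothetical tier names and action labels; in practice each action would call into your logging, ticketing, or paging system.

```python
from enum import Enum


class Severity(Enum):
    INFO = "info"          # FYI: log it and move on
    WARNING = "warning"    # can wait until business hours
    CRITICAL = "critical"  # needs a human right now


# Hypothetical action labels, one per tier.
ROUTES = {
    Severity.INFO: "log_only",
    Severity.WARNING: "queue_for_morning",
    Severity.CRITICAL: "page_on_call",
}


def route(severity: Severity) -> str:
    """Return the action label for an alert of the given severity."""
    return ROUTES[severity]
```

Keeping the mapping in one table makes the escalation policy easy to review, which matters more than the code itself.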

Redundancy becomes essential during true emergencies such as unexpected 503 errors from your payment processing API. It's important to ensure on-call staff receive critical alerts through more than one communication channel including email (which frequently goes unnoticed), SMS (more reliable), and integration with PagerDuty or OpsGenie (most effective). Overkill? The necessity for redundant notifications becomes apparent when your primary notification method fails during a major outage.
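Here is a rough sketch of that redundancy in Python. Each channel is just a callable standing in for a real integration; the names are assumptions for illustration, and nothing here reflects the actual PagerDuty or OpsGenie APIs.

```python
from typing import Callable

# A channel is any function that takes the message and sends it somewhere.
Channel = Callable[[str], None]


def notify_all(message: str, channels: dict[str, Channel]) -> list[str]:
    """Send `message` on every channel and return the names that succeeded."""
    delivered = []
    for name, send in channels.items():
        try:
            send(message)
            delivered.append(name)
        except Exception:
            # One broken channel must not stop the others from being tried.
            continue
    if not delivered:
        raise RuntimeError("critical alert was not delivered on any channel")
    return delivered
```

The important design choice is that a failure on one channel is swallowed while a failure on every channel is loud, since a critical alert that reaches nobody is itself an incident.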

Alert correlation enables fast root cause identification and minimizes notification overload. A single root cause often triggers multiple related alerts simultaneously. With PRTG Network Monitor, related alerts are automatically combined into one incident instead of generating multiple separate notifications for responders. This helps teams reduce mean time to resolution (MTTR) because they can concentrate on root causes instead of symptoms. Use dependency mapping to identify relationships between components, which makes alert correlation and suppression of secondary alerts more effective.
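To make the idea of dependency-based suppression concrete, here is a minimal Python sketch. It is not how PRTG implements correlation; the `depends_on` map, the component names, and the `root_causes` function are assumptions for illustration, and the map is assumed to contain no cycles.

```python
def root_causes(alerting: set[str], depends_on: dict[str, list[str]]) -> set[str]:
    """Return the alerting components that have no alerting upstream dependency.

    `depends_on` maps each component to the components it relies on.
    Anything downstream of another alerting component is treated as a symptom.
    """

    def has_alerting_upstream(component: str, seen: set[str]) -> bool:
        for parent in depends_on.get(component, []):
            if parent in seen:
                continue  # already checked this branch
            seen.add(parent)
            if parent in alerting or has_alerting_upstream(parent, seen):
                return True
        return False

    return {c for c in alerting if not has_alerting_upstream(c, {c})}
```

If `web-frontend` depends on `checkout-api`, which depends on `payments-db`, which depends on `core-switch`, and the frontend, the API, and the switch are all alerting, only `core-switch` is reported and the other two alerts can be suppressed or grouped under it.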

Reviewing your alerting configuration regularly keeps alert quality high. Alert patterns tell you where to look: frequent false positives point to thresholds that need adjusting, while missed incidents expose monitoring gaps. Automate the response to routine problems, such as restarting a service when predefined conditions are met. Build a feedback loop by assessing alert effectiveness during incident post-mortems, so your alerting strategy keeps improving.

Conclusion

Here's the thing about monitoring and alerting - you can't just set it up once and walk away. God, I wish it were that easy! It's more like gardening - constant pruning and adjusting as things grow and change. Actually blocking time on your calendar (Friday afternoon, say) to review your alert thresholds is crucial - otherwise months go by and suddenly you're drowning in useless notifications. You gotta track the tech metrics (MTTR and all that jazz), but honestly, the business numbers like uptime are what'll save your budget next year. And please, for the love of sleep, try to get your team thinking ahead of problems instead of just reacting to alerts all day. Trust me, prevention is way less stressful.

Implementing these monitoring and alerting best practices isn't a one-time project - it's an ongoing process of refinement. Start by establishing regular reviews of your alerts and thresholds (a recurring Friday afternoon calendar block, for example), then measure what matters with both technical metrics like MTTR and business KPIs like uptime percentages, and finally, shift your team's mindset from reactive firefighting to proactive prevention.

The companies that get this right don't just have more reliable systems - they have happier customers (who can actually complete their purchases during peak times), less stressed teams (who can enjoy their weekends), and better business results (like that $2M in saved revenue I mentioned).

If you're tired of 3 AM alerts and want to see how much easier life can be with the right monitoring solution, get a free trial of PRTG Network Monitor and start putting these practices to work today.

Frequently Asked Questions

OK, but seriously - how the heck do I figure out which metrics actually matter for my setup?

Man, I wish there was a simple answer to this one! It really depends on what your business actually does. Like, if you're running an online store, you'd better be watching those checkout flows like your life depends on it (because your revenue literally does). I worked with a retailer who lost $50K in sales before they realized their payment processing API was timing out during peak hours. For SaaS folks, it's all about those API response times and error rates - users bail when things get sluggish.

BTW, if you're trying to get more visibility into what's happening under the hood, check out this guide on monitoring syslog facilities with PRTG Network Monitor. Helped me catch some weird network issues last month that would've been impossible to track down otherwise.

My team is ignoring alerts because there are too many. How do I fix this without missing something important?

Oh man, the classic "boy who cried wolf" problem! I've totally been there. My phone used to buzz so much with false alarms that I started ignoring it during dinner. Not great when something actually broke. What worked for me was a three-step cleanup: First, we categorized alerts by actual impact (not what the vendor claimed was "critical"). Second, we audited six months of alert history and killed anything that never led to action. Third - and this was key - we set up better correlation to group related alerts instead of getting 15 notifications for one problem.
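The audit in step two is easy to script if you can export your alert history. Here's a rough sketch, assuming each history entry is a pair of alert name and whether anyone acted on it; that export format and the function name are made up for this example.

```python
from collections import defaultdict


def never_actioned(history: list[tuple[str, bool]]) -> dict[str, int]:
    """Return alerts that fired but never led to action, with how often they fired.

    Each history entry is (alert_name, led_to_action).
    """
    fired: dict[str, int] = defaultdict(int)
    actioned: set[str] = set()
    for name, led_to_action in history:
        fired[name] += 1
        if led_to_action:
            actioned.add(name)
    return {name: count for name, count in fired.items() if name not in actioned}
```

Anything this returns with a high count is a candidate for deletion or demotion to a logged FYI, though it is worth a quick sanity check with the on-call team before killing it.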

If you're drowning in alerts, you might want to look into monitoring syslog facilities with PRTG Network Monitor for better filtering and correlation. Saved us from alert overload when our network expanded last year.

I keep hearing about monitoring "best practices," but what's the worst mistake you see people making?

The worst? Probably when companies drop a small fortune on fancy monitoring tools and then just… leave all the settings on default. I inherited a system once with literally 3,000 active alerts configured. THREE THOUSAND! No wonder the previous admin quit. The hard truth is that monitoring isn't a "set it up and forget it" thing - it's more like a garden that needs regular weeding. Your business changes, your infrastructure evolves, and yesterday's perfect threshold becomes today's noise generator. I now make my team review our alerts quarterly, and we're ruthless about killing off anything that hasn't been useful. Get feedback from whoever's getting woken up at 2 AM - they'll tell you which alerts are garbage.

Published by
