Virtualization can simplify a great deal in the data center: compared with native systems, virtualized systems are significantly more scalable and flexible. What typical obstacles are you likely to encounter, and what are the trends for 2017?

1. Focus on the Facts: Is Virtualization Worth It for You?

As ever with virtualization projects, the first step is a solid requirements analysis. Your current setup is the crucial factor: what does your current hardware load look like? Virtualization only pays off when you have a solid overview of your resources. How must a virtualized setup be configured to ensure that your requirements are fully covered? Only once you have accurately measured your usage of network, storage, and CPU capacity does it make sense to discuss migrating to a virtualized environment. Load tests with appropriate monitoring are the best way to gather these figures.
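A load test only helps if its raw samples are reduced to figures you can plan with. The following Python sketch assumes utilization samples have already been exported from your monitoring tool as plain lists of numbers, and summarizes them as mean, 95th percentile (nearest-rank method), and peak. The class and function names and the sample values are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class LoadSummary:
    mean: float
    p95: float
    peak: float


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("no samples to summarize")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(samples: list[float]) -> LoadSummary:
    return LoadSummary(
        mean=sum(samples) / len(samples),
        p95=percentile(samples, 95),
        peak=max(samples),
    )


# Hypothetical CPU utilization samples (%) recorded during a load test on one server
cpu_samples = [12, 15, 18, 22, 25, 30, 35, 41, 48, 52,
               55, 58, 60, 62, 65, 70, 72, 75, 80, 97]
print(summarize(cpu_samples))
```

Sizing on a high percentile rather than the mean keeps short load peaks from being averaged away; the peak shows how far the worst moment exceeds it.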

This is because virtualization quickly becomes problematic if you assess your requirements incorrectly. Too few virtual machines per host leaves the hardware underused and defeats the purpose of consolidation; conversely, unrealistic virtualization plans can overburden the hardware and cause performance problems. As a basic principle, I/O-intensive tasks are generally not well suited to virtualization. Emulated hardware quickly buckles under such workloads, especially when multiple virtual machines frequently and simultaneously access the same physical hardware.


Plan realistically: how many physical processor cores must each VM be able to use? Does the storage system perform well enough to serve as shared storage for multiple virtual machines? Don't estimate: measure!
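The planning questions above can be turned into a simple check. The sketch below assumes you have measured each VM's demand in physical cores and memory (for example, the 95th percentile from a load test) and compares the planned VMs against one host, flagging both failure modes: an underused host and an overcommitted one. The class names and the 40 %/80 % utilization bands are hypothetical choices, not established thresholds.

```python
from dataclasses import dataclass


@dataclass
class VM:
    name: str
    cores_needed: float  # measured demand in physical cores, e.g. the p95 from a load test
    memory_gb: float


@dataclass
class Host:
    physical_cores: int
    memory_gb: float


def assess_host(host: Host, vms: list[VM],
                min_util: float = 0.4, max_util: float = 0.8) -> str:
    """Classify the planned load on one host; the utilization bands are illustrative assumptions."""
    cpu = sum(vm.cores_needed for vm in vms) / host.physical_cores
    mem = sum(vm.memory_gb for vm in vms) / host.memory_gb
    detail = f"cpu={cpu:.0%}, memory={mem:.0%}"
    if max(cpu, mem) > max_util:
        return f"overcommitted ({detail})"
    if max(cpu, mem) < min_util:
        return f"underused ({detail})"
    return f"ok ({detail})"


host = Host(physical_cores=16, memory_gb=128)
planned = [VM("web", 2, 8), VM("db", 6, 48), VM("cache", 3, 32)]
print(assess_host(host, planned))
```

Taking the larger of the CPU and memory ratios means a host is judged by whichever resource runs out first.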

2. Get a Broad Perspective on Virtualization

Once you have analyzed your hardware requirements, it's time for step two: identifying the applications that are best suited to virtualized environments. Applications that place heavy loads on the processor or generate large volumes of read/write activity are mostly unsuitable for virtualized systems. The same problem arises with hardware extensions attached to the server: in many cases, expansion cards or dongles do not work in virtualized systems.

Another aspect to consider is whether your software is licensed for virtualization: your current license may not cover virtualized clients. Your supplier may also refuse to support the software on VMs, either because virtualized deployments are officially unsupported or because they are only supported on a small number of defined platforms (such as the vendor's own).
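The criteria in this step can be applied to an application inventory as a screening pass. In the sketch below, each workload record and its field names are hypothetical, and the CPU and IOPS limits are placeholders to be replaced with your own measured headroom; the function returns the reasons a workload should stay native.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    avg_cpu_pct: float          # measured on the current native server
    sustained_iops: int         # storage read/write operations per second
    needs_local_hardware: bool  # expansion card, USB dongle, etc.
    license_covers_vms: bool
    vendor_supports_vms: bool


def virtualization_blockers(w: Workload, cpu_limit: float = 70.0,
                            iops_limit: int = 5000) -> list[str]:
    """Return reasons to keep a workload native; an empty list means no blocker was found."""
    blockers = []
    if w.avg_cpu_pct > cpu_limit:
        blockers.append("CPU-intensive")
    if w.sustained_iops > iops_limit:
        blockers.append("I/O-intensive")
    if w.needs_local_hardware:
        blockers.append("depends on physical expansion hardware")
    if not w.license_covers_vms:
        blockers.append("license does not cover virtualized clients")
    if not w.vendor_supports_vms:
        blockers.append("vendor does not support VMs")
    return blockers


# Hypothetical inventory entries
inventory = [
    Workload("intranet", 15, 200, False, True, True),
    Workload("erp-db", 85, 12000, False, True, True),
    Workload("cad-license-server", 5, 50, True, True, False),
]
for w in inventory:
    print(w.name, virtualization_blockers(w) or "candidate for virtualization")
```

Listing every blocker, rather than stopping at the first, shows whether a workload could be fixed (for example, by renegotiating a license) or must stay on native hardware.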

3. Understand Your Network Status Precisely

If you have already virtualized your environment, your aim should be a comprehensive overview of everything that is happening in it. Networks in virtualized environments depend heavily on how efficiently hypervisors, VMs, and hardware work together, so keep a close eye on this interplay. Where are your network's bottlenecks, and have you specified and configured the hardware appropriately for virtualization? Smart network monitoring lets you recognize problems as they develop. If you monitor host servers, operating systems, and VMs individually, you'll quickly identify errors and unexpected overloads that would otherwise degrade your performance.
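Finding bottlenecks usually comes down to comparing traffic with capacity for each interface, both on the host and on each VM. This sketch assumes you can read a cumulative byte counter per interface twice, a fixed interval apart, as SNMP interface counters typically provide; utilization is then (delta bytes × 8) / (interval × link speed). The interface names, figures, and 80 % threshold are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class InterfaceSample:
    name: str               # e.g. a host uplink or a VM's virtual NIC
    bytes_before: int       # octet counter at the start of the interval
    bytes_after: int        # octet counter at the end of the interval
    interval_s: float
    link_speed_bps: float
    counter_bits: int = 64  # 32-bit counters wrap far sooner on fast links


def utilization(s: InterfaceSample) -> float:
    """Fraction of link capacity used: (delta bytes * 8) / (interval * link speed)."""
    delta = (s.bytes_after - s.bytes_before) % (2 ** s.counter_bits)  # tolerates one counter wrap
    return delta * 8 / (s.interval_s * s.link_speed_bps)


def bottlenecks(samples: list[InterfaceSample], threshold: float = 0.8) -> list[str]:
    return [s.name for s in samples if utilization(s) > threshold]


samples = [
    InterfaceSample("host1/uplink", 0, 67_500_000_000, 60, 10e9),      # 90 % of 10 Gbit/s
    InterfaceSample("host1/vm-web/vnic0", 0, 1_500_000_000, 60, 1e9),  # 20 % of 1 Gbit/s
]
print(bottlenecks(samples))
```

Checking host uplinks and virtual NICs with the same function makes it visible when several moderately busy VMs add up to a saturated physical link.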

4. Recognize Traffic Patterns Early On

Hardware outages and load peaks are catastrophic for virtual networks in particular. When compute nodes, storage units, or switches go down, multiple VMs and the applications they run are affected at once. Even when the hardware itself keeps running, unforeseen network or load spikes hit VMs particularly hard and can cause them to fail.

To cope with this, you need to know your network traffic intimately and, with the help of analytical tools, learn to recognize patterns in it. This makes it easier to scale resources in line with demand and, as far as possible, to balance load peaks before they occur. Keep all your services online by understanding their traffic down to the last detail. When do peaks occur? Which services and hosts are particularly affected by them? This knowledge gives you an overall understanding of your infrastructure. If the network is regularly overloaded, it's time to consider moving the affected systems out of the virtualized environment.
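A first step toward recognizing traffic patterns is a daily profile: average the traffic for each hour of the day and flag the hours that stand well above the baseline. The sketch assumes timestamped traffic samples for one service; the function names, the threshold of 1.5 times the baseline, and the sample data are hypothetical.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean


def hourly_profile(samples: list[tuple[datetime, float]]) -> dict[int, float]:
    """Average traffic (e.g. Mbit/s) for each hour of the day, across all days sampled."""
    buckets: dict[int, list[float]] = defaultdict(list)
    for timestamp, traffic in samples:
        buckets[timestamp.hour].append(traffic)
    return {hour: mean(values) for hour, values in sorted(buckets.items())}


def peak_hours(profile: dict[int, float], factor: float = 1.5) -> list[int]:
    """Hours whose average exceeds `factor` times the average over all hours."""
    baseline = mean(profile.values())
    return [hour for hour, avg in profile.items() if avg > factor * baseline]


# Hypothetical two days of hourly samples for one service: busy at 09:00 and 14:00
samples = [
    (datetime(2017, 3, day, hour), 400.0 if hour in (9, 14) else 100.0)
    for day in (1, 2)
    for hour in range(24)
]
print(peak_hours(hourly_profile(samples)))
```

Running the same profile per host and per service answers the questions above: when peaks occur and which systems they hit.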

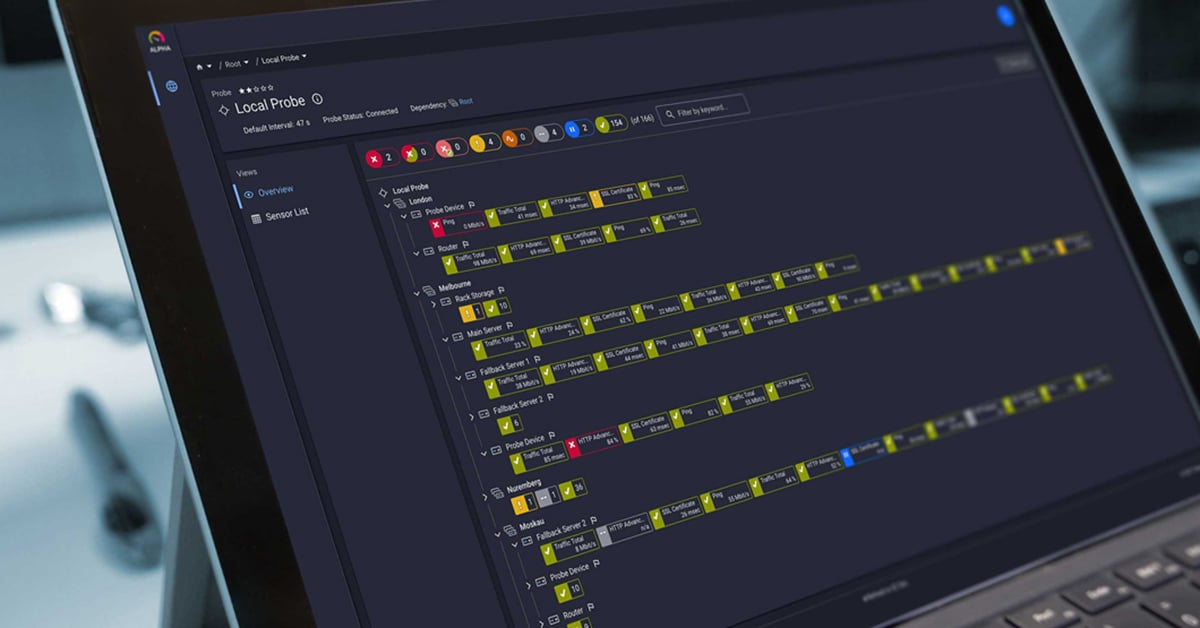

5. Unified Monitoring for Virtualized Systems

If you've migrated your applications to VMs, integrate them fully into your unified monitoring. Virtualized systems must be monitored at least as closely and comprehensively as native hardware. In principle, they should be watched even more closely, because there are more potential bottlenecks, and you can only take advantage of scalability if you know the loads imposed on your systems well.

Comprehensive unified monitoring makes it possible for you to monitor individual load demands, including network, memory, and operating system demands, all from a single tool. This means that you can keep an eye on both virtual resources and physical hardware.
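What a unified view adds is that physical hosts and the VMs running on them are evaluated together, against the same limits and in one report. The sketch below models that as a simple tree; the class, metric names, limits, and readings are hypothetical, and in practice a monitoring tool would supply the readings.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    kind: str                  # "host" for physical hardware, "vm" for a guest
    metrics: dict[str, float]  # utilization as a fraction, e.g. {"cpu": 0.72}
    children: list["Node"] = field(default_factory=list)


def check(node: Node, limits: dict[str, float], path: str = "") -> list[str]:
    """Walk a host and its VMs in one pass and report every metric above its limit."""
    here = f"{path}/{node.name}"
    alerts = [
        f"{here} ({node.kind}): {metric} at {value:.0%}"
        for metric, value in node.metrics.items()
        if value > limits.get(metric, 1.0)
    ]
    for child in node.children:
        alerts.extend(check(child, limits, here))
    return alerts


host = Node("host1", "host", {"cpu": 0.65, "memory": 0.90, "network": 0.40}, [
    Node("vm-db", "vm", {"cpu": 0.95, "memory": 0.60}),
    Node("vm-web", "vm", {"cpu": 0.20, "memory": 0.35}),
])
for alert in check(host, limits={"cpu": 0.85, "memory": 0.85, "network": 0.80}):
    print(alert)
```

Because each alert carries its full path, a VM problem is always reported together with the physical host it runs on.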

6. Reduce Complexity with Converged Systems

Modern hypervisors and smart cloud software mean that on-premises systems, private and public cloud infrastructure can now be rolled out and monitored as a cohesive whole. These "converged systems" are more closely aligned with physical hardware than traditional virtualized systems, thereby allowing highly scalable, hybrid architectures and maximizing levels of integration. By using standardized hardware and appropriate systems administration techniques, it's possible to achieve an IT environment that optimizes compatibility and integration.

In converged systems, new hardware is provisioned automatically and added to the compute or storage pool, the opposite of traditional manual configuration. The biggest disadvantage, however, is vendor lock-in, since all components and software come from a single provider.

7. Compliance or Performance?

The requirements placed on modern IT continue to grow in complexity. On the one hand, infrastructure, its performance, and installed apps must become more agile and scalable than ever before. On the other hand, legal and regulatory requirements are becoming increasingly restrictive, and with them, the impact of compliance standards and security requirements increases. These two trends, and the requirements that they generate, are often contradictory, creating a classic conflict of interest that requires careful planning and infrastructure monitoring.

Work with your legal or compliance teams to identify which systems and data are so business critical and vulnerable that they can only run in an environment separate from other systems, behind their own firewall. These services and data won't move to the cloud in the foreseeable future, not even to a private cloud. If compliance requirements are particularly strict, there is no benefit in taking on the risk of virtualized hardware and shared storage, which only creates uncertainty about where critical data is physically stored.
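Once the legal or compliance teams have classified each system, the placement decision can be written down as an explicit rule so that it is applied consistently. The sketch below is one hypothetical policy, not a regulatory standard: systems that are both business critical and vulnerable, or subject to strict data-location rules, stay in a dedicated environment. The middle tier and all names are assumptions added for illustration.

```python
from enum import Enum


class Placement(Enum):
    DEDICATED = "separate environment behind its own firewall"
    PRIVATE_ONLY = "virtualized, on private infrastructure only"
    UNRESTRICTED = "virtualized or public cloud"


def placement_for(business_critical: bool, vulnerable: bool,
                  strict_location_rules: bool) -> Placement:
    """Map a compliance classification to a hosting decision (hypothetical policy)."""
    if strict_location_rules or (business_critical and vulnerable):
        # Shared storage would blur where the data physically resides.
        return Placement.DEDICATED
    if business_critical or vulnerable:
        return Placement.PRIVATE_ONLY
    return Placement.UNRESTRICTED


# Hypothetical classification results: (business_critical, vulnerable, strict_location_rules)
systems = {
    "patient-records": (True, True, True),
    "hr-portal": (False, True, False),
    "public-website": (False, False, False),
}
for name, flags in systems.items():
    print(name, "->", placement_for(*flags).value)
```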

8. Let Software Take Control: SDN

Topics such as Software Defined Storage (SDS) and Software Defined Computing (SDC) have been debated for a long time and are already widely used in practice. This form of hardware management has the advantage that cheaper, off-the-shelf products can be controlled and allocated by a software layer, with potential benefits in flexibility and cost reduction. At the same time, this approach often meets the needs of business departments seeking greater agility for their projects and systems.

SDS and SDC have now been joined by Software Defined Networking (SDN). The same basic principle applies: the control system is decoupled from the hardware. In theory, this makes it possible to build the network from simpler hardware components and implement the complex control layer in software. Currently, however, this trend is being held back by the differing approaches adopted by various manufacturers and by the fact that, in most cases, existing network hardware is not suitable for SDN, which makes the potential savings an even more remote prospect.
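The principle of decoupling control from hardware can be sketched in a few lines: the switches below only look up a destination in a table, while a central controller that sees the whole topology computes shortest paths (breadth-first search by hop count) and writes those tables. This is a toy model under stated assumptions, not the OpenFlow protocol or any vendor's controller API; the class names and topology are hypothetical.

```python
from collections import deque
from typing import Optional


class Switch:
    """Data plane only: forwards by table lookup and contains no routing logic."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.flow_table: dict[str, str] = {}  # destination switch -> next hop

    def forward(self, destination: str) -> Optional[str]:
        return self.flow_table.get(destination)


class Controller:
    """Control plane in software: sees the whole topology and programs every switch."""

    def __init__(self, links: dict[str, list[str]]) -> None:
        self.links = links

    def first_hops(self, source: str) -> dict[str, str]:
        """Breadth-first search: next hop on a fewest-hops path to every reachable switch."""
        first_hop: dict[str, str] = {}
        visited = {source}
        queue = deque()
        for neighbor in self.links[source]:
            visited.add(neighbor)
            first_hop[neighbor] = neighbor
            queue.append(neighbor)
        while queue:
            node = queue.popleft()
            for neighbor in self.links[node]:
                if neighbor not in visited:
                    visited.add(neighbor)
                    first_hop[neighbor] = first_hop[node]  # inherit the hop used to reach node
                    queue.append(neighbor)
        return first_hop

    def program(self, switches: dict[str, Switch]) -> None:
        for name, switch in switches.items():
            switch.flow_table = self.first_hops(name)


# Hypothetical ring of four switches: s1-s2-s3-s4-s1
links = {"s1": ["s2", "s4"], "s2": ["s1", "s3"], "s3": ["s2", "s4"], "s4": ["s3", "s1"]}
switches = {name: Switch(name) for name in links}
Controller(links).program(switches)
print(switches["s1"].forward("s3"))
```

Changing the routing policy here means changing only the controller; the switches stay as simple as they are, which is the cost argument SDN makes.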

Published by