3 AM (aka the 'witching hour'). Something broke. You've got dozens of open tabs with server log files that you are desperately trying to make sense of. We've all been there - you're scanning through thousands of timestamped entries, frantically looking for that one anomalous line that can tell you why your application went down, why the server lost its connection, or why all your users suddenly can't log in.

Server log files are supposed to make our lives easier, not harder. Yet without effective log monitoring in place, they're just noise. The real challenge isn't collecting more logs (we're already drowning in log data). The challenge is building a system that can tell you what's going on, in real time.

Time is of the essence when troubleshooting, and finding the fix is a race against the clock. You don't want to pore through massive log files or wait days for reports when you need to react now. That means real-time monitoring, smart aggregation, and dashboards that show you what's actually going on at a glance.

What Server Log Monitoring Actually Does

In its most basic form, log monitoring means watching what's going on. Application logs, event logs, syslog messages, firewall spew: all of these generate log files that contain performance metrics, security events, error codes, and the contextual information you need to find the root cause when something goes wrong.

Log data is everywhere, and log sources are varied. Windows servers generate event logs. Linux machines pump out syslog messages. Applications write in whatever log format the developer chose at the time. Network devices like firewalls and switches spit out log messages too. Cloud platforms from AWS, Azure, and Google have their own super special ways of recording logs. It can all be pretty overwhelming!

So how do you cope? Good server log monitoring takes all of these scattered sources and gives you a way to see them as a single, unified whole. That means collecting logs from multiple systems, parsing them into a readable format, and setting up real-time monitoring so that you get notified when something actually requires your attention. Clarity over chatter, in other words.

The Fundamentals of Log Monitoring

If your log monitoring is set up properly, you'll find a few key things in place. You don't have to be a log management expert, but it helps to be familiar with the various options and to know what you're looking for.

1. Log ingestion is where everything begins. You need a system to ingest logs from all your different sources. PRTG supports several ingestion methods, including:

- Syslog collection: PRTG's Syslog Receiver sensor, set to listen on port 514, for capturing logs from network devices, firewalls, and Linux servers

- Windows event monitoring: Event Log sensors for local Windows event logs or WMI Event Log sensors for remote monitoring across multiple Windows servers

- Custom log formats: Script v2 sensors or Python Script Advanced sensors (the latter is now deprecated, so Script v2 is the better choice) to parse application-specific log formats that don't conform to standard log types

- Direct file monitoring: PRTG file sensors to monitor specific log files for performance counters or application-specific events
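
As a rough illustration of the custom-format route, the Python sketch below counts error and warning lines in an application log and prints a JSON result. The log format (`LEVEL message` per line), the channel names, and the exact JSON shape are our assumptions, modeled on how Script v2 sensors report channels; check the PRTG manual for the schema your version expects before deploying anything like this.

```python
import json
import sys


def summarize(lines):
    """Count ERROR and WARN lines in a hypothetical 'LEVEL message' log format."""
    counts = {"ERROR": 0, "WARN": 0}
    for line in lines:
        level = line.split(" ", 1)[0].strip().upper()
        if level in counts:
            counts[level] += 1
    # Assumed Script v2-style result: version, status, message, and a list of channels.
    return {
        "version": 2,
        "status": "ok",
        "message": f"{counts['ERROR']} errors, {counts['WARN']} warnings",
        "channels": [
            {"id": 10, "name": "Errors", "type": "integer", "value": counts["ERROR"]},
            {"id": 11, "name": "Warnings", "type": "integer", "value": counts["WARN"]},
        ],
    }


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: pass the path of the log file to scan as the first argument.
    with open(sys.argv[1], encoding="utf-8", errors="replace") as f:
        print(json.dumps(summarize(f)))
```

In practice you would also want to remember how far into the file you read last time, so each run only counts new lines.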

2. Parsing and correlation is the next step. Raw log files can be unruly, and each type of system outputs an entirely different format. You need to extract meaningful information, such as IP addresses, usernames, error codes, and timestamps, and normalize the data so you can compare events across systems. Patterns begin to emerge at this stage: a flood of failed logins from a single IP address, bottlenecks occurring at the same time across multiple application servers, security events that line up with configuration changes.
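
To make the normalization step concrete, here is a minimal Python sketch that maps two differently formatted failed-login lines, an OpenSSH-style syslog message and a hypothetical JSON application log, onto one common record with a UTC timestamp, source, username, and IP. The JSON field names are assumptions for illustration, and the regex only handles IPv4 addresses.

```python
import json
import re
from datetime import datetime, timezone

# OpenSSH-style failure message; the syslog prefix before "Failed" varies by setup.
SSH_FAIL = re.compile(
    r"Failed password for (?:invalid user )?(?P<user>\S+) from (?P<ip>[\d.]+)"
)


def normalize(line, received_at):
    """Return a common record dict for a failed login, or None for anything else."""
    line = line.strip()
    if line.startswith("{"):
        # Hypothetical app format; assumes "ts" is ISO 8601 with a UTC offset.
        data = json.loads(line)
        if data.get("event") != "login_failed":
            return None
        ts = datetime.fromisoformat(data["ts"]).astimezone(timezone.utc)
        return {"time": ts, "source": "app", "user": data["user"], "ip": data["client_ip"]}
    m = SSH_FAIL.search(line)
    if m:
        # Classic syslog timestamps carry no year or zone, so use the receive time.
        return {
            "time": received_at.astimezone(timezone.utc),
            "source": "sshd",
            "user": m.group("user"),
            "ip": m.group("ip"),
        }
    return None
```

Once both sources produce the same record shape, grouping or counting by `ip` or `user` works the same way regardless of where the line came from.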

3. Aggregation helps you manage log volume. You don't want to receive an alert for every single log entry, so group related log entries:

- Track patterns rather than individual events (500 failed logins in 5 minutes, not an alert for every single attempt)

- Compress older logs or archive them to less expensive storage, while keeping recent logs in easily accessible formats

- Establish thresholds that make sense for your environment so you are alerted to important items
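
The "500 failed logins in 5 minutes" idea is a sliding-window count. Here is a minimal Python sketch of one way to do it, keyed by something like a source IP; the class name, the default threshold and window, and the choice to alert once per crossing are illustrative assumptions, not PRTG settings.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta


class WindowThreshold:
    """Flag a key once its event count inside a sliding time window reaches a threshold."""

    def __init__(self, threshold=500, window=timedelta(minutes=5)):
        self.threshold = threshold
        self.window = window
        self.events = defaultdict(deque)  # key -> timestamps still inside the window
        self.alerting = set()             # keys currently over the threshold

    def add(self, key, ts: datetime):
        """Record one event; return True only when this event pushes the key over the threshold."""
        q = self.events[key]
        q.append(ts)
        # Drop timestamps that slid out of the window (assumes events arrive in time order).
        while q and ts - q[0] > self.window:
            q.popleft()
        if len(q) >= self.threshold:
            if key not in self.alerting:
                self.alerting.add(key)
                return True
            return False  # still over the threshold, but we already alerted
        self.alerting.discard(key)  # back under the threshold, so a later burst alerts again
        return False
```

Alerting once per crossing, instead of on every event above the threshold, is what keeps a single brute-force run from producing hundreds of notifications.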

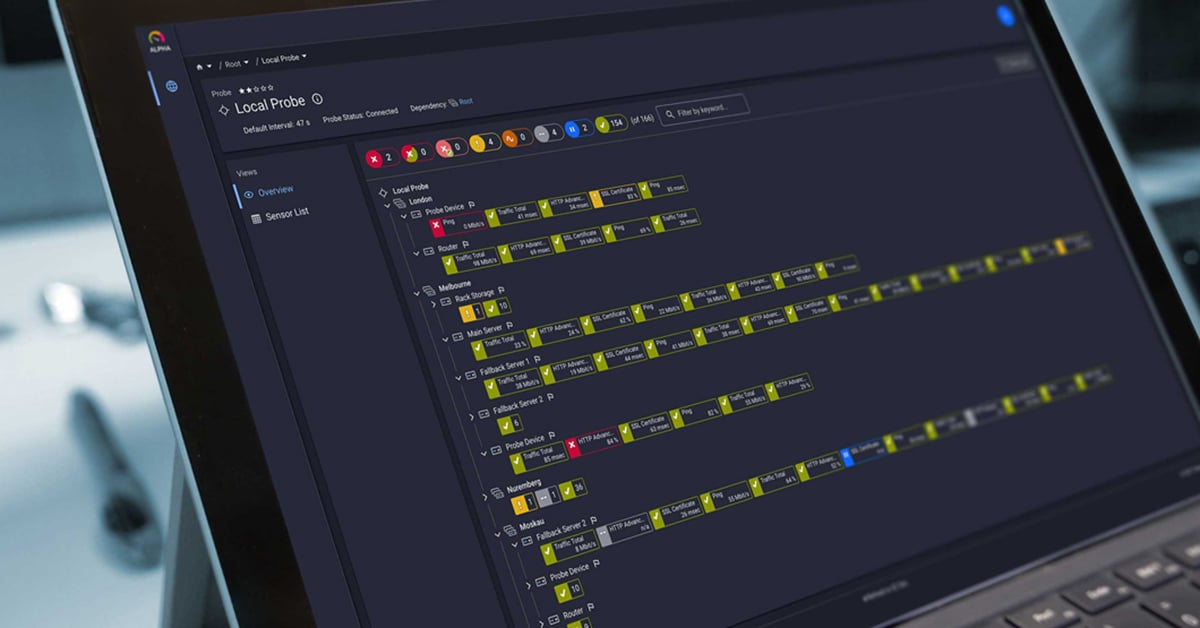

4. Visualization and dashboards make all that log data actionable. Real-time dashboards provide a high-level view of the current state of your infrastructure. When something is broken, you can drill down into specific log entries, filter by severity or source, and use that information to troubleshoot. PRTG's built-in dashboards provide you with preconfigured log visualizations, but you can also build custom dashboards to combine log data with other performance metrics for a holistic view of what's happening.

Three Awesome PRTG Sensors for Server Log Monitoring

Let's focus a bit more on some of the sensors I mentioned. PRTG gives you a few different options for getting logs into the system, and which sensors you use depends on your environment and what you're trying to accomplish. Three core sensors cover most log monitoring scenarios:

Syslog Receiver Sensor

This is your bread and butter if you want to do any kind of centralized log monitoring. This sensor sets up a Syslog server listening on a given port (typically 514) and processes any logs coming in from your network devices, firewalls, Linux servers, and anything else that sends syslog messages. You can filter on message severity, source, or content, and set up alerts based on specific events or strings in log messages.

It's your best bet for setting up a centralized log aggregation point that collects logs from many different systems in one place.
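
Severity filtering is possible because every standard syslog message starts with a priority value in angle brackets, where PRI = facility × 8 + severity (defined in RFC 5424, and RFC 3164 before it). Here is a minimal Python sketch of decoding that value and keeping only messages at a given severity or worse, roughly what a severity filter does; the function names and the default cutoff are our own choices.

```python
import re

# Syslog severities 0..7, most urgent first.
SEVERITIES = ["emerg", "alert", "crit", "err", "warning", "notice", "info", "debug"]
PRI_RE = re.compile(r"^<(\d{1,3})>")


def decode_pri(message):
    """Return (facility, severity_name) from a raw syslog message, or None if no valid PRI."""
    m = PRI_RE.match(message)
    if not m:
        return None
    pri = int(m.group(1))
    if pri > 191:  # facility 23 (local7) * 8 + severity 7 is the largest valid value
        return None
    facility, severity = divmod(pri, 8)
    return facility, SEVERITIES[severity]


def at_least(messages, min_severity="err"):
    """Keep messages whose severity is min_severity or more urgent (lower number)."""
    cutoff = SEVERITIES.index(min_severity)
    kept = []
    for msg in messages:
        decoded = decode_pri(msg)
        if decoded and SEVERITIES.index(decoded[1]) <= cutoff:
            kept.append(msg)
    return kept
```

For example, `<34>` decodes to facility 4 (auth) with severity 2 (crit), so it passes an `err` cutoff, while `<165>` (facility 20, severity notice) does not.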

Event Log (Windows API) Sensor

Windows machines have a long history of leaving logs lying around for someone to read. The Windows Event Log sensor watches Windows Application, System, Security, DNS Server, and other log files directly using the Windows API.

It's a really clean, efficient way to monitor Windows security events, application errors, or general system warnings without needing to parse plaintext files.

WMI Event Log Sensor

Similar to the Windows Event Log sensor above, but this one uses WMI to remotely monitor Windows systems.

This gives you a bit more flexibility to monitor event logs across multiple Windows servers from a central PRTG installation. Same filtering options, but great for environments where you need to monitor logs on dozens or hundreds of Windows machines without installing agents on each one.

Use Cases: Where Log Monitoring Saves Your Bacon

Security threat detection. It's the big one these days (or so I've heard).

- Firewall logs, authentication systems, access logs, all of these are recording who's trying to get into your network and whether they're actually succeeding

- The key is being able to correlate these security events as they happen so that you know a brute-force login attempt is underway, some new person logged in from a previously unknown location, or an attacker is moving laterally across your network after getting in

- Syslog Receiver sensor can collect logs from your firewalls and security appliances, while Event Log sensors can monitor Windows security audit events

- Set thresholds for failed login attempts properly, and you'll get a notification before someone actually finds their way in
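
Flagging "someone logged in from a previously unknown location" can start as simply as remembering which (user, location) pairs you have already seen. Here is a minimal Python sketch, assuming your login events already carry a location label (for example a country code from a GeoIP lookup, which is not shown); the class and method names are hypothetical.

```python
class NewLocationDetector:
    """Flag successful logins from a location not previously seen for that user."""

    def __init__(self):
        self.seen = {}  # user -> set of locations observed so far (in memory only)

    def check(self, user, location):
        """Return True if the user has login history and this location is not in it.

        The very first login for a user only builds the baseline and is never flagged.
        """
        known = self.seen.setdefault(user, set())
        is_new = bool(known) and location not in known
        known.add(location)
        return is_new
```

A real deployment would persist the `seen` history between restarts and probably age out old locations, but the core check stays this small.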

Application performance monitoring is another big use case.

- "The application is slow" is an unhelpful complaint from a user or manager. You need to know why. Slow database query? Memory leak in the application? Network latency between services?

- Correlating application logs with server performance counters can let you spot the bottleneck and fix it before it becomes a user experience issue

- PRTG's file sensors can monitor the actual application log files to catch the errors in flight

- Or you can use custom script sensors to parse those application log formats and extract the key performance metrics
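
For the custom-parser route, "extract the key performance metrics" often means pulling a duration out of each request line and summarizing it. Here is a minimal Python sketch, assuming a hypothetical `duration_ms=<number>` field in the application log; the 95th percentile uses the nearest-rank method.

```python
import re

# Hypothetical field written by the application for each request.
DURATION_RE = re.compile(r"duration_ms=(\d+(?:\.\d+)?)")


def latency_summary(lines):
    """Return count, max, and nearest-rank p95 of the duration_ms values in log lines."""
    values = []
    for line in lines:
        m = DURATION_RE.search(line)
        if m:
            values.append(float(m.group(1)))
    if not values:
        return {"count": 0, "max": None, "p95": None}
    values.sort()
    # Nearest-rank p95: 1-based rank ceil(0.95 * n), computed with integer arithmetic.
    rank = (95 * len(values) + 99) // 100
    return {"count": len(values), "max": values[-1], "p95": values[rank - 1]}
```

Tracking p95 alongside the maximum tells you whether "the application is slow" means a handful of outliers or a broad slowdown.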

Infrastructure monitoring and troubleshooting becomes a million times easier when all your logs are centralized.

- Ever had a Kubernetes pod that kept crashing, so you had to SSH into nodes and run kubectl commands to grab the logs before the pod restarted?

- With centralized log monitoring, all of those logs have already been collected, indexed, and made searchable

- You can trace the lifecycle of a single Kubernetes pod, see all the errors it was throwing, and debug the issue without running around playing whack-a-mole with ephemeral containers
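
Once logs are centralized, tracing a pod's lifecycle is mostly a filter and a sort. Here is a minimal Python sketch over already-collected records; the `LogRecord` fields and the pod names are hypothetical, since the real shape depends on how your collector labels Kubernetes logs.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class LogRecord:
    time: datetime
    pod: str
    level: str
    msg: str


def pod_timeline(records, pod_prefix):
    """Return records for pods whose name starts with pod_prefix, oldest first.

    A prefix match can follow a workload across replacement pods, whose
    generated names typically share the Deployment/ReplicaSet prefix.
    """
    matching = [r for r in records if r.pod.startswith(pod_prefix)]
    return sorted(matching, key=lambda r: r.time)


def errors_only(timeline):
    """Keep only error-level entries from a timeline."""
    return [r for r in timeline if r.level == "error"]
```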

Building a Scalable (and Realistic) Workflow

The truth is that you don't, and can't, monitor everything all the time. The log volume generated by modern IT infrastructure would bring any system to its knees if you tried to capture everything at maximum verbosity. It's just not sustainable.

Be strategic about what you collect, how long you keep it, and when to alert. Start by identifying your critical log sources. Which systems are critical? Which high-risk areas are most likely to see security threats? Which applications do most end-user tickets revolve around? Focus your log monitoring efforts on those first, then expand.

Set up real-time monitoring and alerting for high-priority events: failed authentication, application crashes, security policy violations, etc. These should immediately trigger notifications. Use PRTG's notification system to send emails, text messages, or integrate with your existing incident management workflow. Less time-critical events can be batched up into daily or weekly reports that you review manually.
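
The split between "notify now" and "batch into a report" can be expressed as a small routing rule. Here is a minimal Python sketch; the immediate categories mirror the examples above, while the category labels, the function names, and the digest grouped by category are assumptions, not how PRTG's notification system is configured.

```python
from collections import Counter

# Categories that should trigger a notification immediately (examples from the text).
IMMEDIATE = {"auth_failure", "app_crash", "policy_violation"}


def route(events):
    """Split events into (notify_now, digest), where digest counts the rest by category."""
    notify_now = []
    digest = Counter()
    for event in events:
        if event["category"] in IMMEDIATE:
            notify_now.append(event)
        else:
            digest[event["category"]] += 1
    return notify_now, digest


def format_digest(digest):
    """Render a plain-text summary, most frequent category first, for a periodic report."""
    return "\n".join(f"{cat}: {n}" for cat, n in digest.most_common())
```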

Think about log retention from the start. How long do you actually need to keep logs around for compliance reasons? What's your storage budget? Maybe you keep detailed logs for 30 days, summary logs for 90 days, and then just specific security event logs beyond that.
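
The example tiers above (detailed logs for 30 days, summaries for 90, security events beyond that) translate into a simple age-based policy. Here is a minimal Python sketch; the kind labels and the "archive" outcome for older security logs are assumptions, so substitute whatever your compliance requirements actually say.

```python
from datetime import datetime, timedelta


def retention_action(kind: str, created: datetime, now: datetime) -> str:
    """Decide what to do with a log object based on its kind and age.

    kind is one of "detailed", "summary", or "security" (hypothetical labels).
    """
    age = now - created
    if kind == "detailed":
        return "keep" if age <= timedelta(days=30) else "delete"
    if kind == "summary":
        return "keep" if age <= timedelta(days=90) else "delete"
    if kind == "security":
        # Retained past 90 days in cheaper storage; the final limit is a compliance decision.
        return "keep" if age <= timedelta(days=90) else "archive"
    raise ValueError(f"unknown log kind: {kind!r}")
```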

Don't neglect automation either. Manual log review is a recipe for burnout. Set up filters to automatically tag logs by severity, use correlation rules to connect related events across different log sources, and build dashboards that surface your most critical metrics.
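
Automatic severity tagging can start as an ordered list of pattern rules where the first match wins. Here is a minimal Python sketch; the patterns and tag names are illustrative assumptions and would need tuning for your own log sources.

```python
import re

# Ordered (tag, pattern) rules; the first matching rule wins.
RULES = [
    ("critical", re.compile(r"\b(panic|fatal|out of memory|segfault)\b", re.IGNORECASE)),
    ("error", re.compile(r"\b(error|exception|failed)\b", re.IGNORECASE)),
    ("warning", re.compile(r"\b(warn(ing)?|timeout|retry(ing)?)\b", re.IGNORECASE)),
]


def tag(line, default="info"):
    """Return the tag of the first rule whose pattern matches the line, else the default."""
    for name, pattern in RULES:
        if pattern.search(line):
            return name
    return default
```

Ordering the rules from most to least severe means a line like "fatal error" is tagged critical rather than error.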

Making Logs Work for You, Not Against You

Server log monitoring is the process of turning chaos into clarity. All your systems are already generating the data you need to understand what's going on, troubleshoot when things break, and detect security threats. The question is whether you have the right tools and processes to actually use that data.

You don't have to reinvent the wheel here. Start small. Pick one critical system or application and focus on setting up centralized log collection and a dashboard showing you the key metrics that matter to that service. Build up and expand as you see what works and what you can use.

PRTG's sensor-based architecture makes this whole process practical. You can combine Windows Event Log sensors for Windows event logs, Syslog Receiver sensors for logs from network devices and servers, and your own custom log parsers for specific applications if you need them.

Start monitoring in minutes, then expand as your infrastructure and needs grow. There's no need for a perfect, enterprise-wide log monitoring solution on day one.

Ready to get a handle on your logs? Download PRTG for free (no credit card required), set up your first log monitoring sensors today, and see if it works for your environment.

Published by