What is observability? And what do World War II planes have to do with it? In the very first episode of The Monitoring Experts podcast by Paessler, we get into these questions (and much more). You can listen to the full discussion, or read on below to find out how Greg Campion, our monitoring expert for the episode, defines observability.

What is observability?

Greg Campion, who has worked in both IT and software development, has a lot of thoughts about observability. So much so that he wrote a blog post about it way back in 2019 and spoke about it in a video about the future of infrastructure.

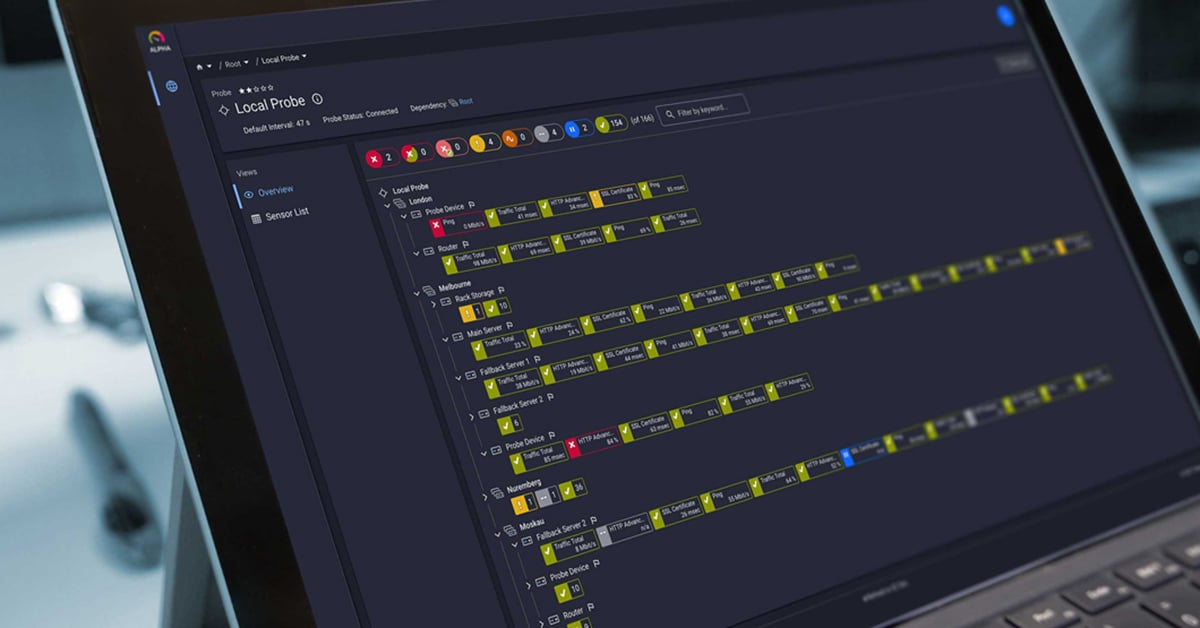

So how does he define observability? For Greg, it’s about taking in as much of a system as you can, rather than looking at one specific thing, so that you can draw conclusions about what’s going on in the system.

“I think...what's behind that term is just the ability to observe things from a much wider perspective,” says Greg. He later clarifies that an observable system, when built properly, is one where you can constantly ask new questions and then analyze and dig through the data to find the answers you need. Specifically, it’s about discovering the unknown unknowns, which are typically the things that bring down your systems. As Greg says, they are “things that nobody thought would ever happen, which is this confluence of like three or four different things that happened at the same exact time.”

Avoiding survival bias with observability

Observability can also help avoid survival bias (more commonly called survivorship bias) when it comes to monitoring systems. To explain this, Greg uses the example of how Americans repaired planes that came back from missions in Europe during World War II. They analyzed the damage on the returning planes and discovered a pattern: there were bullet holes in the fuselage, but not in the engines.

So where would you put extra armor on the planes? The answer that seems most logical is: put more armor on the parts of the plane with the most bullet holes. But this is survival bias. These planes actually survived, which suggested that damage to the fuselage was, in fact, not critical. Assuming that bullet damage would have been evenly spread across the planes, why was there a lack of bullet holes on the engines of the returning planes?

From there, the engineers concluded that the planes that did not make it back had taken damage to the engines – so that was where the extra armor went. In case you're interested in reading the story, it is beautifully told here: Abraham Wald and the Missing Bullet Holes
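If you'd like to see the effect for yourself, here is a minimal simulation sketch. It is not from the podcast, and the section names and loss probabilities are made-up assumptions chosen purely for illustration. Each simulated plane takes one hit in a random section, and hits to the engine are assumed to be far more likely to bring the plane down.

```python
import random
from collections import Counter

SECTIONS = ["fuselage", "wings", "engine"]

# Assumed chance that a single hit to a section downs the plane.
# These numbers are invented for illustration, not historical data.
LOSS_PROBABILITY = {"fuselage": 0.05, "wings": 0.10, "engine": 0.80}


def simulate(num_planes, seed=42):
    """Return (hits on all planes, hits on returning planes) per section."""
    rng = random.Random(seed)
    all_hits = Counter()
    survivor_hits = Counter()
    for _ in range(num_planes):
        # Damage is spread evenly: every section is equally likely to be hit.
        section = rng.choice(SECTIONS)
        all_hits[section] += 1
        # The plane only makes it back if the hit was not fatal.
        if rng.random() >= LOSS_PROBABILITY[section]:
            survivor_hits[section] += 1
    return all_hits, survivor_hits


def share(counts, section):
    """Fraction of recorded hits that landed on the given section."""
    total = sum(counts.values())
    return counts[section] / total if total else 0.0


all_hits, survivor_hits = simulate(10_000)
for section in SECTIONS:
    print(
        f"{section:<9} all planes: {share(all_hits, section):.1%}  "
        f"returning planes: {share(survivor_hits, section):.1%}"
    )
```

Under these assumptions, engine hits make up roughly a third of all damage but only a small fraction of the damage seen on returning planes. If you only look at the planes that came back, the engines look like the safest part of the aircraft.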

And that’s what observability helps you do – you’re not just monitoring for the things that you know can go wrong, but are also watching for the unknown unknowns.

As Greg explains, you’re basically collecting every piece of data possible, including logs, traces, monitoring metrics and other data, so that you can “immediately identify, okay, something is happening or something happened, and then you can go in and you can make intelligent queries.”
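To make the idea of “intelligent queries” a little more concrete, here is a small sketch of what asking a new question of already-collected data might look like. Everything in it is a hypothetical assumption for illustration: the event fields, the sample data and the `query` helper are not taken from any particular observability product.

```python
from collections import Counter

# Hypothetical telemetry events (logs, metrics and traces in one store).
# The field names are illustrative assumptions.
EVENTS = [
    {"kind": "log", "service": "checkout", "region": "eu", "level": "error", "msg": "timeout"},
    {"kind": "metric", "service": "checkout", "region": "eu", "name": "latency_ms", "value": 950},
    {"kind": "trace", "service": "payments", "region": "eu", "duration_ms": 1200, "status": "error"},
    {"kind": "log", "service": "checkout", "region": "us", "level": "info", "msg": "ok"},
    {"kind": "trace", "service": "payments", "region": "us", "duration_ms": 80, "status": "ok"},
]


def query(events, where, group_by):
    """Count the events matching `where`, grouped by the given field names."""
    counts = Counter()
    for event in events:
        if where(event):
            key = tuple(event.get(field) for field in group_by)
            counts[key] += 1
    return counts


def is_failure(event):
    """Treat error-level logs and error-status traces as failures."""
    return event.get("level") == "error" or event.get("status") == "error"


# Two questions nobody had to anticipate when the data was collected.
failures_by_region = query(EVENTS, is_failure, ["region"])
failures_by_service_region = query(EVENTS, is_failure, ["service", "region"])

print(failures_by_region)
print(failures_by_service_region)
```

The point of the sketch is that the grouping fields are chosen at query time. Because the raw events were kept with all their context, you can slice them by region, by service, or by any other field once something unexpected happens, instead of relying only on the dashboards you set up in advance.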

Subscribe to the podcast

Greg also covered a lot more in his explanation of observability, including how it has developed over the years and how it differs from monitoring, so take a listen here. And subscribe to The Monitoring Experts podcast to get more monitoring deep dives, best practices and insights from all kinds of monitoring experts in different fields.

Published by