I've spent over a decade in network security, and here's something I've learned the hard way: breaches rarely announce themselves with obvious alerts. Most security incidents start with subtle anomalies in network traffic that seem insignificant - until suddenly they're not. I remember one financial services client who dismissed unusual authentication patterns as "just IT testing something" only to discover a week later they'd been thoroughly compromised. By the time everyone notices, you're already in damage control mode. I've watched companies struggle through the aftermath: critical systems offline during peak business hours, sensitive data exposed, and those awkward conversations with executives about compliance violations nobody saw coming.

The good news? Network anomaly detection technology has improved tremendously. You're no longer limited to running post-mortems to figure out what happened. Today's tools can identify potential threats in real time, while there's still time to intervene. In this guide, I'll share practical insights: detection methods that actually work in production environments, systems that balance sensitivity with practicality, and tools you can implement to protect your network without needing a security team the size of a small army.

Network anomaly detection techniques using machine learning

Let's talk about how these systems actually determine what's "normal" on your network - though "normal" is always a bit of a moving target. It starts with statistical methods that examine your historical network data to establish baselines - essentially creating a fingerprint of your typical network behavior. Some approaches use straightforward fixed thresholds (anything over X gets flagged), while others employ more sophisticated statistical models. For IT teams, this means getting actionable alerts when something truly unusual happens - like traffic surging during off-hours or access patterns that don't match your organization's typical workflow.
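To make the baseline idea concrete, here's a minimal Python sketch of both approaches mentioned above: a fixed limit and a simple statistical model (a z-score against the historical mean). The function names, the sample numbers, and the 3-sigma cutoff are my own illustrative assumptions, not how any particular product implements it.

```python
from statistics import mean, stdev

def build_baseline(history):
    """Summarise historical samples as (mean, standard deviation)."""
    return mean(history), stdev(history)

def is_anomalous(value, baseline, z_threshold=3.0, hard_limit=None):
    """Flag a sample that breaks a fixed limit or sits far from the baseline."""
    mu, sigma = baseline
    if hard_limit is not None and value > hard_limit:
        return True  # fixed threshold: "anything over X gets flagged"
    if sigma == 0:
        return value != mu  # perfectly flat history: any change is unusual
    return abs(value - mu) / sigma > z_threshold

# Hypothetical off-hours throughput samples in Mbit/s
history = [12, 15, 11, 14, 13, 12, 16, 14, 13, 15]
baseline = build_baseline(history)

print(is_anomalous(14, baseline))  # a typical off-hours value
print(is_anomalous(95, baseline))  # an off-hours traffic surge
```

In practice you'd keep separate baselines per metric and per device, but the comparison itself rarely gets more complicated than this.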

This is where machine learning really shines compared to traditional approaches, though it's not without its own challenges. Unsupervised learning algorithms (including K-means clustering and autoencoders, if you want to get technical) can identify patterns without needing someone to label examples beforehand. These anomaly detection techniques essentially group similar network behaviors together and flag anything that doesn't fit the pattern. The practical benefit? Your security team spends less time investigating false alarms and more time addressing actual threats.
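If you want to see the clustering idea in miniature, the sketch below runs a bare-bones K-means over unlabeled flow records and flags anything that lands far from every cluster. It's deliberately pure Python with a deterministic start so it's easy to follow. The two features, the sample flows, and the "3x the worst training distance" cutoff are all assumptions I made for illustration, and a real deployment would scale the features first and lean on a proper ML library.

```python
import math

def nearest(point, centroids):
    """Return (index, distance) of the centroid closest to point."""
    distances = [math.dist(point, c) for c in centroids]
    idx = min(range(len(centroids)), key=distances.__getitem__)
    return idx, distances[idx]

def kmeans(points, k, iterations=20):
    """Plain k-means with a deterministic farthest-point start."""
    centroids = [points[0]]
    while len(centroids) < k:
        # next seed: the point whose nearest centroid is farthest away
        centroids.append(max(points, key=lambda p: nearest(p, centroids)[1]))
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[nearest(p, centroids)[0]].append(p)
        # move each centroid to the mean of its cluster (keep it if the cluster is empty)
        centroids = [
            tuple(sum(axis) / len(cluster) for axis in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return centroids

def is_outlier(point, centroids, threshold):
    """A point is an outlier when it is far from every learned cluster."""
    return nearest(point, centroids)[1] > threshold

# Hypothetical unlabeled flows: (connections per minute, KB per connection)
flows = [(10, 2), (11, 2.5), (9, 1.8), (10, 2.2), (12, 2.1),
         (50, 40), (52, 38), (49, 41), (51, 39), (48, 42)]

centroids = kmeans(flows, k=2)
# Assumed cutoff: three times the worst distance seen in the training data
threshold = 3 * max(nearest(p, centroids)[1] for p in flows)

print(is_outlier((10.5, 2.1), centroids, threshold))  # resembles the first group
print(is_outlier((200, 1), centroids, threshold))     # resembles neither group
```

Notice that nobody labeled anything here: the two "normal" behaviors fall out of the data, and the outlier is simply whatever doesn't belong to either.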

But what if you've already seen these attacks before? When you've got labeled datasets from previous incidents, supervised machine learning becomes your best friend. These algorithms learn from your historical data - they've seen what anomalies looked like before, so they know what to look for now. Neural networks and decision trees (among other approaches) sift through mountains of network data to spot subtle patterns that might indicate something fishy. I've seen security teams cut their incident response times in half after implementing these systems, catching potential breaches before they escalate into full-blown crises.
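Here's roughly what "learning from labeled incidents" looks like at its smallest. The sketch trains a one-level decision tree (often called a decision stump) on labeled samples and uses it to classify new ones. It's a toy stand-in for the real models mentioned above, and the features, labels, and function names are hypothetical.

```python
def train_stump(samples, labels):
    """Find the (feature, threshold) split with the fewest training errors.

    The stump predicts 'malicious' when the chosen feature exceeds the threshold.
    """
    best = None
    for f in range(len(samples[0])):
        values = sorted({s[f] for s in samples})
        # candidate thresholds sit midway between consecutive observed values
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            errors = sum((s[f] > t) != y for s, y in zip(samples, labels))
            if best is None or errors < best[0]:
                best = (errors, f, t)
    if best is None:
        raise ValueError("need at least two distinct values in some feature")
    _, feature, threshold = best
    return feature, threshold

def predict(stump, sample):
    feature, threshold = stump
    return sample[feature] > threshold

# Hypothetical labeled history: (failed logins per hour, distinct ports contacted)
samples = [(2, 5), (1, 8), (3, 6), (0, 7), (40, 6), (55, 9), (38, 5), (60, 7)]
labels = [False, False, False, False, True, True, True, True]  # True = past incident

stump = train_stump(samples, labels)
print(stump)                    # which feature and cutoff the data supports
print(predict(stump, (45, 6)))  # resembles earlier incidents
print(predict(stump, (2, 9)))   # resembles normal activity
```

The point is that the cutoff comes from your own incident history rather than a guess. The flip side is that a supervised model only recognizes what it has been shown, which is why it pairs well with the unsupervised methods above.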

In my experience, the most effective security strategies don't rely on just one approach - they combine multiple methods into what we call hybrid systems. AI anomaly detection keeps getting smarter, and real-time network anomaly detection via machine learning has come a long way in recent years. Organizations that apply the approaches described in "5 monitoring strategies for cyber security in OT" can significantly strengthen their defenses with tools like PRTG Network Monitor. These systems adapt as your network evolves and as new threats emerge - giving you scalable protection that works across even the most complex environments, though you'll still need skilled people to make the most of them.
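One simple way to think about a hybrid system is as a vote between independent detectors. The sketch below assumes three hypothetical checks (a fixed limit, a deviation from a baseline, and a pattern learned from past incidents) and raises an alert only when at least two agree. The detector names, the hard-coded baseline figures, and the voting rule are illustrative assumptions, not a description of any specific product.

```python
def hybrid_verdict(event, detectors, min_votes=2):
    """Return (is_alert, names_of_detectors_that_fired)."""
    fired = [name for name, check in detectors.items() if check(event)]
    return len(fired) >= min_votes, fired

# Hypothetical detectors; baseline mean (120 Mbit/s) and spread (30) are made up
detectors = {
    "fixed_threshold": lambda e: e["mbps"] > 800,
    "baseline_deviation": lambda e: abs(e["mbps"] - 120) / 30 > 3,
    "known_bad_pattern": lambda e: e["failed_logins"] > 20,
}

suspicious = {"mbps": 300, "failed_logins": 45}
routine = {"mbps": 130, "failed_logins": 1}

print(hybrid_verdict(suspicious, detectors))
print(hybrid_verdict(routine, detectors))
```

Requiring agreement trades a little sensitivity for a lot less noise. Whether two votes is the right bar depends on how costly a missed detection is in your environment.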

Network anomaly detection tools and implementation

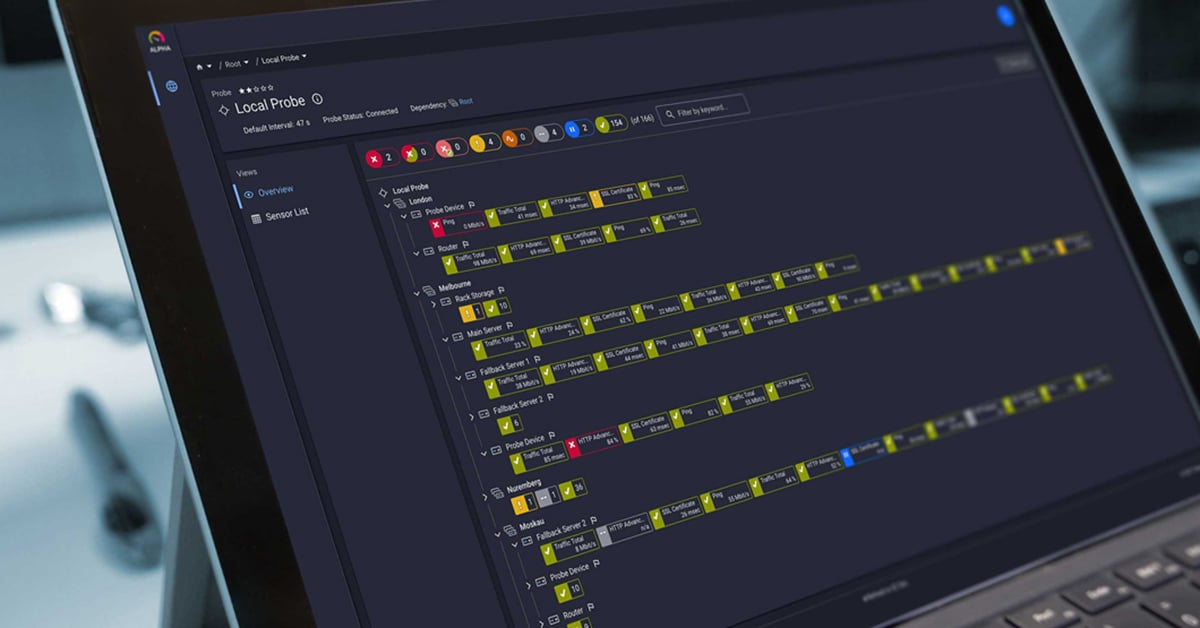

When shopping for anomaly detection tools, what features should top your list? First, look for solutions that give you visibility across your entire network - from traditional IT to operational technology (OT) environments. You'll want hybrid monitoring capabilities that bridge these historically separate worlds.

Next, think about deployment flexibility. Do you need on-prem? Cloud? Both? I've seen too many companies buy solutions that don't fit their actual infrastructure needs. And don't overlook alerting capabilities - nothing worse than a great detection system that sends critical alerts to an email inbox nobody checks! Make sure you can route notifications where they'll actually get seen.

Look, bolting new security tools onto your existing stack is usually a nightmare, but it doesn't have to be. Your anomaly detection system needs to play well with firewalls, SIEM systems, and whatever threat intel you're using. Without this integration, you'll end up with security data scattered across multiple dashboards - I've been there, and trust me, nobody has time for that kind of context-switching during an incident.

What makes PRTG Network Monitor interesting is how their sensors work. Instead of a one-size-fits-all approach, they've built different sensors for specific monitoring needs. Some watch bandwidth (because who hasn't had that mysterious network slowdown?), others keep tabs on access patterns, and they even have specialized ones for IoT devices - which, let's be honest, are security nightmares waiting to happen. This isn't just marketing fluff - having the right sensors in the right places makes the difference between actionable alerts and drowning in noise. The beauty is you can mix and match to fit what your network actually looks like, not what some vendor thinks it should be.

When it comes to implementation, don't let perfect be the enemy of good. Start by figuring out what actually matters in your network - where's your sensitive data, what systems would cause real pain if compromised? Map that out (even a whiteboard sketch helps), then start placing sensors strategically. If you're dealing with industrial systems, check out "A packet too far: passive monitoring for OT networks" - it's a lifesaver for monitoring without disrupting those finicky operational technologies. I always tell clients to focus on protecting their most critical assets first, then work outward. Rome wasn't built in a day, and neither is good security monitoring.

And please, don't fall for the 'set it and forget it' myth. These systems need ongoing attention - something vendors conveniently downplay during sales pitches. You'll need to regularly tweak those detection rules and thresholds because what works today might generate a flood of false positives tomorrow. The threat landscape keeps changing, and your monitoring needs to evolve with it. What worked last quarter might miss today's emerging attack patterns.
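To show what "regularly tweak those thresholds" can look like as a routine rather than a gut call, here's a small sketch that adjusts a z-score threshold based on how analysts triaged recent alerts. The target false-positive rate, step size, and bounds are assumptions you'd set for your own team, and the function name is hypothetical.

```python
def retune_threshold(threshold, triaged_alerts, target_fp_rate=0.2,
                     step=0.25, bounds=(2.0, 6.0)):
    """Nudge a threshold using analyst feedback.

    triaged_alerts: list of booleans, True where the alert was a real issue.
    """
    if not triaged_alerts:
        return threshold  # no feedback yet, leave it alone
    fp_rate = triaged_alerts.count(False) / len(triaged_alerts)
    if fp_rate > target_fp_rate:
        threshold += step  # too noisy: loosen the threshold
    elif fp_rate < target_fp_rate / 2:
        threshold -= step  # very quiet: tighten to catch more
    low, high = bounds
    return min(max(threshold, low), high)

# Last review period: 8 of 10 alerts turned out to be false alarms
print(retune_threshold(3.0, [False] * 8 + [True] * 2))
# Every alert was real, so there's room to be more sensitive
print(retune_threshold(3.0, [True] * 10))
```

The bounds matter: without them, a noisy month can quietly push a threshold so high that the detector stops catching anything.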

Document your response procedures too - not the perfect, comprehensive runbook that no one will ever read, but practical steps for your team to follow when bleary-eyed at 2AM. Tools with AI anomaly detection capabilities can help by adapting to your network's changing patterns, but they still need human oversight to be truly effective. I've seen too many organizations with great detection capabilities but chaotic response processes that fall apart under pressure.

Conclusion

Here's the reality I've seen over and over in my consulting work: as networks grow more complex, finding genuine threats hidden in normal traffic patterns becomes nearly impossible with traditional tools. Most security teams aren't struggling to collect data - they're drowning in it.

That's why network behavior anomaly detection has become so critical. Not because it's another security buzzword (heaven knows we have enough), but because it fundamentally transforms how you protect your infrastructure.

The most successful organizations I've worked with have made this shift from exhausting reactive firefighting to proactive threat hunting. They identify potential issues before they impact critical network operations. But this requires building a sustainable approach that evolves with your environment - one that can process big data streams in real time while adapting to emerging threats, like botnets, that rule-based systems often miss. The right anomaly detection tools don't just improve security - they create breathing room for your team to focus on strategic initiatives instead of constant firefighting.

Curious to see if this approach might work in your environment? Get a free trial of PRTG Network Monitor and experience these detection capabilities without committing to a purchase.

Frequently Asked Questions

How can I determine which network anomaly detection approach is right for my organization?

After 15+ years in this field and countless client implementations, I've stopped giving one-size-fits-all answers to this question. Anyone who tells you there's a perfect solution probably has something to sell you.

The foundation of any good detection strategy starts with knowing your network inside and out. How network discovery reduces your cybersecurity risk became crystal clear to me when a healthcare client's "complete" inventory missed nearly 200 IoT devices, all connecting to their clinical systems without proper security controls. You simply can't protect what you don't know about.

For smaller teams or those new to anomaly detection, don't overthink it. Statistical methods are your friend. They won't win innovation awards, but they're practical and don't require a data science team. I worked with a mid-sized retailer who started with simple statistical baselines and caught an emerging ransomware attack before it spread beyond two endpoints. Sometimes the basics just work.

When you're protecting more complex environments or facing sophisticated threats, machine learning approaches offer better detection capabilities. But be realistic about what it takes to implement them successfully.

Whatever approach you choose, start with looser thresholds than you think you need. You can always tighten them as you learn, but getting flooded with false positives on day one is the fastest way to ensure your team starts ignoring alerts altogether. I learned this lesson the hard way at a financial services firm where we had to temporarily disable a too-sensitive system because it was generating hundreds of false alarms daily.
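Before you switch alerting on, it helps to replay a candidate threshold against data you already have and see how many alerts it would have produced. Here's a minimal sketch of that dry run using z-scores. The sample data and the list of thresholds are made up for illustration.

```python
from statistics import mean, stdev

def alerts_per_threshold(history, thresholds):
    """Count how many historical samples each z-score threshold would have flagged."""
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return {t: 0 for t in thresholds}  # flat history: nothing deviates
    return {t: sum(abs(x - mu) / sigma > t for x in history) for t in thresholds}

# Hypothetical hourly login counts from a quiet period
history = [40, 42, 38, 41, 39, 43, 40, 37, 44, 41, 39, 42, 90, 40, 38, 41]

for t, count in alerts_per_threshold(history, [1.5, 2.0, 3.0, 4.0]).items():
    print(f"z > {t}: {count} alerts")
```

If a threshold would have fired dozens of times during a period you know was uneventful, that's your cue to start looser and tighten later.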

If you're just getting started with anomaly detection, understanding your network is step one. See how network observability for enhanced network performance creates the foundation for any effective security strategy.

What are the common challenges when implementing network anomaly detection in hybrid IT/OT environments?

This is where things get tricky. IT and OT networks are like different species that don't naturally communicate well. Implementing hybrid monitoring approaches that bridge these worlds requires careful planning. OT networks often run on legacy protocols and specialized equipment that your standard IT monitoring tools just can't make sense of. Plus, many industrial systems are incredibly sensitive to extra traffic, so the kind of active scanning or polling that IT gear shrugs off can disrupt them.

Establishing accurate baselines is another challenge: OT networks typically have more predictable traffic patterns, which helps, but they also have absolutely zero tolerance for false alarms. And then there's the organizational challenge: IT and OT teams often operate in completely different worlds with different priorities and terminology. Breaking down these silos is often harder than the technical challenges.

For organizations with both IT and OT environments, check out these 5 monitoring strategies for cyber security in OT that address these unique challenges head-on.

How long does it take for network anomaly detection systems to establish reliable baselines?

Don't let vendors sell you on overnight miracles: establishing reliable baselines takes time. How much time? It varies wildly depending on your network complexity and the detection methods you're using. Simple statistical approaches might give you something usable within days, but they'll miss a lot of nuance and probably flood you with false positives. Machine learning systems typically need 2-4 weeks of 'normal' network data to create initial baselines, with continuous refinement happening over the following months.

Factors that complicate the timeline include network size, traffic volume, seasonal business patterns (like month-end processing spikes), and how frequently you make legitimate network changes.
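Recurring patterns are a big part of why baselines take time: the same traffic level can be routine at 2 PM and alarming at 3 AM. The sketch below keeps a separate baseline per hour of day and reports "learning" for any hour that doesn't have enough history yet. The bucket size, minimum sample count, and sample figures are assumptions for illustration. You could key the buckets on day of month instead to handle something like month-end spikes.

```python
from collections import defaultdict
from statistics import mean, stdev

def build_seasonal_baseline(samples, min_samples=3):
    """samples: iterable of (hour_of_day, value).

    Returns {hour: (mean, stdev)} only for hours with enough history.
    """
    buckets = defaultdict(list)
    for hour, value in samples:
        buckets[hour].append(value)
    return {h: (mean(v), stdev(v)) for h, v in buckets.items() if len(v) >= min_samples}

def check(hour, value, baseline, z_threshold=3.0):
    """Return 'learning' if the hour has no baseline yet, else 'ok' or 'anomaly'."""
    if hour not in baseline:
        return "learning"
    mu, sigma = baseline[hour]
    if sigma == 0:
        return "ok" if value == mu else "anomaly"
    return "anomaly" if abs(value - mu) / sigma > z_threshold else "ok"

# Hypothetical traffic samples in Mbit/s, tagged with the hour they were taken
samples = [(3, 10), (3, 12), (3, 11), (3, 9),
           (14, 200), (14, 220), (14, 210), (14, 190),
           (9, 150), (9, 160)]  # only two samples for 9 AM so far
baseline = build_seasonal_baseline(samples)

print(check(14, 200, baseline))  # 200 Mbit/s mid-afternoon
print(check(3, 200, baseline))   # the same 200 Mbit/s at 3 AM
print(check(9, 500, baseline))   # not enough 9 AM history yet
```

This also shows why frequent legitimate changes stretch the timeline: every time normal shifts, the affected buckets effectively go back to "learning".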

My advice? Start with loose thresholds and gradually tighten them as the system learns your environment's unique patterns. For industrial environments, "A packet too far: passive monitoring for OT networks" can significantly reduce the learning curve.

Want to accelerate the baseline process without sacrificing accuracy? See how Paessler PRTG's predictive and proactive AI features can help you get there faster.

Published by
