We live in an age of irresistible AI hype. Artificial intelligence has not only defeated the world's best Go players*, but has also revolutionized our healthcare industry and the way we find knowledge. And as if that weren't enough, the insatiable AI monster is also trying its digital hand as an artist. But what if AI has been using us for a long time to become more intuitive and human-like, and the big tech companies are doing a brilliant job of hiding it?

The folks at The Verge published a video in mid-June that approaches the topic of artificial intelligence with a soothing sobriety. The key points of this report can be summarized as follows:

- Whenever you use a CAPTCHA, you train AI in some form through labeling.

- If you use a service like Expensify, then often it's not a program that reads your receipts, but (poorly-paid) people.

- Some companies deal with this quite openly, others tend to deny it.

The question of whether CAPTCHAs and AI training go hand in hand was posed by someone on Reddit in mid-2017, and TechRadar reported on it in early 2018. That providers such as Expensify resort to human help is not really new either; WIRED, for instance, reported on it at the end of 2017.

However, the links between supposedly intelligent programs, their training and human labor are interesting, and three different options emerge:

- Option 1: We are shown an image or other file, for example in a CAPTCHA, and take over the task. We decipher an unclear text, select images with certain contents, or something similar. We do the labeling, and the image (or other file), together with the label, serves the AI as training material for a future assignment. Now one can of course object that a CAPTCHA must already know at the outset what the "correct selection/answer" is. But AI training essentially works through repeated learning of patterns, and who says that the CAPTCHA already knows (all) the right answer(s) with 100% certainty? And if you are asked to type two unclear words, one of them could be the actual CAPTCHA, and the second could be a street name from Google Street View or a text fragment from a book that the AI could not read.

- Option 2: This is a turk-ish situation, which is actually a very annoying case. The name refers to "The Turk" (see below) and has nothing to do with Turkey or a turkey. To the outside world, a certain performance is presented as the result of a machine, whereas humans were partially or even completely involved in the process. If you feel like taking over the work of machines, which then collect all the praise, you could register at Amazon Mechanical Turk. Then you can solve tasks for a few cents, such as typing words that appear in a document. Sounds like fun.

- Option 3: A combination of the two previous options which can be found in more complex projects.
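
For the curious, here is a minimal Python sketch of how the two-word trick from Option 1 could work in principle: one word with a known answer decides whether you pass, and the second, unknown word is labeled by majority vote among the humans who passed. Everything in it (the class name `TwoWordCaptcha`, the thresholds, the sample words) is a hypothetical assumption for illustration, not a description of how reCAPTCHA or any other real service is actually built.

```python
from collections import Counter


class TwoWordCaptcha:
    """Toy model: one control word (answer known) plus one unknown word.

    Hypothetical illustration only. The names and thresholds are assumptions.
    """

    def __init__(self, control_answer, min_votes=3, min_agreement=0.75):
        self.control_answer = control_answer.strip().lower()
        self.min_votes = min_votes          # votes needed before we trust a label
        self.min_agreement = min_agreement  # share of votes the top label needs
        self.votes = Counter()              # human transcriptions of the unknown word

    def submit(self, control_guess, unknown_guess):
        """Return True if the user passes; only then record their label."""
        if control_guess.strip().lower() != self.control_answer:
            return False  # failed the known word, so the second answer is ignored
        self.votes[unknown_guess.strip().lower()] += 1
        return True

    def accepted_label(self):
        """Return the majority label once enough humans agree, else None."""
        total = sum(self.votes.values())
        if total < self.min_votes:
            return None
        label, count = self.votes.most_common(1)[0]
        return label if count / total >= self.min_agreement else None


if __name__ == "__main__":
    captcha = TwoWordCaptcha(control_answer="morning")
    for guess in ["baker", "baker", "bakcr", "baker"]:
        captcha.submit("morning", guess)
    print(captcha.accepted_label())
```

The point of the sketch is the asymmetry: the system only ever checks the word it already knows, and the humans who pass quietly supply the label for the word it doesn't.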


Wait... What? 😵

Here are two short explanations that you can skip if you're super smart:

- CAPTCHA stands for "Completely Automated Public Turing test to tell Computers and Humans Apart". This security check was developed to put a stop to spam and the unwanted use of web services by automated bots, and to clearly distinguish humans from machines. reCAPTCHA is a CAPTCHA service that has been operated by Google since 2009. reCAPTCHA is also designed to digitize books as well as street names or house numbers from Google Street View.

- "The Turk" was a (mechanical) illusion that allowed a human chess master hiding inside to operate the machine. It was constructed in the late 18th century by Wolfgang von Kempelen to impress Empress Maria Theresa of Austria. The players hidden inside the machine were quite good: among others, The Turk defeated Napoleon Bonaparte and Benjamin Franklin. The unusual name of the machine refers to the oriental clothing of the figure. Why it was dressed in oriental attire is hard to make sense of today; seems like Europe was a really strange place back then. Naturally, Amazon is alluding to this illusion with its Mechanical Turk or "MTurk".

AI will of course continue to improve and at some point be able to do entirely without human help. The fact that we have not yet reached this step is reassuring and at the same time unsettling, depending on your point of view.

*On the subject of Go... just a brief defense of mankind:

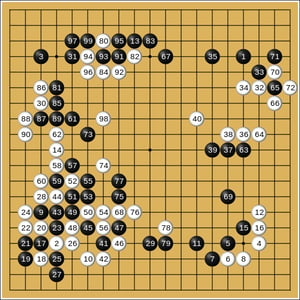

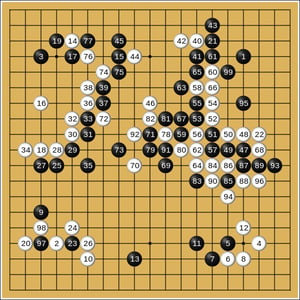

The human Go players are not as blatantly inferior as has been reported. In the 2016 match between AlphaGo and Lee Sedol, which Lee Sedol lost 4:1 after five games, remarkable things happened. On the AI side, the preparation combined two things: deep neural networks on the one hand, and something called "reinforcement learning" on the other. If you understand the ancient board game Go, you will see a subtle beauty in two moves.

- 🤖 Firstly, in game 2, AlphaGo (a program developed by Google DeepMind) played a move at move 37 that had a probability of 1:10,000 of a human playing it. This turned out to be a brilliant idea and something that a human would never have expected. Lee Sedol couldn't adapt to it and lost.

- 👨 Secondly, in game 4, Lee Sedol (playing white) also played a move that had a probability of only 1:10,000 of being played. AlphaGo wasn't prepared for the brilliant, unusual and unlikely move 78. It got confused, answered with nonsense moves afterwards and virtually self-destructed. Lee Sedol won game 4.

🥳 Even in the age of powerful and comprehensively skilled AI, mankind has a chance at its own transcendent moments.

Could proving that you're not a robot help robots to act like humans? How do you feel about that? Tell us in the comments. And make sure to fill out the reCAPTCHA. Google thanks you in advance.

Published by
