Network latency can make or break user experience. Gamers feel every millisecond of delay; video conferencing teams struggle when jittery lag ruins every session; business application users watch productivity tank as they wait for slow responses.

You've probably spent more time troubleshooting network issues than you would like. Worst of all? End-users do not care about your router settings or bandwidth. They only care that things are supposed to work fast and often do not.

Here's the thing about network latency: it's not always about throwing more bandwidth at the problem. We work with customers whose networks boast symmetrical Gigabit internet but still struggle with poor response times. We also see clients with low-throughput connections who consistently achieve low latency. Understanding the difference, and knowing which levers to pull, can save you countless hours of troubleshooting and improve application performance where it actually matters.

Network Latency Explained

Before you can fix latency problems, you need to understand what you're actually dealing with. Network latency is the time delay experienced when transferring data over a network. Technically speaking, it measures the time taken for a data packet to travel from source to destination. Latency is normally given in milliseconds.

When discussing round-trip time (RTT), we mean the time it takes for a packet to reach its destination and for the reply to make the return trip. Think of it as the delay between clicking "send" and seeing a response; it does not measure how fast data can flow through the pipe once a transfer starts.
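To see RTT in practice from code (ICMP ping often needs elevated privileges), one option is to time a TCP handshake, since opening a connection takes one SYN/SYN-ACK round trip. The sketch below is a minimal illustration, assuming the target accepts TCP connections on the given port; the function name is our own. If you pass a hostname instead of an IP address, the timing also includes DNS resolution.

```python
import socket
import time

def tcp_connect_rtt_ms(host: str, port: int = 443, timeout_s: float = 2.0) -> float:
    """Approximate RTT by timing a TCP handshake (SYN out, SYN-ACK back)."""
    start = time.perf_counter()
    # create_connection returns once the handshake completes; close it immediately
    with socket.create_connection((host, port), timeout=timeout_s):
        pass
    return (time.perf_counter() - start) * 1000.0
```

For example, `tcp_connect_rtt_ms("192.0.2.10", 443)` uses a placeholder documentation address; substitute a host on one of your own critical paths, and take several samples rather than trusting a single measurement.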

Latency often gets confused with bandwidth. Many consumers don't realize how the two differ, or that each can be high or low independently of the other. Bandwidth is the width of the pipe: it determines how much data can be transferred per second. Latency is how long it takes that data to make its journey.

Latency is entirely separate from the size of the pipe. For instance, you could have 1 Gbps of bandwidth and 150 ms of latency at the same time. That is why an internet speed test can show high Mbps values while video calls still lag or fail.
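A quick back-of-the-envelope calculation shows why both numbers matter. The sketch below uses a deliberately simplified model, assuming a single round trip plus serialization time (the time needed to push the bytes onto the link) and ignoring TCP slow start, server processing, and packet loss; the function name is illustrative.

```python
def transfer_time_ms(size_bytes: int, bandwidth_bps: float, rtt_ms: float) -> float:
    """Simplified model: one round trip plus the time to serialize the data onto the link."""
    serialization_ms = size_bytes * 8 * 1000 / bandwidth_bps
    return rtt_ms + serialization_ms

# A 1 Gbps link with 150 ms of round-trip latency
small_request = transfer_time_ms(10_000, 1e9, 150)      # 10 KB request: latency dominates
large_file = transfer_time_ms(1_000_000_000, 1e9, 150)  # 1 GB file: bandwidth dominates
print(f"10 KB: {small_request:.2f} ms, 1 GB: {large_file:.0f} ms")
```

For the 10 KB request, well over 99% of the time is latency, so a bigger pipe would barely help; only the large file benefits meaningfully from more bandwidth.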

Common Causes of High Network Latency

Several factors contribute to network latency. When you experience high latency, most reasons can be traced back to these elements:

- Physical distance between source and destination: Latency is always higher to distant locations, partly because the speed of light through fiber optic cable sets a hard lower limit.

- Transmission medium: Wired Ethernet connections typically have lower and more consistent latency than Wi-Fi, because they avoid radio interference, contention for shared airtime, and extra protocol overhead.

- Network congestion: If there's too much traffic, it can create a bottleneck, which increases latency even if your bandwidth seems sufficient.

- Processing delays: Time spent in routers and firewalls (for example, on packet inspection) and CPU load on end devices all add to round-trip time and to the overall application delay.
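The speed-of-light limit is easy to quantify. Light in optical fiber travels at roughly two-thirds of its speed in a vacuum, about 200,000 km/s, so every 100 km of fiber adds about 1 ms of round-trip time before any router, queue, or server is involved. A minimal sketch, assuming a straight fiber run (real cable routes are longer, so actual RTT is higher):

```python
SPEED_IN_FIBER_KM_PER_S = 200_000  # approx. two-thirds of the speed of light in a vacuum

def min_rtt_ms(distance_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone."""
    # Out and back (2x distance), converted from seconds to milliseconds
    return 2 * distance_km * 1000 / SPEED_IN_FIBER_KM_PER_S

for km in (100, 1_000, 5_000):
    print(f"{km:>5} km -> at least {min_rtt_ms(km):.0f} ms RTT")
```

No amount of bandwidth or hardware tuning can push RTT below this floor; only shortening the path (for example, by serving content from closer locations) can.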

How to Reduce Latency On Your Network

Now that you know what causes latency, let's talk about fixing it. Improving latency is not as simple as pressing a button. You need to know where the delays occur, decide where your time is best spent, and then focus on improvements your users will actually notice. The following actions are the ones that move the needle.

Measure Your Baseline

Optimization is impossible if you don't know your starting point, and gut instinct about network performance isn't actionable. Use ping tests to establish a baseline RTT for your critical network paths, and measure jitter, the variation in latency over time.

Even when average latency looks acceptable, jitter can cause problems for real-time traffic. Monitor packet loss as well, because retransmissions add delay and compound your latency problems. Keep historical records and compare against them to see whether your changes actually improve things.
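Once you have ping results, a few summary numbers make a usable baseline. The sketch below assumes you have already collected RTT samples in milliseconds, with `None` marking a probe that got no reply, and uses one common, simple jitter definition: the mean absolute difference between consecutive RTTs. Tools differ in exactly how they compute jitter (RFC 3550, for example, uses a smoothed estimate), so compare numbers produced by the same tool over time.

```python
from statistics import mean
from typing import Optional, Sequence

def baseline_stats(samples_ms: Sequence[Optional[float]]) -> dict:
    """Summarize RTT samples; None entries count as lost packets."""
    received = [s for s in samples_ms if s is not None]
    if not received:
        raise ValueError("no replies received; the path may be down")
    loss_pct = 100.0 * (len(samples_ms) - len(received)) / len(samples_ms)
    # Jitter: mean absolute change between consecutive received samples
    deltas = [abs(b - a) for a, b in zip(received, received[1:])]
    return {
        "min_ms": min(received),
        "avg_ms": mean(received),
        "max_ms": max(received),
        "jitter_ms": mean(deltas) if deltas else 0.0,
        "loss_pct": loss_pct,
    }

# Hypothetical samples from a ping run: one lost probe and one spike
print(baseline_stats([20.0, 22.0, None, 21.0, 35.0, 20.0]))
```

Here the average looks healthy at about 24 ms, but the 35 ms spike, 8 ms of jitter, and one lost probe in six are exactly the details an average hides.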

Upgrade Your Network Infrastructure

Once you have baseline measurements, take a hard look at your network infrastructure. Older routers and switches can introduce significant processing delays. If your equipment is several years old and struggling with throughput, consider whether its processing capacity matches your current network demands. New equipment that supports modern networking protocols and has more processing power can help reduce latency.

Degraded or damaged Ethernet cables can cause transmission errors, and the resulting retransmissions add latency. If you're using older Cat5 cabling, consider upgrading to Cat5e or Cat6 for better performance and reliability, especially for Gigabit connections.

For mission-critical systems, test switching from Wi-Fi to a wired connection: Wi-Fi latency is usually higher and less consistent than Ethernet latency, and industry best practice favors wired connections wherever latency matters.

Optimize Your Network Paths

Beyond your local infrastructure, the path your data takes matters just as much. Fewer hops between endpoints generally means lower latency, because every router or switch along the way adds its own processing delay. If you see poor performance to certain destinations, work with your ISP to find better routing.
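When you run a traceroute, the hop where latency jumps, and stays high for every later hop, is usually the one worth raising with your ISP. The small sketch below assumes you have already extracted per-hop RTTs (in ms) from traceroute output; the function name and sample values are hypothetical. Treat an isolated spike at a single hop with caution, since some routers deprioritize generating traceroute replies while still forwarding traffic normally.

```python
from typing import Sequence, Tuple

def largest_latency_jump(hop_rtts_ms: Sequence[float]) -> Tuple[int, float]:
    """Return (hop number, increase in ms) for the biggest RTT increase between consecutive hops."""
    best_hop, best_increase = 0, float("-inf")
    previous = 0.0  # treat the local host as 0 ms
    for hop, rtt in enumerate(hop_rtts_ms, start=1):
        increase = rtt - previous
        if increase > best_increase:
            best_hop, best_increase = hop, increase
        previous = rtt
    return best_hop, best_increase

# Hypothetical per-hop RTTs: the jump at hop 4 persists at hop 5
hop, increase = largest_latency_jump([1.2, 3.5, 4.1, 38.9, 40.2])
print(f"hop {hop}: +{increase:.1f} ms")
```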

Content delivery networks (CDNs) cache content closer to end users, which can significantly reduce latency by shortening the physical distance data needs to travel. If your organization has more than one location, direct peering arrangements can help you bypass heavily used public internet paths.

Implement Quality of Service (QoS)

Not all of your traffic is the same. Use the Quality of Service settings on your routers to prioritize the data that matters most to your operations. The following table shows an example of how traffic can be grouped into priority levels.

| Priority Level | Traffic Types |
|---|---|
| High | VoIP calls, video conferencing, online gaming, real-time collaboration tools |
| Medium | Standard web browsing, email, business applications |
| Low | Bulk file transfers, system backups, software updates |

Configure your routers to prioritize real-time application traffic over background traffic. QoS will not reduce your overall latency, but it ensures latency-sensitive traffic is served first during congestion, so critical applications stay responsive even when your network is busy.
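One common QoS scheduling approach is strict priority queuing: whenever the link is free, the router transmits the highest-priority packet that is waiting. The toy Python model below illustrates that idea using the three levels from the table; it is not any vendor's configuration. Real devices typically classify traffic by markings such as DSCP and often combine a priority queue with weighted scheduling or rate limits, because pure strict priority can starve low-priority traffic.

```python
import heapq
from itertools import count

# Lower number = higher priority, matching the High/Medium/Low levels in the table
PRIORITY = {"high": 0, "medium": 1, "low": 2}

class StrictPriorityQueue:
    """Toy egress queue: always transmits the highest-priority packet waiting."""

    def __init__(self):
        self._heap = []
        self._arrival = count()  # tie-breaker keeps FIFO order within a priority level

    def enqueue(self, packet: str, level: str) -> None:
        heapq.heappush(self._heap, (PRIORITY[level], next(self._arrival), packet))

    def dequeue(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)

q = StrictPriorityQueue()
# Packets arrive in this order while the link is congested
q.enqueue("backup-chunk-1", "low")
q.enqueue("email", "medium")
q.enqueue("voip-frame-1", "high")
q.enqueue("backup-chunk-2", "low")
q.enqueue("voip-frame-2", "high")

order = [q.dequeue() for _ in range(len(q))]
print(order)
```

Both VoIP frames leave first even though a backup chunk arrived earlier, which is the behavior you want during congestion; the backup traffic is delayed, not lost.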

Use Caching Strategically

Caching is another powerful technique for reducing perceived latency. Every request that can be served from a local cache, rather than travelling all the way to a remote server, removes network latency from that request entirely. Cache wherever you can: web proxy caches, DNS caches, and application caches all help. The first request will still be slow, but subsequent requests will be much quicker.
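The effect is easy to see with a minimal time-to-live (TTL) cache. The sketch below is hypothetical: `slow_lookup` stands in for any remote request, such as a DNS query or an HTTP call, and simulates 50 ms of latency with `time.sleep`. Entries expire after `ttl_s` seconds so stale data is eventually refreshed, the same idea DNS resolvers apply with record TTLs.

```python
import time
from typing import Callable, Dict, Hashable, Tuple

class TTLCache:
    """Minimal time-to-live cache: a cached value is reused until it expires."""

    def __init__(self, fetch: Callable[[Hashable], object], ttl_s: float):
        self._fetch = fetch  # performs the slow remote request
        self._ttl_s = ttl_s
        self._entries: Dict[Hashable, Tuple[object, float]] = {}  # key -> (value, expiry)

    def get(self, key: Hashable) -> object:
        now = time.monotonic()
        entry = self._entries.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]  # cache hit: no network round trip
        value = self._fetch(key)  # cache miss: pay the full latency once
        self._entries[key] = (value, now + self._ttl_s)
        return value

remote_calls = []

def slow_lookup(name):
    """Hypothetical stand-in for a remote request, e.g. a DNS query or HTTP call."""
    remote_calls.append(name)
    time.sleep(0.05)  # simulate 50 ms of network latency
    return f"result-for-{name}"

cache = TTLCache(slow_lookup, ttl_s=60)
for attempt in range(3):
    start = time.perf_counter()
    cache.get("intranet.example")
    print(f"request {attempt + 1}: {(time.perf_counter() - start) * 1000:.1f} ms")
print("remote calls:", len(remote_calls))
```

The first request takes roughly 50 ms; the next two return almost instantly, and only one remote call is ever made.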

Fix Specific Bottlenecks

Last but not least, address bottlenecks systematically. If firewall inspection adds latency, optimize your rule set or upgrade to more performant firewall hardware. If CPU load on a server is the problem, fix that server's performance issue. If you see latency spikes during backup windows, either schedule those jobs for off-hours or shape the traffic so that your backups cannot consume all available bandwidth.
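Traffic shaping is commonly implemented with a token bucket: tokens accumulate at the permitted rate up to a burst limit, and a packet may be sent only if enough tokens are available. The sketch below models that logic for a hypothetical backup stream, with times passed in explicitly so the behavior is deterministic. On real equipment you would configure the shaper in your router or firewall rather than write one yourself.

```python
class TokenBucket:
    """Token-bucket shaper: average rate of rate_bytes_per_s, bursts up to burst_bytes."""

    def __init__(self, rate_bytes_per_s: float, burst_bytes: float, start_s: float = 0.0):
        self.rate = rate_bytes_per_s
        self.capacity = burst_bytes
        self.tokens = burst_bytes  # start with a full bucket
        self.last_s = start_s

    def allow(self, packet_bytes: int, now_s: float) -> bool:
        # Refill for the time elapsed since the last check, capped at the burst size
        elapsed = now_s - self.last_s
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last_s = now_s
        if packet_bytes <= self.tokens:
            self.tokens -= packet_bytes
            return True  # conforms: send now
        return False  # exceeds the rate: a shaper queues it, a policer drops it

# Hypothetical backup stream limited to 1,000 bytes/s with a 1,500-byte burst
shaper = TokenBucket(rate_bytes_per_s=1_000, burst_bytes=1_500)
decisions = [shaper.allow(1_500, t) for t in (0.0, 0.5, 1.5)]
print(decisions)
```

The second packet is held back because only half a second's worth of tokens has accumulated, which leaves the remaining bandwidth free for interactive traffic.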

Monitoring Network Latency with PRTG

All these optimization strategies share one critical requirement: you need visibility into what's actually happening on your network. You can't improve what you don't monitor, and manual ping tests only provide snapshots, not a holistic view of the issue.

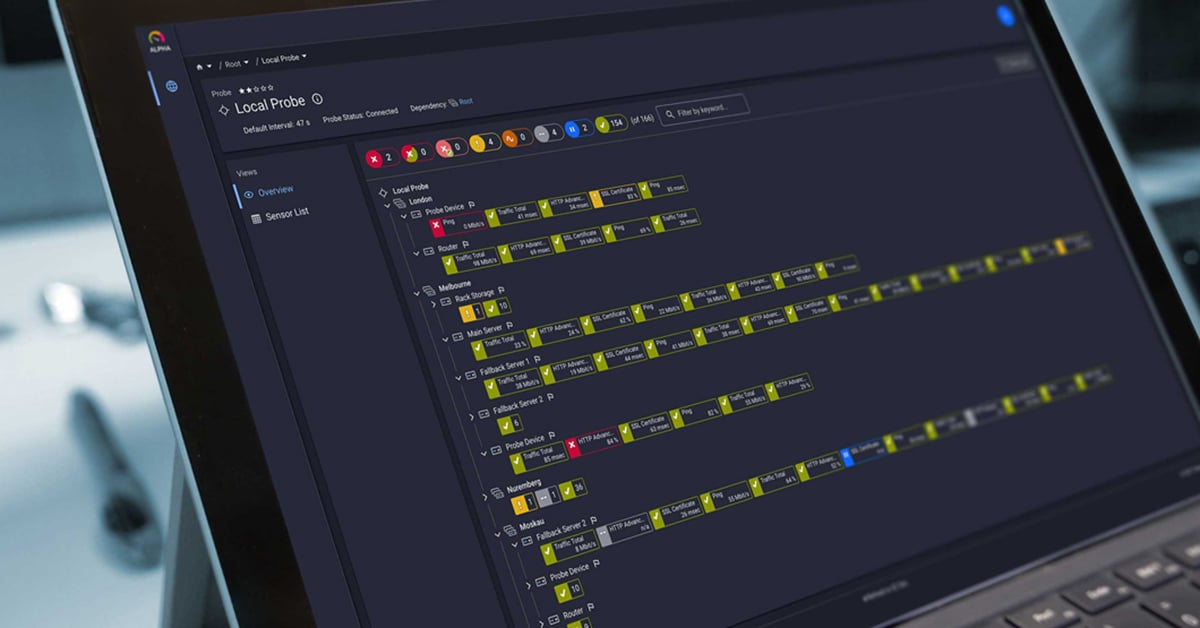

Paessler PRTG Network Monitor provides continuous visibility into network latency across your entire infrastructure, helping you spot problems before end-users complain.

Basic Latency Monitoring with Ping Sensors

PRTG comes with a Ping sensor that measures response time and packet loss for any device on your network. The Ping Jitter sensor specifically tracks jitter and other statistical variations in response times, which makes it useful for troubleshooting VoIP quality or intermittent network issues that are difficult to pin down with a basic ping test.

Set up sensors for your critical network paths and configure alerts so you are notified when response times exceed acceptable thresholds.

Geographic and Distributed Network Monitoring

For distributed networks, our Cloud Ping v2 sensor measures TCP ping times from many locations around the world. This gives you visibility into how users in different geographic regions experience your networked services. Use this to pinpoint regional latency issues and validate the performance of your CDN.

Quality of Service Monitoring for Real-Time Applications

Our Quality of Service sensors measure jitter, packet delay variation, packet loss, and other parameters critical for real-time applications. These sensors send UDP packets between two PRTG probes, allowing you to measure the actual quality of a network connection between specific endpoints.

For VoIP deployments, PRTG can monitor Cisco IP SLA metrics, giving you MOS (Mean Opinion Score) and ICPIF (Calculated Planning Impairment Factor), industry-standard metrics for assessing VoIP call quality.

Historical Data and Trend Analysis

We strongly recommend that you make use of PRTG's Historical Data function. Your network latency and other metrics are stored in PRTG, allowing you to identify trends. Are latency spikes worse during the day due to congestion? Are there problems at specific times which correlate with backup windows or other scheduled tasks?

This historical data gives your issues context, helping you decide whether you're dealing with a chronic problem that needs infrastructure investment or a periodic issue that can be remediated through better network management.

Common Latency Questions, Answered

Q: I have a fast internet connection. How do I reduce my network latency without upgrading?

A: Upgrading your internet connection is not the answer in most cases. Many latency issues have nothing to do with the speed of the connection itself. They often come down to congestion within your local network, inefficient routing, Wi-Fi problems, or a lack of traffic prioritization.

There is no point in upgrading your internet speed if the latency occurs inside your network, before traffic ever reaches your ISP. Start by measuring. Once you know where the delays are, focus on what you can do to fix them. Local network infrastructure, caching, and traffic prioritization are all optimizations that can help.

Q: How much latency is acceptable?

A: It depends on what you use your network for. The following table gives an indication of acceptable network latency for different applications.

| Application Type | Target Latency | Notes |
|---|---|---|
| Online Gaming (Competitive) | < 50ms | Critical for real-time responsiveness |
| VoIP | < 150ms (acceptable), < 100ms (good) | Anything over 150ms starts to become annoyingly laggy |
| Video Conferencing | < 150ms | Can tolerate slightly higher latency but is very sensitive to jitter |
| Web Browsing | 100-200ms | Lower is always better for user experience |
| Database Applications | Highly variable | Some apps run over high-latency links. Others will require sub-10ms |

Benchmark what you need, monitor whether you are delivering on those service levels, and then use the data to confirm whether your changes are helping.

Q: My network latency seems to spike at certain times. What is happening?

A: Periodic latency spikes often mean that you are experiencing network congestion. This is when multiple streams of traffic try to use the same network path at the same time. Routers and switches must queue packets, adding further delay to round-trip time. Scheduled backups are a common culprit: they create significant bandwidth use that network paths may not have been provisioned to handle. Peak usage hours, when many users or applications access the network at once, can also drive latency up.
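The queuing effect can be estimated directly: a packet arriving behind a full queue must wait for every queued byte to be transmitted first. A sketch of that arithmetic, assuming a single egress link and ignoring all other delays; the 1 MB queue and 100 Mbps uplink are hypothetical example values.

```python
def queueing_delay_ms(queued_bytes: int, link_rate_bps: float) -> float:
    """Wait time for a new packet stuck behind queued_bytes on a link of link_rate_bps."""
    return queued_bytes * 8 * 1000 / link_rate_bps

# A backup job keeps about 1 MB queued on a 100 Mbps uplink
print(queueing_delay_ms(1_000_000, 100e6))
```

That works out to 80 ms added to every VoIP packet waiting behind the backup, on top of the path's normal RTT.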

Specific applications that cause traffic bursts and external issues such as backbone congestion at your ISP can be problems as well. Network monitoring can help you tie latency spikes to specific events. By correlating latency to traffic patterns or specific times, you can implement traffic shaping or schedule changes to ensure backups do not consume all the bandwidth during peak hours.
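A simple first pass at that correlation is to average latency by hour of day and compare the result with your backup and peak-usage schedules. The sketch assumes you have exported timestamped RTT samples from your monitoring tool; the data format, the sample values, and the 1.5 times-the-median threshold are hypothetical choices.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean, median

def hourly_average_ms(samples):
    """Group (timestamp, rtt_ms) samples by hour of day and average each group."""
    by_hour = defaultdict(list)
    for ts, rtt_ms in samples:
        by_hour[ts.hour].append(rtt_ms)
    return {hour: mean(values) for hour, values in sorted(by_hour.items())}

def spike_hours(hourly: dict, factor: float = 1.5) -> list:
    """Hours whose average exceeds factor times the median hourly average."""
    baseline = median(list(hourly.values()))
    return [hour for hour, avg in hourly.items() if avg > factor * baseline]

# Hypothetical export: normal daytime latency, elevated during a nightly backup window
samples = [
    (datetime(2024, 5, 6, 9, 0), 22.0),
    (datetime(2024, 5, 6, 9, 30), 24.0),
    (datetime(2024, 5, 6, 14, 0), 25.0),
    (datetime(2024, 5, 6, 23, 0), 95.0),
    (datetime(2024, 5, 6, 23, 30), 110.0),
]
hourly = hourly_average_ms(samples)
print(hourly, spike_hours(hourly))
```

If the flagged hours line up with a scheduled job, that job is the first candidate for rescheduling or traffic shaping.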

Where to Go From Here

Latency reduction is not as easy as flicking a switch. There are no magic bullets when it comes to performance. Instead, successful networks require an understanding of where time is spent and where it is most critical to your users. Systematic optimization and continued monitoring can ensure your performance stays within acceptable parameters.

If you are tired of troubleshooting latency problems when they arise, get ahead of issues by monitoring. Continuous visibility into what is happening will help you know where you should be making changes and the effect that those changes are making. With 30 days of full functionality for free, PRTG Network Monitor can help you see exactly where latency is occurring on your network before you make a purchasing decision. Download your free trial and start monitoring your network today to see what areas you can optimize.

Published by
