Network latency can make or break your user experience. When data packets take too long to travel between points, you'll see dropped video calls, sluggish applications, and frustrated users. But here's the thing: you can't fix what you don't measure.

This guide walks you through everything you need to measure network latency accurately, from basic ping tests to continuous monitoring solutions. You'll learn which tools work best for different scenarios, how to interpret your results, and what to do when latency spikes.

What You'll Learn

By the end of this guide, you'll know how to:

- Measure round-trip time (RTT) using command-line tools

- Identify latency bottlenecks in your network path

- Set up continuous latency monitoring

- Troubleshoot high latency issues

- Establish baseline metrics for your network

Who this guide is for: Network engineers, systems administrators, and IT professionals responsible for network performance and troubleshooting.

Time investment: 15-20 minutes to read, 30-60 minutes to implement basic monitoring.

What Is Network Latency and Why Does It Matter?

Network latency measures the amount of time it takes for a data packet to travel from source to destination. It's typically expressed in milliseconds (ms) and directly impacts everything from video conferencing quality to application responsiveness.

There are two primary ways to measure latency:

Round-trip time (RTT): The time it takes for a packet to travel from point A to point B and back again. This is what most ping tests measure.

Time to first byte (TTFB): The time between sending a request and receiving the first byte of data back. This matters more for web applications and API calls.

High latency doesn't just slow things down. It usually arrives together with packet loss and jitter (variation in latency), because congestion tends to cause all three, and the combination degrades user experience. For real-time applications like VoIP or video conferencing, latency above 150ms becomes noticeable. Above 300ms, it's disruptive.

What Tools Can Measure Round-Trip Network Latency?

You have several options for measuring network latency, each with different strengths:

Ping: The simplest tool, available on every operating system. Sends ICMP echo requests and measures response time. Great for quick checks but limited in depth.

Traceroute (tracert on Windows): Shows latency at each hop along the network path. Essential for identifying where delays occur.

MTR (Matt's Traceroute): Combines ping and traceroute, providing continuous measurements over time. Better for spotting intermittent issues.

Netperf and iperf: Measure throughput and latency under load. Useful for testing network capacity and performance under stress.

Wireshark: Packet capture tool that analyzes TCP handshakes and timestamps. Gives you the most detailed view but requires more expertise.

Network monitoring platforms: Tools like PRTG Network Monitor provide continuous, automated latency monitoring across your entire infrastructure.

The best approach? Start with basic command-line tools for troubleshooting, then implement continuous monitoring for proactive management.

Step 1: Measure Latency with Ping

Ping is your first line of defense for latency testing. It's built into Windows, Linux, and macOS, making it universally accessible.

How to Run a Basic Ping Test

On Windows:

ping google.com

On Linux or macOS:

ping -c 10 google.com

The -c 10 flag sends exactly 10 packets, then stops. Without it, ping on Linux and macOS runs until you press Ctrl+C. Windows ping sends four packets by default; use ping -n 10 google.com to send 10.

What the Results Tell You

A typical ping response looks like this:

Reply from 142.250.185.46: bytes=32 time=14ms TTL=117

Here's what matters:

- time=14ms: This is your round-trip latency. Under 50ms is excellent for most applications.

- TTL (Time to Live): The hop budget left on the reply when it reached you. Every router on the way back decrements it by one. Not directly related to latency, but useful for troubleshooting.

- Packet loss: If you see "Request timed out," you're losing packets. Even 1-2% packet loss impacts performance.
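If you save the output of a longer ping run, you can summarize it instead of reading it line by line. The sketch below assumes the Windows-style reply format shown above; the function name summarize_ping and the sample lines are illustrative, and Linux or macOS output would need a different pattern.

```python
import re

# Matches Windows-style reply lines such as:
#   Reply from 142.250.185.46: bytes=32 time=14ms TTL=117
# "time<1ms" is also accepted and counted as 1 ms (an upper bound).
REPLY_RE = re.compile(r"Reply from (\S+): bytes=(\d+) time[=<](\d+)ms TTL=(\d+)")


def summarize_ping(lines):
    """Return sent/received counts, loss percentage, and RTT stats in ms."""
    rtts = []
    lost = 0
    for line in lines:
        match = REPLY_RE.search(line)
        if match:
            rtts.append(int(match.group(3)))
        elif "Request timed out" in line:
            lost += 1
    sent = len(rtts) + lost
    return {
        "sent": sent,
        "received": len(rtts),
        "loss_pct": 100.0 * lost / sent if sent else 0.0,
        "min_ms": min(rtts) if rtts else None,
        "avg_ms": sum(rtts) / len(rtts) if rtts else None,
        "max_ms": max(rtts) if rtts else None,
    }


# Illustrative sample, not real measurements.
sample = [
    "Reply from 142.250.185.46: bytes=32 time=14ms TTL=117",
    "Reply from 142.250.185.46: bytes=32 time=16ms TTL=117",
    "Request timed out.",
    "Reply from 142.250.185.46: bytes=32 time=15ms TTL=117",
]
print(summarize_ping(sample))
```

One lost reply out of four is already 25% loss, which is why short runs exaggerate loss figures and longer runs give a fairer picture.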

Common Mistakes to Avoid

Don't test to just one destination. A single ping to google.com tells you about your internet connection, not your internal network. Test multiple endpoints:

- Your default gateway (router)

- Internal servers

- External websites

- Your ISP's DNS servers

Also, don't rely on a single ping. Run at least 10-20 packets to get an average. Latency varies, and one measurement doesn't show the full picture.
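Testing several endpoints with a fixed packet count is easy to script. The following is a minimal sketch: the helper names build_ping_command and ping_all are made up for this example, and the target addresses are placeholders for your own gateway, servers, and DNS resolvers. It relies only on the -n (Windows) and -c (Linux, macOS) count flags.

```python
import platform
import subprocess


def build_ping_command(host, count=10, system=None):
    """Build a ping command that sends `count` packets and then stops."""
    system = system or platform.system()
    # Windows uses -n for the packet count; Linux and macOS use -c.
    count_flag = "-n" if system == "Windows" else "-c"
    return ["ping", count_flag, str(count), host]


def ping_all(hosts, count=10):
    """Run ping against each host and return the raw output per host."""
    results = {}
    for host in hosts:
        completed = subprocess.run(
            build_ping_command(host, count),
            capture_output=True,
            text=True,
            check=False,  # a non-zero exit code usually just means lost packets
        )
        results[host] = completed.stdout
    return results


# Show the commands that would run (placeholder targets, nothing is sent here).
for target in ["192.168.1.1", "google.com"]:
    print(" ".join(build_ping_command(target, count=10, system="Linux")))
```

Calling ping_all(["192.168.1.1", "google.com"]) runs the tests and returns the raw text for each target, which you can then compare side by side.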

Pro Tip: Test Your Default Gateway First

Before testing external sites, ping your router's IP address (usually 192.168.1.1 or 10.0.0.1). If you see high latency here, the problem is on your local network, not your ISP or the internet.

Step 2: Identify Bottlenecks with Traceroute

Ping tells you there's a problem. Traceroute shows you where it is.

How Traceroute Works

Traceroute sends packets with incrementally increasing TTL values. Each router along the path decrements the TTL and sends back a "time exceeded" message when TTL hits zero. This reveals every hop and its latency.

On Windows:

tracert google.com

On Linux or macOS:

traceroute google.com

Reading Traceroute Results

You'll see output like this:

1 2 ms 1 ms 2 ms 192.168.1.1
2 12 ms 11 ms 13 ms 10.45.2.1
3 45 ms 89 ms 52 ms isp-router.net
4 14 ms 13 ms 15 ms google.com

Each line shows one hop. The three time values represent three separate probes to that hop.

What to look for:

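- Sudden jumps in latency: A jump only points to a problem if it persists on the hops that follow. In the sample above, hop 3 peaks at 89ms but hop 4 is back to 14ms, so hop 3 is most likely just slow to answer traceroute probes. If hop 3 and every later hop stayed near 89ms, the delay would sit between hops 2 and 3.

- Asterisks (*): Some routers don't respond to traceroute. This is normal and doesn't indicate a problem as long as later hops still respond.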

- Consistent high latency: If every hop after a certain point shows high latency, the issue is at that router or beyond.
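The checks in this list can be automated. This sketch assumes the Windows tracert-style layout from the sample output (hop number, three probe times, host); parse_hop, find_latency_jumps, and the 20ms jump threshold are illustrative choices, not a standard. It uses the best probe time per hop and reports whether a jump carries through to later hops.

```python
def parse_hop(line):
    """Parse one hop line like '3 45 ms 89 ms 52 ms isp-router.net'."""
    tokens = line.split()
    # A probe value is any token immediately followed by "ms"; "*" probes are skipped.
    rtts = [
        float(tok.lstrip("<"))
        for tok, nxt in zip(tokens[1:], tokens[2:])
        if nxt == "ms"
    ]
    return {
        "hop": int(tokens[0]),
        "host": tokens[-1],
        "best_ms": min(rtts) if rtts else None,
    }


def find_latency_jumps(hops, jump_ms=20):
    """Return (hop_number, sustained) for each hop whose best RTT jumps by jump_ms."""
    findings = []
    for i in range(1, len(hops)):
        prev, cur = hops[i - 1], hops[i]
        if prev["best_ms"] is None or cur["best_ms"] is None:
            continue
        if cur["best_ms"] - prev["best_ms"] >= jump_ms:
            floor = prev["best_ms"] + jump_ms
            later = [h["best_ms"] for h in hops[i + 1:] if h["best_ms"] is not None]
            # Sustained means every later hop stays above the floor.
            # A jump on the final hop has no later hops and counts as sustained.
            findings.append((cur["hop"], all(rtt >= floor for rtt in later)))
    return findings


SAMPLE = [
    "1 2 ms 1 ms 2 ms 192.168.1.1",
    "2 12 ms 11 ms 13 ms 10.45.2.1",
    "3 45 ms 89 ms 52 ms isp-router.net",
    "4 14 ms 13 ms 15 ms google.com",
]
print(find_latency_jumps([parse_hop(line) for line in SAMPLE]))
```

A result marked as not sustained suggests a router that is slow to answer probes, while a sustained one is worth investigating.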

When Traceroute Isn't Enough

Traceroute gives you a snapshot, but latency changes over time. That's where MTR (Matt's Traceroute) comes in. It runs continuous traceroutes and shows statistics over time, helping you spot patterns and intermittent issues.

On Linux:

mtr google.com

MTR isn't installed by default on most systems, but it's available in most package managers.

Step 3: Analyze TCP Latency with Packet Capture

For the most accurate latency measurements, analyze actual TCP connections. This is what Reddit users recommend when they need to measure "ACTUAL network latency" beyond simple ping tests.

The SYN-ACK Method

When a TCP connection starts, the client sends a SYN packet, and the server responds with a SYN-ACK. Measured on the client, the time between these two packets is one network round trip, with no application processing mixed in.

You can capture this with Wireshark or tcpdump:

Using tcpdump on Linux:

sudo tcpdump -n -i eth0 'tcp[tcpflags] & tcp-syn != 0'

This captures SYN and SYN-ACK packets, the only ones with the SYN flag set. Replace eth0 with your interface name, then look at the timestamps to calculate the delta.
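Calculating the delta by hand gets tedious, so here is a rough sketch that pairs each SYN with its SYN-ACK. It assumes you added -tt and -n to the tcpdump command so every line starts with an epoch timestamp and numeric addresses. The function name handshake_rtts and the capture lines are illustrative, not real traffic.

```python
import re
from decimal import Decimal

# Matches lines such as:
#   1700000000.000100 IP 10.0.0.5.51514 > 93.184.216.34.443: Flags [S], seq ...
# Only [S] (SYN) and [S.] (SYN-ACK) are matched; other packets are ignored.
LINE_RE = re.compile(r"^(\d+\.\d+) IP (\S+) > (\S+): Flags \[(S\.?)\]")


def handshake_rtts(lines):
    """Pair each SYN with its SYN-ACK and return {(client, server): rtt_ms}."""
    pending = {}  # (client, server) -> timestamp of the most recent SYN
    rtts = {}
    for line in lines:
        match = LINE_RE.match(line)
        if not match:
            continue
        ts = Decimal(match.group(1))  # Decimal keeps microsecond precision
        src, dst, flags = match.group(2), match.group(3), match.group(4)
        if flags == "S":
            # A retransmitted SYN overwrites the earlier one (a simplification).
            pending[(src, dst)] = ts
        elif (dst, src) in pending:
            syn_ts = pending.pop((dst, src))
            rtts[(dst, src)] = float((ts - syn_ts) * 1000)
    return rtts


# Illustrative capture lines.
capture = [
    "1700000000.000100 IP 10.0.0.5.51514 > 93.184.216.34.443: Flags [S], seq 1, win 64240, length 0",
    "1700000000.014300 IP 93.184.216.34.443 > 10.0.0.5.51514: Flags [S.], seq 2, ack 2, win 65535, length 0",
    "1700000000.014400 IP 10.0.0.5.51514 > 93.184.216.34.443: Flags [.], ack 3, win 502, length 0",
]
for (client, server), rtt in handshake_rtts(capture).items():
    print(f"{client} -> {server}: {rtt:.1f} ms")
```

Run the capture on the client side. On the server, the SYN to SYN-ACK gap only shows how quickly the server's own stack replied.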

Why This Matters

ICMP ping uses a different protocol than your actual applications. Some routers deprioritize ICMP traffic, giving you misleading results. TCP-based measurements show what your users actually experience.

This approach is especially valuable when testing latency between two servers in the same data center or measuring end-to-end application performance.
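If you can't run a packet capture, timing a TCP connect from the client gives a similar number, because the connect call returns once the handshake completes. This is a minimal sketch using Python's standard socket module; tcp_connect_ms and sample_tcp_latency are names invented for this example.

```python
import socket
import time


def tcp_connect_ms(host, port, timeout=3.0):
    """Time a TCP three-way handshake to host:port, in milliseconds.

    Pass an IP address to keep DNS resolution time out of the result.
    """
    start = time.perf_counter()
    # create_connection returns once the handshake has completed.
    with socket.create_connection((host, port), timeout=timeout):
        elapsed = time.perf_counter() - start
    return elapsed * 1000.0


def sample_tcp_latency(host, port, count=10):
    """Collect `count` connect-time samples; failed attempts are recorded as None."""
    samples = []
    for _ in range(count):
        try:
            samples.append(tcp_connect_ms(host, port))
        except OSError:  # covers timeouts and refused connections
            samples.append(None)
    return samples
```

For example, sample_tcp_latency("10.0.0.20", 443) would take ten samples against a server at that placeholder address. The result includes a small amount of local socket overhead, so treat it as an approximation of the SYN to SYN-ACK time.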

Step 4: Set Up Continuous Network Latency Monitoring

One-time tests are great for troubleshooting, but they don't show you trends or alert you to problems before users complain. That's where continuous monitoring comes in.

What to Monitor

Don't just monitor latency. Track these related metrics together:

- Latency (RTT): Your primary metric, measured in milliseconds

- Packet loss: Percentage of packets that don't arrive

- Jitter: Variation in latency over time

- Bandwidth utilization: High traffic can cause latency spikes

- CPU and memory on network devices: Overloaded routers introduce latency
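Packet loss and jitter both fall out of the same series of RTT samples. The sketch below uses one common, simple definition of jitter, the mean absolute difference between consecutive RTTs; monitoring tools may use a different formula. The function names and sample values are illustrative.

```python
def packet_loss_pct(samples):
    """Percentage of probes that got no reply (recorded as None)."""
    if not samples:
        return 0.0
    lost = sum(1 for s in samples if s is None)
    return 100.0 * lost / len(samples)


def mean_jitter_ms(samples):
    """Mean absolute difference between consecutive successful RTTs, in ms."""
    rtts = [s for s in samples if s is not None]
    if len(rtts) < 2:
        return 0.0
    diffs = [abs(b - a) for a, b in zip(rtts, rtts[1:])]
    return sum(diffs) / len(diffs)


# Illustrative RTT samples in ms; None marks a lost probe.
samples = [20, 22, None, 19, 35, 21]
print(f"loss: {packet_loss_pct(samples):.1f}%")
print(f"jitter: {mean_jitter_ms(samples):.2f} ms")
```

A perfectly steady series such as [20, 20, 20] yields zero jitter under this definition.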

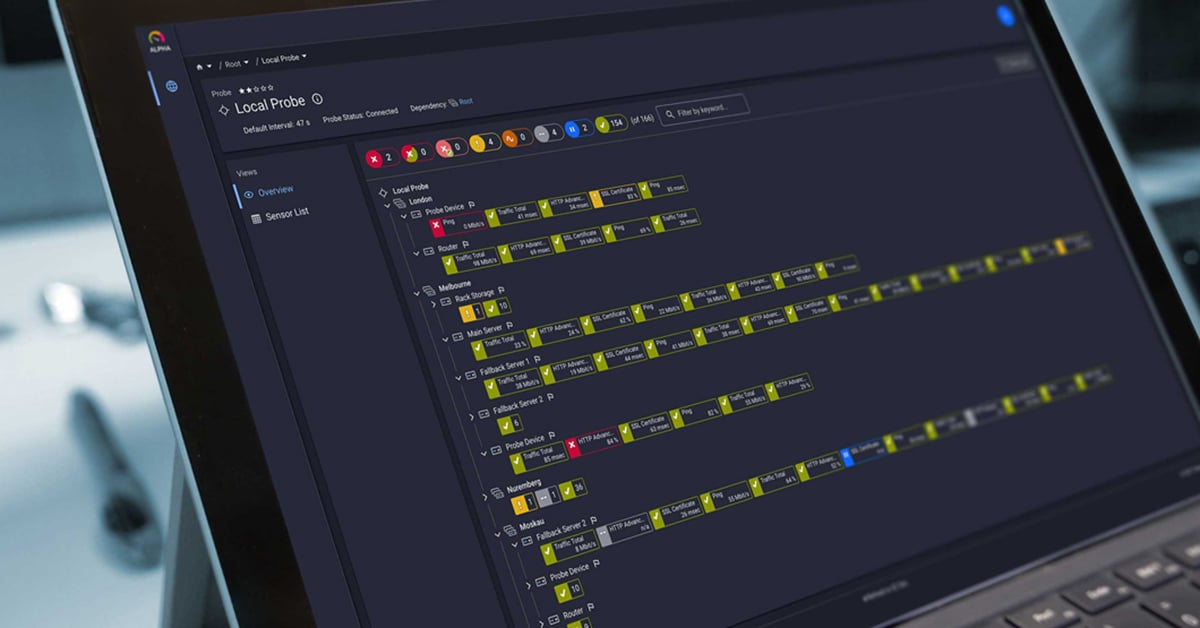

How PRTG Monitors Network Latency

PRTG Network Monitor provides automated, continuous latency monitoring across your entire network infrastructure. Instead of running manual ping tests, PRTG monitors all your QoS parameters 24/7.

Here's how it works:

Ping sensors continuously measure latency and packet loss to critical devices. You'll see real-time graphs showing latency trends over hours, days, or weeks.

Quality of Service (QoS) sensors track latency, jitter, packet loss, and Mean Opinion Score (MOS) for VoIP and video applications. If your video conferencing quality drops, you'll know immediately.

Flow monitoring (NetFlow, sFlow, jFlow) shows which applications and users consume bandwidth, helping you correlate latency spikes with traffic patterns.

Multi-vendor support means you can monitor Cisco, Juniper, HP, and other network hardware from a single dashboard, regardless of your network infrastructure complexity.

The key advantage? PRTG alerts you when latency exceeds your thresholds, so you can catch a developing problem early and fix it in a maintenance window or during off-peak hours, not during critical business operations.

Step 5: Establish Baseline Latency Metrics

You can't identify "high latency" without knowing what's normal for your network. Establishing baselines is critical.

How to Create a Baseline

Run latency tests at different times:

- Peak hours: When your network is busiest (usually 9 AM - 5 PM)

- Off-peak hours: Early morning or late evening

- Different days: Weekdays vs. weekends

Track these measurements for at least two weeks. Calculate:

- Average latency: Your typical performance

- 95th percentile: The latency value that 95% of measurements fall below

- Maximum latency: Your worst-case scenario

The 95th percentile matters more than the average. It shows what users experience during busy periods, not just ideal conditions.
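To see why the 95th percentile is more telling than the average, here is a short sketch that computes all three baseline figures. It uses the nearest-rank method for the percentile, which is one of several accepted definitions, and the sample values are made up for illustration.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of
    all samples at or below it."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def baseline(values):
    """Average, 95th percentile, and maximum of a list of RTTs in ms."""
    return {
        "avg_ms": sum(values) / len(values),
        "p95_ms": percentile(values, 95),
        "max_ms": max(values),
    }


# Illustrative RTT samples in ms, mostly steady with two slow outliers.
samples = [18, 19, 20, 20, 21, 21, 22, 22, 23, 24,
           19, 20, 21, 22, 20, 21, 23, 25, 48, 95]
print(baseline(samples))
```

In this made-up data set most samples sit near 20ms, yet the 95th percentile lands on one of the slow outliers. The average hides that, which is the behavior users notice during busy periods.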

Setting Alert Thresholds

Once you have baselines, set alerts at meaningful levels:

- Warning threshold: 150% of your baseline average

- Critical threshold: 200% of your baseline average or when latency exceeds application requirements

For example, if your baseline latency to a critical server is 20ms, set a warning at 30ms and critical at 40ms.
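The same arithmetic as a small sketch, in case you want to generate thresholds for many targets at once. The 1.5 and 2.0 factors mirror the 150% and 200% rules above. The optional app_limit_ms cap is one way to read "exceeds application requirements" and is an assumption of this example, as are the function names.

```python
def thresholds(baseline_avg_ms, warn_factor=1.5, crit_factor=2.0, app_limit_ms=None):
    """Return (warning_ms, critical_ms) derived from a baseline average."""
    warning = baseline_avg_ms * warn_factor
    critical = baseline_avg_ms * crit_factor
    if app_limit_ms is not None:
        # Never let the critical level exceed what the application tolerates.
        critical = min(critical, app_limit_ms)
        warning = min(warning, critical)
    return warning, critical


def classify(latency_ms, warning_ms, critical_ms):
    """Map a measured latency to 'ok', 'warning', or 'critical'."""
    if latency_ms >= critical_ms:
        return "critical"
    if latency_ms >= warning_ms:
        return "warning"
    return "ok"


warning, critical = thresholds(20)
for measured in (22, 31, 45):
    print(measured, classify(measured, warning, critical))
```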

Advanced Techniques for Latency Optimization

Once you're measuring latency consistently, you can optimize it.

Optimize Network Routing

Sometimes packets take inefficient paths. Use traceroute to identify unnecessary hops, then work with your ISP or reconfigure routing protocols (BGP, OSPF) to optimize paths.

Implement QoS Policies

Quality of Service (QoS) prioritizes critical traffic. Configure your routers and switches to prioritize:

- VoIP and video conferencing (real-time traffic)

- Business-critical applications

- Interactive sessions (SSH, RDP)

Lower-priority traffic like backups and software updates can tolerate higher latency.

Monitor Latency to Cloud Providers

If you're running workloads in AWS, Azure, or Google Cloud, monitor latency from your on-premises network to your cloud resources. PRTG's cloud monitoring capabilities let you track latency to virtual machines and cloud services alongside your on-premises infrastructure.

Use Content Delivery Networks (CDNs)

For web applications, CDNs cache content closer to users, reducing latency. Monitor latency to your CDN endpoints to ensure they're performing as expected.

Troubleshooting Common Latency Issues

High Latency to External Sites Only

Symptom: Internal network latency is fine, but external sites are slow.

Likely causes:

- ISP congestion or routing issues

- Insufficient internet bandwidth

- DNS resolution delays

Solution: Test latency to your ISP's first hop. If it's high, contact your provider. If it's normal, the issue is beyond your ISP. Consider changing DNS servers or implementing a CDN.

Latency Spikes During Specific Times

Symptom: Latency is normal most of the time but spikes during backup windows or peak hours.

Likely causes:

- Network congestion from backups or heavy usage

- CPU overload on routers or firewalls

- Bandwidth saturation

Solution: Use network traffic monitoring to identify what's consuming bandwidth during spike periods. Schedule backups during off-peak hours or implement QoS to prioritize interactive traffic.

High Latency Between Servers in the Same Data Center

Symptom: Two servers on the same network segment show unexpectedly high latency.

Likely causes:

- Switch or router misconfiguration

- Spanning Tree Protocol (STP) creating inefficient paths

- Faulty network interface cards (NICs)

Solution: Check switch port statistics for errors. Verify STP topology. Test with different network cables or switch ports to rule out hardware issues.

Intermittent Latency Increases

Symptom: Latency is unpredictable, with random spikes that don't correlate with traffic patterns.

Likely causes:

- Wi-Fi interference (if wireless)

- Faulty network hardware

- Routing flaps or instability

Solution: Use MTR or continuous monitoring to capture patterns over time. Check for hardware errors on switches and routers. For Wi-Fi, analyze channel utilization and interference.

Latency Increases with Packet Size

Symptom: Small ping packets show low latency, but larger packets show higher latency.

Likely causes:

- Bandwidth limitations

- MTU (Maximum Transmission Unit) mismatches causing fragmentation

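Solution: Test with different packet sizes using ping -l 1400 google.com (Windows) or ping -s 1400 google.com (Linux). If larger packets show proportionally higher latency, you're hitting bandwidth limits. To check for an MTU problem, send a full-size packet with fragmentation disabled: ping -f -l 1472 google.com (Windows) or ping -M do -s 1472 google.com (Linux). If that fails while smaller sizes succeed, a link on the path has an MTU below 1500 bytes.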
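To judge whether the extra delay on large packets is plausible for a bandwidth limit, compare it with the serialization delay, the time needed to put the packet's bits on the wire. A quick sketch, assuming a single bottleneck link and ignoring headers; the 10 Mbps link speed is only an example value.

```python
def serialization_delay_ms(packet_bytes, link_mbps):
    """Time to transmit one packet onto a link, in milliseconds."""
    bits = packet_bytes * 8
    return bits / (link_mbps * 1_000_000) * 1000


# Compare a default-size Windows ping payload with a 1400-byte one
# on a hypothetical 10 Mbps link.
for size in (32, 1400):
    print(f"{size} bytes: {serialization_delay_ms(size, 10):.4f} ms")
```

Because the echo reply carries the same payload back, expect roughly twice this difference in the round-trip time per slow link. A gap far larger than that suggests fragmentation or queuing, not raw bandwidth.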

Frequently Asked Questions

What is the best way to test network latency?

For quick troubleshooting, use ping to test latency to your router, internal servers, and external sites. For accurate application-level measurements, capture TCP SYN-ACK packets with Wireshark or tcpdump. For ongoing visibility, implement continuous monitoring with a tool like PRTG that tracks latency, packet loss, and jitter 24/7.

How do you measure latency between servers?

The most accurate method is to run a packet capture on one server and observe the time delta between TCP SYN and SYN-ACK packets. Alternatively, use ping or specialized tools like iperf to measure round-trip time. For production environments, set up continuous monitoring to track latency trends over time.

What's acceptable network latency?

It depends on your application. For general web browsing, under 100ms is acceptable. For VoIP and video conferencing, aim for under 150ms. Real-time gaming requires under 50ms. Internal network latency should typically be under 10ms. Establish baselines for your specific environment and set thresholds accordingly.

How often should I test network latency?

For troubleshooting, test continuously until you identify the issue. For proactive management, implement automated monitoring that checks latency every 60 seconds. Review trends weekly and investigate any deviations from your baseline.
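If you don't have a monitoring platform yet, a 60-second check can be approximated with a small polling loop. This is only a sketch: monitor is a made-up helper, the probe callable is whatever measurement you plug in (it should return an RTT in ms, or None for a lost probe), and treating a lost probe as critical is an assumption.

```python
import time


def monitor(probe, warning_ms, critical_ms, interval_s=60, iterations=None,
            sleep=time.sleep):
    """Call probe() every interval_s seconds and yield (latency_ms, status).

    Runs forever when iterations is None.
    """
    done = 0
    while iterations is None or done < iterations:
        latency = probe()
        if latency is None or latency >= critical_ms:
            status = "critical"
        elif latency >= warning_ms:
            status = "warning"
        else:
            status = "ok"
        yield latency, status
        done += 1
        if iterations is None or done < iterations:
            sleep(interval_s)


# Demo with canned readings and no real waiting.
readings = iter([20, 33, None])
for latency, status in monitor(lambda: next(readings), 30, 40,
                               iterations=3, sleep=lambda seconds: None):
    print(latency, status)
```

In real use you would drop the sleep override, pass your own probe function, and send the warning and critical results to whatever alerting channel you already have.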

Can high CPU usage cause network latency?

Yes. When routers, switches, or firewalls experience high CPU utilization, they can't process packets quickly, introducing latency. Monitor CPU and memory on your network devices alongside latency metrics to identify these correlations.

What's the difference between latency and bandwidth?

Latency is the time it takes for data to travel between points (measured in milliseconds). Bandwidth is the amount of data that can travel in a given time (measured in Mbps or Gbps). Think of latency as how long one car takes to drive the length of a highway and bandwidth as the number of lanes. Both affect performance, but in different ways.

How does jitter relate to latency?

Jitter is the variation in latency over time. If your latency is consistently 20ms, you have zero jitter. If it varies between 15ms and 45ms, you have high jitter. Jitter is particularly problematic for real-time applications because it causes inconsistent performance even when average latency is acceptable.

Tools and Resources for Network Latency Monitoring

Command-Line Tools (Free)

- Ping: Built into all operating systems

- Traceroute/tracert: Built into all operating systems

- MTR: Available for Linux and macOS (install via package manager)

- iperf3: Cross-platform bandwidth and latency testing

- Wireshark: Advanced packet analysis (free, open-source)

Network Monitoring Platforms

PRTG Network Monitor provides comprehensive latency monitoring with:

- Automated ping sensors for continuous RTT measurement

- QoS monitoring for VoIP and video applications

- Flow monitoring to correlate latency with traffic patterns

- Customizable alerts and dashboards

- Support for hybrid environments (on-premises and cloud)

Learn more about PRTG's latency monitoring capabilities

When to Use Each Tool

Use ping when: You need a quick latency check or want to verify connectivity.

Use traceroute when: You need to identify where latency is occurring along the network path.

Use MTR when: You're troubleshooting intermittent latency issues that don't appear in single tests.

Use packet capture when: You need the most accurate application-level latency measurements.

Use monitoring platforms when: You need continuous visibility, historical trends, and proactive alerts across your entire network infrastructure.

Next Steps: From Measurement to Management

Now you know how to measure network latency using multiple methods. Here's your action plan:

Immediate actions (today):

- Run ping tests to your router, key servers, and external sites to establish current latency

- Use traceroute to map your network paths and identify potential bottlenecks

- Document your findings as your initial baseline

This week:

- Set up continuous latency monitoring for critical network paths

- Configure alerts for latency thresholds based on your application requirements

- Review latency trends to identify patterns

Ongoing:

- Monitor latency trends weekly and investigate anomalies

- Update baselines quarterly as your network evolves

- Correlate latency with other metrics (bandwidth, CPU, packet loss) to identify root causes

The difference between reactive and proactive network management is continuous measurement. Don't wait for users to complain about slow performance. Start monitoring latency now, and you'll catch issues before they impact your business.

Ready to implement automated latency monitoring? Explore PRTG's network monitoring capabilities and see how continuous visibility transforms network management.

Published by
