Here we go again. Your database is slow, users are experiencing lag, and your application is crawling. Sound familiar? Performance issues tend to creep up on even the best-tuned databases. Slow response times are usually the first indicator that something's not quite right, which puts you in on the back foot straight away.

Here's the good news - database performance tuning doesn't require a radical transformation. In fact, most issues actually boil down to a few common culprits: slow queries, missing indexes, insufficient caching, or simply not monitoring the right things. The principles of performance tuning and optimization apply equally to MySQL, PostgreSQL, SQL Server, and Oracle - it's all the same principle. Let's explore practical steps for making your database queries faster and keeping it humming along at all times.

Diagnosing Database Performance Problems

The first step to optimizing anything is understanding where the problem areas are. Performance issues with databases are most often related to slow queries, CPU usage, memory limitations, or disk I/O bottlenecks. Identifying and understanding these performance metrics is essential for database tuning to even be possible in the first place. After all, each of these bottlenecks has a direct impact on response time.

Slow queries are the performance problem that is most visible to end users. Queries that take seconds instead of milliseconds to complete make for a sluggish application. Optimizing these queries is the single most impactful thing you can do to improve the speed and scalability of your database. Queries with missing indexes, bad joins, or large amounts of data fetches tend to suffer from poor data access patterns that affect performance and speed. Memory limitations, on the other hand, are generally observed as an inability for the database to cache in the buffer pool data that is frequently accessed leading to repeated disk reads.

The key here is to know which of the above bottlenecks you're dealing with before you start making changes. Real-time database monitoring provides a dashboard with performance metrics relating to query execution times, CPU utilization, memory, and disk activity. With this information at your fingertips, troubleshooting performance problems becomes way easier. Armed with bottleneck knowledge, you can then target query optimization techniques and specific measures that will affect database performance optimization.

Query Optimization: Write Smarter SQL

Writing better SQL is the fastest way to accelerate your databases. Every DBMS has a way to find out which queries are taking too long to run – use them! Once you've identified the queries that are potential bottlenecks, you can work on improving them.

First up, try not to retrieve more data than you need. It can be tempting to SELECT * FROM somebigtable and sort out the rows you want later in the application, but this almost never scales well. Be as specific as possible about the columns and rows you need, and if you only need 20 rows, LIMIT the query to 20 instead of making the DBMS retrieve 10,000 and then throw most of them away.

Almost all DBMSs can provide an "execution plan" for a query, telling you how it is actually processed by the database. This is often the easiest way to optimize queries. If it shows that a table is being scanned for only a few rows, add an index – it should make a noticeable difference.

Nested queries often look very impressive but they can be horrible for performance. For instance, a query that runs sub-queries for each row of your result set will typically be very slow. Aim for simple queries, and where possible. Finally, whenever you try an optimization, test it! Run the query a few times before making changes and a few times after, and check the execution time. Sometimes a change you make will actually make things worse…

The good thing about optimizing queries is that small changes often make a big difference. A poorly written query that takes 30 seconds to run should often be able to run in less than 1 second after some optimization.

Indexing: The Intelligent Way to Optimize Data Access Patterns

Indexes are a lot like the index at the back of a book. If a book doesn't have one, you have to start at page 1 and flip through until you find the information you want. If it does, you jump directly to the appropriate page. Database indexes are similar. By providing an alternative path to the data you want, they allow the database engine to avoid scanning all rows of a table or view. The tradeoff is that indexes consume storage space and can degrade write performance. Used wisely, indexes are an essential part of any performant database. Used unwisely, they can cause your database to bloat and become a bottleneck in your application.

Here are a few key takeaways about indexing:

Index the columns you query most frequently. This may seem obvious, but if you filter, sort or join against certain columns more than others, those are good candidates for indexing. Most database profiling tools will tell you which queries are executed most frequently.

Don't over-index. It's tempting to want to index everything, but more indexes means more disk space used and more overhead on insert and update operations. Index only the queries that are most important for your users.

Think about the bigger pciture. Indexing a column with few possible values is often a waste of time (a Boolean column, for example). The database will still be required to scan a large percentage of your data.

Revisit your indexes regularly. The way your application accesses data changes as your codebase grows and changes. An index you built two years ago may now serve no purpose other than wasting space.

The great thing about smart indexing is that it's also the lowest-hanging fruit when it comes to performance optimization.

Intelligent Caching

Caching stores frequently accessed data in memory, reducing the number of hits your database server receives. Rather than querying the database every time, your application first checks the cache. Caching has the added benefit of improving response times and overall scalability. You can use this effectively for any data that doesn't change often, which can improve high concurrency situations.

Redis can be used to create a cache layer in front of your database. It's blazingly fast, works with many data structures, and can handle high-performance database workloads, even with excellent concurrency. You can use this to cache entire query results, individual rows, or even computed aggregations. Caching can significantly reduce database load for read-heavy applications.

The buffer pool in your database is effectively a caching layer that holds frequently accessed data pages in memory. Optimizing buffer pool size is one of the fastest ways to improve database efficiency. Ensure the database server has enough memory available for the buffer pool to handle your average workload and dataset size.

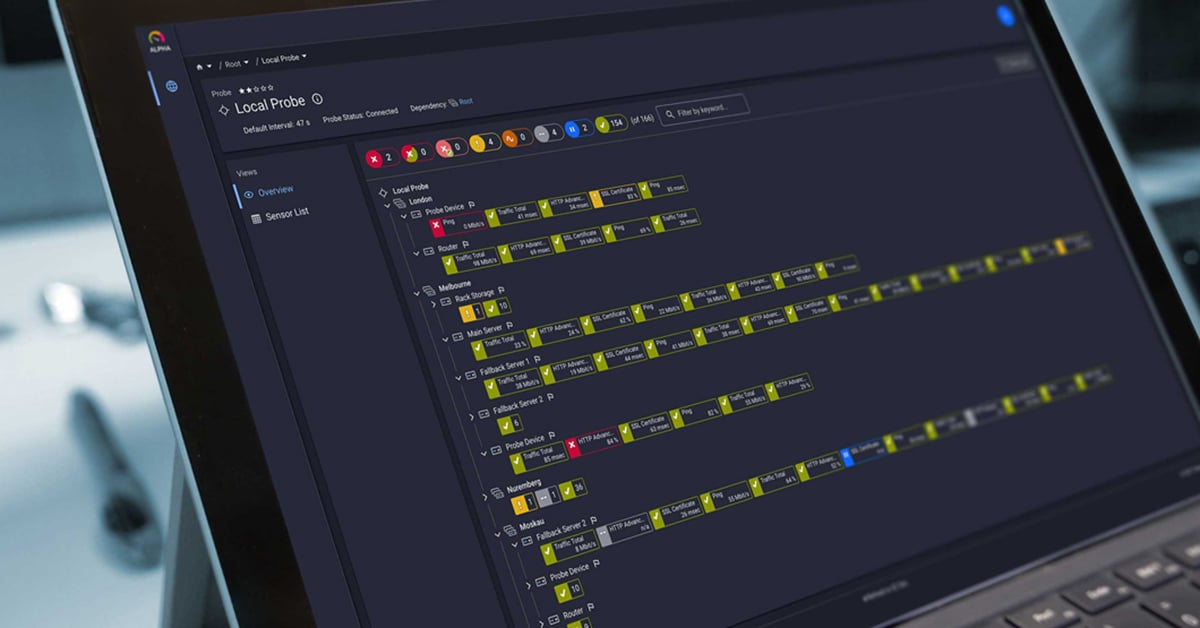

Database Monitoring with PRTG

You can't optimize what you can't see. Database monitoring shines a light on exactly where performance problems reside. PRTG Network Monitor has specialized sensors for all major database platforms to let you monitor query execution times, connection counts, CPU load, and memory usage in real time.

MySQL v2 Sensor: This sensor can monitor MySQL database systems and allows the execution of custom SQL queries. Use it to track query execution times, connection counts, and any database-specific metrics you care about. Perfect for pinpointing slow queries and tracking the effects of your optimization efforts. Use the MySQL v2 Sensor to automate monitoring of specific tables, functions, and stored procedures.

PostgreSQL Sensor: As the name suggests, this sensor is optimized for use with PostgreSQL databases. The PostgreSQL Sensor allows you to execute custom SQL queries to track table sizes, index usage, cache hit ratios, and transaction rates. Identify performance degradation before it impacts users and analyze VARCHAR column usage and schema design choices.

Oracle SQL v2 Sensor: Optimized for Oracle databases, this sensor also supports the execution of custom SQL queries. Monitor tablespace usage, session counts, and query performance across large data sets. Compatible with Oracle versions 10.2 and higher, the Oracle SQL v2 Sensor can also help you spot database partitioning opportunities.

ADO SQL v2 Sensor: The all-in-one database monitoring solution, the ADO SQL v2 sensor uses ODBC or OLE DB to connect to any database. Perfect for mixed database environments where you're running Microsoft SQL Server, MySQL, and more.

Best of all, these sensors can become part of your optimization workflow. Make a change to a database index or tune some queries, then watch the real-time metrics to see if your performance actually improved. Automate alerts for specific thresholds and keep a historical record of your optimization efforts over time. No more guesswork around whether your efforts to optimize are having a positive effect or not.

Extend Your Monitoring with PRTG Database Observer

If your organization runs enterprise databases like SAP HANA, SAP MaxDB, or IBM DB2, PRTG Database Observer extends the reach of your monitoring even further.

PRTG Database Observer is an extension for PRTG, and comes preconfigured with SQL queries specifically for these enterprise systems. Monitor SAP HANA memory consumption, track IBM DB2 buffer pool utilization, or keep an eye on SAP MaxDB transaction logs without having to write complex SQL queries from scratch. If the prebuilt queries aren't quite what you're looking for, you can create and test custom SQL queries specific to your monitoring needs and schema design choices.

This can be especially helpful for troubleshooting performance issues in production SAP systems. Rather than reacting to complaints about sluggish response and poor user experience, you'll see memory pressure increasing or transaction log growth heading in the wrong direction, giving you time to react before things get out of hand. Monitor CPU usage and assess whether sharding or partitioning could be a good solution. Analyze your schema design and determine how normalized your database is.

Real-World Use Cases

Let's now consider a few practical database performance scenarios.

Your online shop application experiences a slowdown during peak hours. You open your PRTG dashboard and notice a spike in MySQL query execution times. The MySQL v2 sensor pinpoints a particular SQL query against the orders table that is taking 8 seconds to complete, where it used to take 200 milliseconds. You pull up the execution plan and identify the culprit is missing composite index that spans order_date and customer_email. You add the index to optimize the data lookup, and within a few minutes, your sensor shows that query times have returned to normal with a corresponding improvement in response times.

Another scenario: a financial services firm runs a PostgreSQL data warehouse with millions of transaction records, which is a significant amount of data. Aggregation jobs that used to complete in an hour now take three hours to run. The PRTG PostgreSQL sensor reveals increasing memory usage and declining cache hit ratios. The solution here involves partitioning the transactions table by month and increasing the buffer pool allocation to better accommodate the increased workload. The sensor confirms that aggregation jobs are again completing in less than an hour with greatly improved scalability.

FAQ

How can I tell if my database needs more memory or simply better queries?

Look at your buffer pool hit ratio. A value below 90% means the database is always reading from disk instead of serving data from memory. This indicates your database server requires more RAM. But if the hit ratio is high and queries are still slow, the problem is probably inefficient SQL statements or missing indexes affecting data access patterns.

Do I need to optimize for read performance or write performance?

It depends on your workload and use cases. Most applications are read-heavy, so read optimization makes more sense: more indexes, caching, schema denormalization. For write-heavy workloads, fewer indexes and batch inserts reduce the overhead of write operations. Understand read-to-write ratio, concurrency requirements before deciding on optimizations.

What's the difference between database-level caching and application-level caching?

Database-level caching occurs automatically through the buffer pool and managed by the database engine. Application-level caching using something like Redis gives you more control over what is cached and for how long. Most high-performance systems use both layers for maximum efficiency.

Database Optimization Made Easy

It's not rocket science, but database optimization does take attention to detail and an organized approach. Identify your bottlenecks via real-time monitoring and address each one, in turn, by applying the standard optimization techniques. Whether you're suffering from slow queries, excessive CPU utilization, or memory pressure, the combination of smart indexing, query optimization, effective caching, and the right partitioning and/or sharding strategies will get you 90% of the way. And, of course: you can't optimize database performance (or individual queries) if you can't measure and automate your monitoring.

Want to see how PRTG can help you keep your databases humming along? Start your free 30-day trial and enjoy full access to all database sensors, with no limitations.

Published by

Published by