Looking back on the last decade of Information Technology, it seems that, in a lot of ways, the 2010s were a period of consolidation and of delivering on all the flashy and exciting new tech promised in the early 2000s. Technology that was either far-fetched or ridiculously expensive (and in many cases, both) became more accessible and affordable in the 2010s. Faster processing speeds, dramatic increases in network speeds, and more affordable mini-computers brought about all-encompassing changes in the IT world. And, of course, all these changes drastically altered the daily life of system administrators. So let's take a look at some of them!

Bandwidth speeds open the doors to everything else

The factor that had one of the biggest impacts – if not the biggest impact – was increasing bandwidth speeds. Almost everything else on this list was either boosted, made accessible, or even made possible by increasing data communication speeds. And the increase was profound!

The average fixed broadband download speed in the United States at the beginning of 2010 was just 7 Mbps. Now, at the turn of the decade, the average US Internet broadband download speed is hovering around 124 Mbps.

This increase in speed has made so many technologies of the past decade possible. Cloud computing wouldn’t make sense if data couldn’t be transmitted to and from remote servers fast enough. The IoT couldn’t have crossed into consumer households if Internet connections were slow. If you’re accessing web services online, you need the reaction times to be as fast as if you were running the application locally. It’s safe to say that faster and more affordable bandwidth has brought about massive changes in the system administrator’s day-to-day life through the technology it has enabled.

Up in the cloud

While cloud computing adoption truly began in the mid-2000s when Amazon launched Amazon Web Services, it was only in the next decade that it matured. Back in 2010, there was a lot of hype around cloud, and it was still unclear just how to best make use of what it offered. As the years progressed, the picture slowly became clearer and we saw more and more companies moving at least parts of their infrastructure into the cloud.

What this effectively meant for IT professionals was that they were managing less physical infrastructure on-premises and more services in the cloud. By the mid-2010s, even data warehouses were being hosted in the cloud (using services like AWS’s Redshift). Instead of having to set up hardware, install software and wire cables, administrators had to understand concepts like online instances, elastic storage, and other cloud-specific technology.

If you’d believed the hype back around 2013, you could not be blamed for thinking that in a few short years, just about everything would be in the cloud. However, as cloud computing entered the Slope of Enlightenment on Gartner’s Hype Cycle in the latter part of the decade, the reality is that IT professionals are now increasingly faced with hybrid environments that incorporate everything from physical hardware to cloud services and containers. Getting all of these elements integrated is a challenge in itself.

The IoT changes IT infrastructure

Another technology that went from a fantastical concept to reality in the last decade is the Internet of Things. While you might argue that it is still very firmly in the “hype” phase, it has already shown its value in everything from smart cities through to Industry 4.0.

The IoT’s emergence in this decade has been due to the convergence of various factors. Communication protocols like MQTT (which became an ISO standard in 2016) were easy to use and effective at connecting devices. Meanwhile, hardware technology allowed for more processing power on smaller chips, and better battery usage. Finally, the rise of microcomputers began when the first Raspberry Pi was released in 2012, and this made it easier to apply logic and control to smart devices.

What this has meant for many system administrators is that they now have a whole set of new devices and protocols to look after on top of the traditional IT elements in their environments. Think of companies who have sensors monitoring temperature and humidity, or sensors that monitor the location of a fleet of vehicles using geo-location trackers. These devices, their implementation and integration into existing environments, and their protocols have all introduced a new dimension for administrators to understand. And that is not even mentioning the security worries that IoT devices bring, too.

Security becomes a big deal

Speaking of security, in this decade security issues became a large part of any system administrator’s work day. Although malware and other threats had been around since the 90s, their prevalence had been on the rise. In 2007, it was estimated that there were 5 million new malware samples produced every year. By the middle of the 2010s, that figure was at 500,000 new malware samples every day.

This meant that system administrators were spending more time than ever on hardening systems, securing data, educating users, and updating firmware and software versions to make sure the latest security updates were included.

This was also the decade that saw some big security issues that had administrators spending days and even weeks fixing up their environments: Heartbleed, the Meltdown and Spectre vulnerabilities, and many others added to the daily to-do lists of system administrators.

Virtualization and SaaS change the admin’s daily job

In the first decade of the 21st Century, virtualization hit its stride as it changed the lives of admins: virtual machines could be used as workstations, training environments, test environments…anything. This continued well into the 2010s, but what changed in this decade was where the virtual systems were hosted. Before, virtual systems were run on on-premises hardware; now they could be hosted in the cloud. That meant even less hardware and software for admins to worry about.

But it was not just virtualization that changed the way system administrators worked with software and hardware. The rise of Software as a Service (SaaS) meant that many traditional administration tasks became redundant. Take Office 365, which launched in 2011, as an example. Before, each workstation had to have a version of Microsoft Office installed on it; now, with Office 365, the software was hosted elsewhere, and all users needed was a good browser and an Internet connection. In this decade, almost every bit of software, from CRM systems to asset management systems, was hosted remotely and provided as a service. This also meant a change from the traditional licensing model to a subscription model for paying for the software.

Mobile devices are the new norm

After the unprecedented success of the first iPhone in 2007 and the launch of the iPad in 2010, mobile devices were ubiquitous by 2015. This mobile revolution had a big impact on the system administrator. Many had to deal with these devices on their corporate networks as the BYOD (Bring Your Own Device) trend exploded and, as many commentators have observed, this brought about its own bandwidth and security issues.

The end of the mobile admin

In the "old days" of system administration, IT professionals had to be all over the place. If a computer was slow, they'd have to go to that computer and take a look. If they needed to install an update at a different site, the admin would have to physically travel there to do the installation (read about this and other IT support quirks from the past in this blog post by my colleague, Sascha). But nowadays, that's changed. Most work can be done using a remote connection to the computer (even if it's in the same building). And because much of the software that users need these days is provided by the cloud or external servers, admins don't even need to install much software anymore.

The result is that, whereas before system administrators would be all over the place, now they can do most of their work from their own workstations. Remote sessions have essentially replaced the mobile admin :)

Infrastructure monitoring has changed but is still relevant

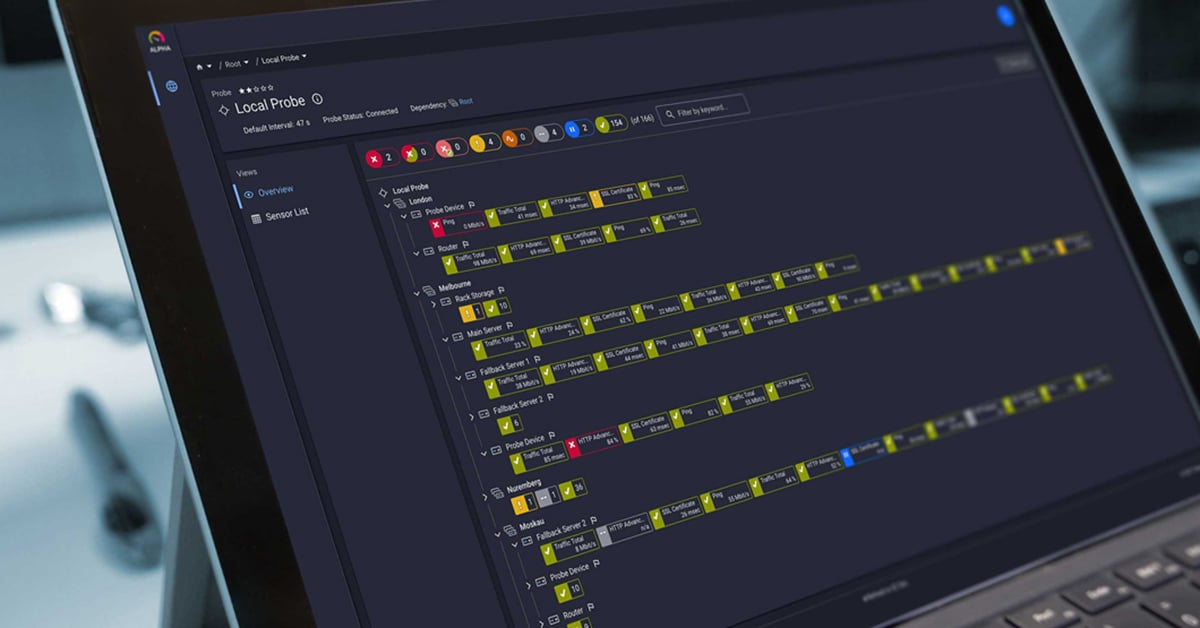

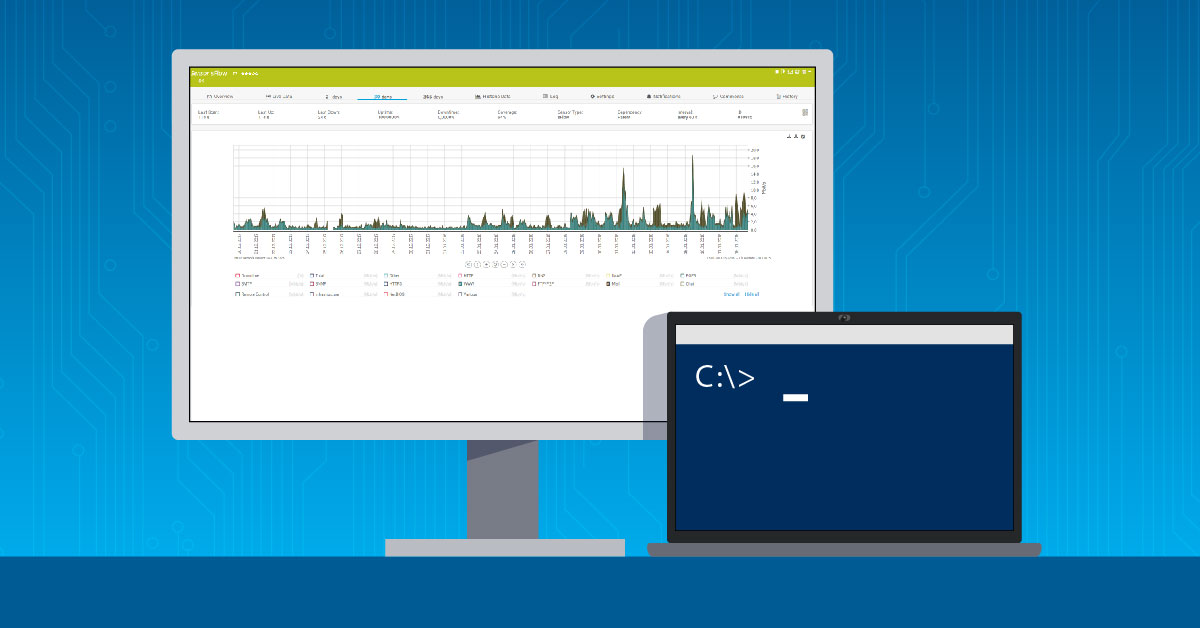

There have been many changes throughout the last decade, but one thing has remained constant: the need for infrastructure monitoring. In fact, if anything, it has become more important. But all of the other changes have also brought new challenges to monitoring modern IT.

As mentioned above, many environments are now hybrid and feature a combination of on-premises infrastructure and off-site cloud services. There are also many disparate types of hardware and protocols in the same environment: you now have a combination of "traditional" IT devices (like routers, workstations, servers, switches, and so on) and newer devices like IoT devices or the specialized machines we see on industrial floors or in healthcare IT, all in the same infrastructure. The challenge is to monitor all of these different services, hardware, and protocols in one place.

And of course I'd be remiss if I didn't bring up our own PRTG Network Monitor, which has been going strong not just for this decade, but the previous one too.

How did your daily life as an admin change in the 2010s? What changes did you feel the most? Let us know in the comments below!

Published by
