Your monitoring dashboard pings. 2 PM Tuesday. Three different departments can't access the file server.

By the time you load your network map, half of them have already created tickets. The rest are gathered around your desk. Everyone wants to know the same thing: when will it be fixed?

If you've been a network administrator for more than five minutes, you know this scenario all too well. The hard part isn't fixing common failures like a dead switch or a disconnected cable. It's troubleshooting the intermittent issues, the slow performance degradation that takes weeks to detect, and the network problems that only happen under very specific circumstances nobody thought to document.

Systematic troubleshooting and good data can help you handle all of these.

Why Network Troubleshooting Can Be Hard

The easy problems announce themselves. A switch fails and an entire LAN segment goes offline. You swap it out and go to the next problem.

The hard problems are stealthy: packet loss during business hours, latency spikes on traffic to specific applications, DNS timeouts that seem to occur at random. Troubleshooting issues like these requires historical data, traffic analysis, and correlation across many systems.

Intermittent failures can't be reproduced on demand. Packet captures and alerts only happen after the fact. Without continuous monitoring and historical baselines for comparison, you're troubleshooting in the dark.

Asymmetric routing can cause traffic to work in one direction but fail in the other, or to take different paths with different performance characteristics. Your basic ping tests succeed but applications time out.

Bandwidth and performance problems first show up as degraded application performance, higher latency, and packet loss under load. But identifying which traffic is consuming capacity, and whether that usage is legitimate or caused by malware or other bad actors, is difficult.

Configuration drift occurs slowly. Over months and years your actual network configurations diverge from your network diagram, so you end up troubleshooting a network that no longer matches its documentation.

Quick Reference: Symptoms and Likely Causes

| Symptom | Likely Cause | First Diagnostic Step |
|---|---|---|
| Intermittent connectivity drops | Wi-Fi interference, failing Ethernet hardware, or spanning tree reconvergence | Check interface errors and event logs for pattern timing |
| High latency but no packet loss | CPU overload on network devices or congested links | Review device CPU metrics and interface utilization |
| Packet loss during peak hours | Bandwidth saturation or buffer overflow | Analyze traffic flows to identify top consumers |
| Connectivity works by IP address but fails by hostname | DNS server failure, incorrect client DNS settings, or cache poisoning | Test with nslookup against primary and secondary DNS servers |
| One direction works, other doesn't | Asymmetric routing or stateful firewall issues | Run traceroute in both directions and compare paths |
| Slow performance for specific applications | QoS misconfiguration, application server issues, or port blocking | Test application ports specifically and check QoS policies |
| Everything worked until recent change | Configuration error or incompatible firmware | Review change logs and consider rollback |
| No valid IP address assigned | DHCP server failure, IP address pool exhaustion, or DHCP relay issues | Check DHCP server logs and verify scope availability |

A Workflow for Systematic Network Troubleshooting

Approach every problem methodically with these troubleshooting steps:

Step 1: Define scope. Is the issue affecting a single user, a single subnet, or the entire network? Understanding scope tells you where to focus your investigation.

Step 2: Establish baseline comparison. What does the affected network connection or service look like when it's working? Compare current latency, throughput, and error rates against known-good baselines.

Step 3: Test at each network layer. Start with physical connectivity (ethernet cables, ports), then data link, network, and transport layers following the OSI model. Can you ping by IP address? Does DNS resolve? Do specific TCP application ports respond? Successful tests eliminate potential causes.

Step 4: Identify what changed. The root cause of most common problems can be traced directly to recent changes. Check your change management logs for recent updates or configuration modifications.

Step 5: Analyze traffic patterns. Use flow data to see what's running on the network. High bandwidth usage from unexpected sources, unusual protocols, malware traffic patterns, or traffic to unfamiliar destinations all indicate root causes worth investigating.

Step 6: Check dependencies. A failing DNS server will affect web browsing. DHCP server downtime will prevent new network connections. Map the dependency chain to isolate where the problem originates versus where symptoms appear.

Step 7: Correlate timing. When did the problem first occur? Do problem start times or durations correlate with other events, such as high CPU on network devices, high link utilization, or traffic spikes?
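Parts of Step 3 can be scripted. The following is a minimal Python sketch using only the standard library, not a complete diagnostic tool. The function names and the wording of the suggestions are illustrative assumptions, and the `ip_reachable` input is assumed to come from a separate ping test.

```python
import socket


def resolves(hostname: str) -> bool:
    """Return True if the system resolver can resolve the hostname."""
    try:
        socket.getaddrinfo(hostname, None)
        return True
    except socket.gaierror:
        return False


def tcp_port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def next_layer_to_check(ip_reachable: bool, dns_ok: bool, port_ok: bool) -> str:
    """Map layered test results to the area worth investigating next."""
    if not ip_reachable:
        return "physical/data link/network: check cabling, ports, VLANs, routing"
    if not dns_ok:
        return "name resolution: check DNS servers and client DNS settings"
    if not port_ok:
        return "transport/application: check firewall rules and the service itself"
    return "all layered tests passed: compare against baselines and recent changes"
```

For a file server you might call `next_layer_to_check(True, resolves("fileserver.example.com"), tcp_port_open("fileserver.example.com", 445))`, where the hostname is a placeholder. Each passing test rules out the layers below it.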

Building Better Diagnostic Capabilities

Capture historical performance data for key network paths, interface utilization, error rates, and latency. Without historical context you don't know when your monitoring metrics indicate a problem versus expected variance.
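One simple way to separate a problem from expected variance is to compare the current reading with the mean and standard deviation of past readings. The sketch below assumes you can export historical samples as a list of numbers. The three-sigma threshold is an arbitrary starting point, not a recommendation from any particular tool.

```python
from statistics import mean, stdev


def deviates_from_baseline(history, current, threshold=3.0):
    """Return True if current is more than `threshold` standard deviations from the historical mean."""
    if len(history) < 2:
        raise ValueError("need at least two historical samples")
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # A perfectly flat history: any change at all is a deviation.
        return current != mu
    return abs(current - mu) / sigma > threshold


# Hypothetical latency samples in milliseconds for one network path.
latency_history_ms = [20, 22, 19, 21, 20, 23, 21]
print(deviates_from_baseline(latency_history_ms, 22))  # within normal variance
print(deviates_from_baseline(latency_history_ms, 60))  # far outside the baseline
```

In practice you would keep separate baselines for different times of day, since traffic at 2 PM on a Tuesday rarely looks like traffic at 2 AM on a Sunday.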

Use flow data for better visibility. NetFlow, sFlow, and IPFIX give you detailed traffic analysis showing exactly what's using bandwidth: which applications, users, and destinations. PRTG users can take advantage of flow sensors to classify network traffic by protocol, source, and destination. During performance problems you can quickly determine whether a compromised system is generating malware traffic or a legitimate application is consuming unexpected bandwidth.
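If you export flow records for offline analysis, finding the top bandwidth consumers is a small aggregation. This sketch assumes each record is a dictionary with hypothetical `src`, `dst`, and `bytes` keys. Real NetFlow, sFlow, or IPFIX exports carry many more fields and use different names.

```python
from collections import Counter


def top_talkers(flows, n=3):
    """Sum bytes per source address and return the n largest as (src, bytes) pairs."""
    totals = Counter()
    for flow in flows:
        totals[flow["src"]] += flow["bytes"]
    return totals.most_common(n)


# Hypothetical flow records; addresses are examples only.
flows = [
    {"src": "10.0.0.5", "dst": "10.0.1.10", "bytes": 5000},
    {"src": "10.0.0.7", "dst": "10.0.1.10", "bytes": 1200},
    {"src": "10.0.0.5", "dst": "203.0.113.20", "bytes": 3000},
    {"src": "10.0.0.9", "dst": "10.0.1.10", "bytes": 700},
]
for src, total in top_talkers(flows, n=2):
    print(src, total)
```

Grouping by destination or protocol instead of source is the same pattern with a different key.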

Track interface errors. Use SNMP to monitor CRC errors, discards, and collisions. Rising error rates indicate failing hardware or a configuration issue that needs attention before it causes downtime.
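Interface counters only ever increase, so what matters is the change between two polls. The sketch below shows one way to turn two readings into an error ratio. It assumes 32-bit counters that may wrap once between polls, and it assumes the packet counter you pass in counts all received packets. How you fetch the values over SNMP is left out.

```python
COUNTER32_MAX = 2**32


def counter_delta(previous, current, modulus=COUNTER32_MAX):
    """Difference between two counter readings, allowing for one wrap-around."""
    return (current - previous) % modulus


def error_ratio(prev_errors, cur_errors, prev_packets, cur_packets):
    """Fraction of packets in the polling interval that were counted as errors."""
    packets = counter_delta(prev_packets, cur_packets)
    if packets == 0:
        return 0.0
    return counter_delta(prev_errors, cur_errors) / packets
```

A ratio that climbs from poll to poll is the signal to look for. A large lifetime error total on a port that has been up for years says very little on its own.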

Correlate events across systems. That latency spike on the WAN link might correlate with high CPU on your edge router or an issue at your ISP. The packet loss on VLAN 50 started when someone modified firewall rules. These connections between systems aren't obvious unless you have all the monitoring data consolidated.
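Once events from different systems sit in one place, correlation can start as a simple time-window query. The sketch below assumes each event is a dictionary with a `time` (a `datetime`) plus hypothetical `source` and `message` keys. The five-minute window is an arbitrary default.

```python
from datetime import datetime, timedelta


def events_near(events, anchor_time, window=timedelta(minutes=5)):
    """Return events within `window` of anchor_time, oldest first."""
    nearby = [e for e in events if abs(e["time"] - anchor_time) <= window]
    return sorted(nearby, key=lambda e: e["time"])


# Hypothetical consolidated event log.
spike = datetime(2024, 1, 2, 14, 0)
events = [
    {"time": spike + timedelta(minutes=10), "source": "switch", "message": "port flap"},
    {"time": spike - timedelta(minutes=2), "source": "edge-router", "message": "CPU 95%"},
    {"time": spike + timedelta(minutes=1), "source": "firewall", "message": "rule change"},
]
for event in events_near(events, spike):
    print(event["time"], event["source"], event["message"])
```

Sorting oldest first puts the most likely trigger at the top of the list.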

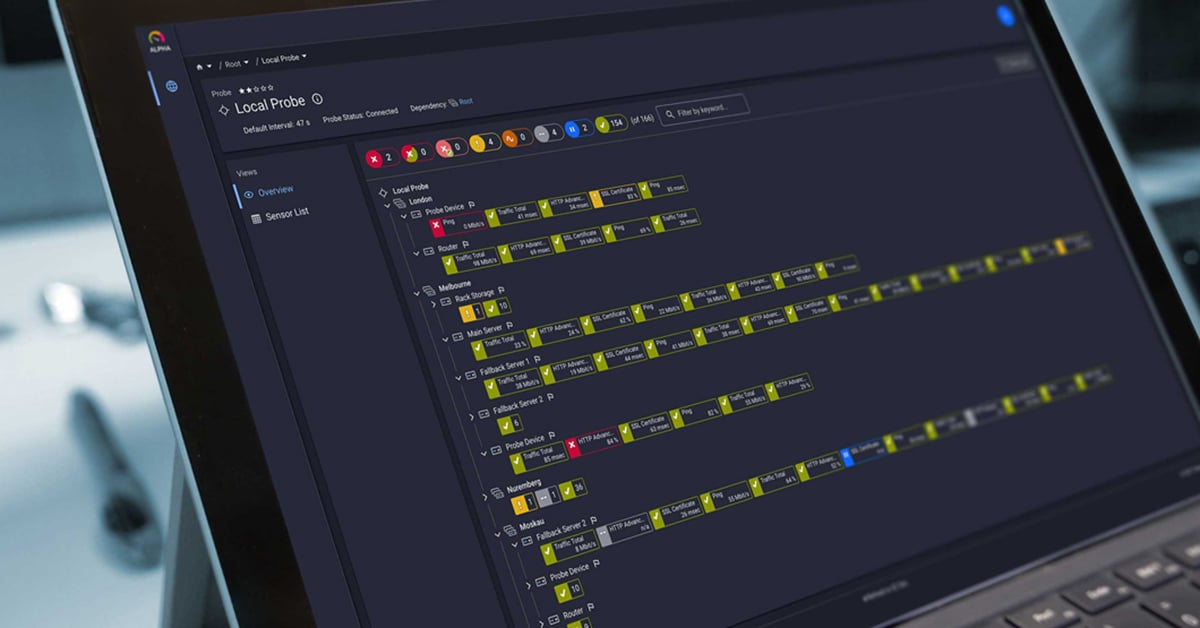

Document dependency relationships between systems. PRTG's dependency features suppress child alerts when parent devices are down to cut through the noise during outages.

Tackling Specific Scenarios

Intermittent connectivity issues require continuous monitoring. Set up QoS sensors that monitor latency, jitter, and packet loss. Historical graphs show whether issues occur at specific times of day, gradually get worse, or correlate with other events.
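To make these three metrics concrete, here is a rough sketch that derives them from a series of round-trip-time probes. It assumes a lost probe is recorded as `None`, and it computes jitter as the mean absolute difference between consecutive replies, which is a simplification of what dedicated QoS measurements do.

```python
def summarize_probes(rtts_ms):
    """Summarize RTT samples in milliseconds; None marks a probe that got no reply."""
    replies = [r for r in rtts_ms if r is not None]
    loss_pct = 100.0 * (len(rtts_ms) - len(replies)) / len(rtts_ms) if rtts_ms else 0.0
    avg = sum(replies) / len(replies) if replies else None
    # Jitter here: mean absolute change between consecutive replies.
    diffs = [abs(b - a) for a, b in zip(replies, replies[1:])]
    jitter = sum(diffs) / len(diffs) if diffs else 0.0
    return {"loss_pct": loss_pct, "avg_ms": avg, "jitter_ms": jitter}


print(summarize_probes([10, 12, None, 11, 15]))
```

Storing one such summary per interval is what makes it possible to graph the values later and spot time-of-day patterns.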

Bandwidth and performance problems require traffic classification. Flow analysis shows which applications are consuming bandwidth, letting you quickly distinguish between expected usage growth and a problem like malware or backup jobs running during business hours. PRTG users, flow sensors (NetFlow, sFlow, IPFIX) can break down traffic by application, protocol, source, and destination.

DNS problems masquerade as general network failures. But if you can ping by IP address while DNS queries time out, you've significantly narrowed the scope. Monitor DNS response times continuously.
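A rough way to sample DNS response time from a client is to time a lookup. This sketch uses the operating system's resolver through `socket.getaddrinfo`, so results can be served from a local cache and it does not target a specific DNS server. The `resolver` parameter is an assumption added here so the function can be exercised without network access.

```python
import socket
import time


def dns_lookup_ms(hostname, resolver=socket.getaddrinfo):
    """Time one lookup; return milliseconds, or None if resolution fails."""
    start = time.perf_counter()
    try:
        resolver(hostname, None)
    except OSError:  # socket.gaierror is a subclass of OSError
        return None
    return (time.perf_counter() - start) * 1000.0
```

Calling `dns_lookup_ms("intranet.example.com")` on a schedule and storing the results gives you a trend line. A `None` while ping by IP address still works points at name resolution rather than connectivity.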

VPN and remote access problems involve multiple potential failure points. When remote users complain about not connecting, check systematically: can they authenticate? Do they receive a valid IP address? Can they ping internal resources? Does DNS resolve internal names?

Using Network Troubleshooting Tools and Command Line Diagnostics

Basic network troubleshooting tools available at the command prompt include ipconfig (Windows) to verify IP address configuration, ping to test basic connectivity, traceroute (tracert on Windows) to map network paths, and nslookup for DNS queries. For deeper analysis at the protocol level, tcpdump (Linux) and similar packet capture tools are also available. These command line utilities are essential first steps.
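If you run the same first checks on every ticket, a small wrapper can pick the right commands for the platform. This sketch only builds the command lines. The non-Windows branch assumes a Linux host with `ip`, `traceroute`, and `nslookup` installed, and the probe count of 4 is arbitrary.

```python
import platform


def diagnostic_commands(host, system=None):
    """Return argv lists for basic diagnostics on the given OS (default: this one)."""
    system = system or platform.system()
    if system == "Windows":
        return [
            ["ipconfig", "/all"],
            ["ping", "-n", "4", host],
            ["tracert", host],
            ["nslookup", host],
        ]
    # Assumption: anything else is treated as Linux.
    return [
        ["ip", "addr"],
        ["ping", "-c", "4", host],
        ["traceroute", host],
        ["nslookup", host],
    ]
```

Each list can be passed to `subprocess.run(cmd, capture_output=True, text=True)` so the output is saved with the ticket.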

Traffic analysis reveals root causes. Users will complain that "the network is slow", but traffic analysis may show that a file server is generating excessive multicast traffic or that someone is saturating your ISP connection. Alert history provides timeline context: what failed first? Did latency increase before packet loss appeared?

Distributed environments often require remote probes to monitor each location independently. When troubleshooting multi-site issues, you can see which locations are affected and how network performance differs across segments. This is valuable for diagnosing WAN problems where each site has its own internet connection from a different ISP or service provider.

Advanced Techniques Worth Knowing

Packet capture supplements flow data for deep protocol analysis showing TCP sequence numbers, retransmissions, and protocol-specific errors.

SNMP traps provide event-driven alerts when network devices detect problems themselves. A switch detecting a link failure will send an SNMP trap immediately rather than waiting for your next polling interval.

Synthetic monitoring actively tests network paths and service functionality. Periodically query DNS servers to monitor response times. Monitor VPN connectivity from each remote site. These active tests identify service problems before users report them.

Common Questions

How do I identify the root cause when multiple components fail simultaneously?

Check dependencies and timing. In a cascade failure something always fails first. Alert timestamps tell you the sequence. If your edge router fails and DNS, web services, and VPN all become unreachable, the router is the root cause. Configure monitoring dependencies to suppress child alerts when parent devices are down.
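The dependency logic can be sketched in a few lines. This example assumes you maintain a mapping from each device to the single parent it depends on. The device names are made up, and real dependency graphs often have multiple parents per device.

```python
def root_causes(down, parent_of):
    """Return the down devices whose parent is still up, or that have no parent."""
    return sorted(d for d in down if parent_of.get(d) not in down)


# Hypothetical dependency map: child -> parent.
parent_of = {
    "dns": "edge-router",
    "web": "edge-router",
    "vpn": "edge-router",
    "edge-router": None,
}
print(root_causes({"edge-router", "dns", "web", "vpn"}, parent_of))
```

Everything not returned is a child alert that can be suppressed until the parent recovers. When two unrelated devices come back as candidates, alert timestamps are the tie-breaker.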

What's the best way to diagnose asymmetric routing problems?

Test paths in both directions. Trace routes from source to destination and back. Compare latency, path, and packet loss in each direction. Monitoring tools that can test from multiple probe locations help identify asymmetric issues.
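Comparing the two traces can also be done programmatically. The sketch below assumes each hop has already been mapped to a router name, because raw traceroute output usually shows a different interface address for the same router depending on direction. The router names are placeholders.

```python
def is_symmetric(forward_hops, reverse_hops):
    """True if the reverse path visits the same routers in the opposite order."""
    return list(forward_hops) == list(reversed(reverse_hops))


def path_differences(forward_hops, reverse_hops):
    """Routers seen in only one direction, as (forward_only, reverse_only)."""
    fwd, rev = set(forward_hops), set(reverse_hops)
    return sorted(fwd - rev), sorted(rev - fwd)


forward = ["r1", "r2", "r3"]   # source -> destination
reverse = ["r3", "r4", "r1"]   # destination -> source
print(is_symmetric(forward, reverse))
print(path_differences(forward, reverse))
```

The routers that appear in only one direction are where to look for differing firewall state, latency, or packet loss.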

How can I tell if performance problems are network-related or application-related?

Isolate the layers with command line tools. If ping latency is low and packet loss is zero, the network layer is probably fine. If application performance is poor despite good network metrics, the problem is likely application-side. If network metrics show high latency or packet loss, you've identified the layer to investigate.
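That decision rule is simple enough to write down. In this sketch all three thresholds are placeholder values chosen for illustration. Sensible limits depend on your own baselines and on the application in question.

```python
def likely_layer(ping_ms, loss_pct, app_ms,
                 max_ping_ms=50.0, max_loss_pct=1.0, max_app_ms=1000.0):
    """Rough first guess at where a slowdown lives, based on three measurements."""
    if ping_ms > max_ping_ms or loss_pct > max_loss_pct:
        return "network"
    if app_ms > max_app_ms:
        return "application"
    return "no problem detected"


print(likely_layer(ping_ms=4.0, loss_pct=0.0, app_ms=4200.0))
print(likely_layer(ping_ms=180.0, loss_pct=3.0, app_ms=4200.0))
```

The result is a starting point for investigation, not a verdict. A clean ping does not rule out problems that only affect specific ports or larger packets.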

Should I reboot network devices as a first troubleshooting step?

Only after gathering diagnostic data. Rebooting a device clears error counters, logs, and active state information. First collect interface statistics, error logs, and configuration data. Then reboot the device only if the data points to memory leaks, CPU exhaustion, or hung processes.

Make Troubleshooting Easier

Network troubleshooting will always require domain expertise and logical, systematic thinking. But the right monitoring data turns educated guessing into data-driven diagnosis.

Historical baselines, traffic analysis, and correlated event data dramatically reduce MTTR. You spend less time chasing symptoms and more time fixing root causes.

PRTG Network Monitor provides comprehensive network visibility with flow analysis, SNMP monitoring, IPv4 and IPv6 support, and custom sensors for your specific environment. Start a free 30-day trial with full feature access and unlimited sensors, and see how better monitoring data can improve your troubleshooting process.

Published by
