As a sysadmin, one of your greatest assets (that gets even better the more experience you have) is the ability to follow your hunches. Sometimes it’s a gut feeling about where a problem might lie. Or maybe it’s an idea for a solution that you can’t quite justify, but you just feel will work. In this sysadmin story, a hunch led to finding the root cause of a critical error that threatened to cost a construction materials company a lot of money.

You know how it goes when a problem happens: the blame game starts. If the cause of the problem is unclear, each department will try to shift the blame to another. Maybe the IT team believes the vendors’ software is the problem, while the vendors believe that the problem lies in the operating system’s configuration. Until there is hard, indisputable evidence about what is causing the issue, there can be a lot of time-consuming back and forth between teams.

This was exactly the case for Matija, who worked as a sysadmin for a company producing construction materials. And in the end, a hunch helped Matija to not only stop the finger pointing, but also to come up with a workaround for the problem. But before we get into that, let’s take a step back in the story and understand what the problem was.

Automated loading processes

The company Matija worked for produced cement, and they had an automated loading process to load trucks up with cement. The area consisted of four lanes, so four trucks could be loaded at the same time.

How it worked: trucks come into the loading area, park in the designated zone, and the drivers place an RFID card into a reader. The automation process then starts and cement is loaded onto the truck. Scales underneath the truck measure how much of the product has been loaded, and when the target weight is reached, the loading stops. The loading time is approximately 15 minutes, so every 15 minutes, a truck is loaded with a few thousand euros’ worth of cement. The automation process controlling the loading of the trucks was central to keeping things running smoothly – and any issues could have costly results.

The problem

To keep up to date with the newest technologies, the company had recently updated the legacy hardware and software that the loading process ran on. The project involved setting up new servers and new PLC and SCADA controllers and then connecting everything together. While external vendors were responsible for the new software, provisioning the new hardware and operating system was the responsibility of the IT department.

However, soon after the upgrade, the new automated loading system started to slow down. It gradually became slower and slower until it ground to a halt. After restarting the server, the problem seemed to be resolved. But this is when Matija had his first hunch: he believed the problem would occur again.

The blame game and finding the root cause

Matija spoke to the vendors who provided the software to describe the problem to them. As is often the case with these things, the conversation went back and forth. The response from the vendors responsible for the software was that perhaps it was an operating system configuration issue, or maybe an antivirus software scanning issue. Maybe it was the Security Information and Event Management system Matija had implemented causing the slowdowns and delays?

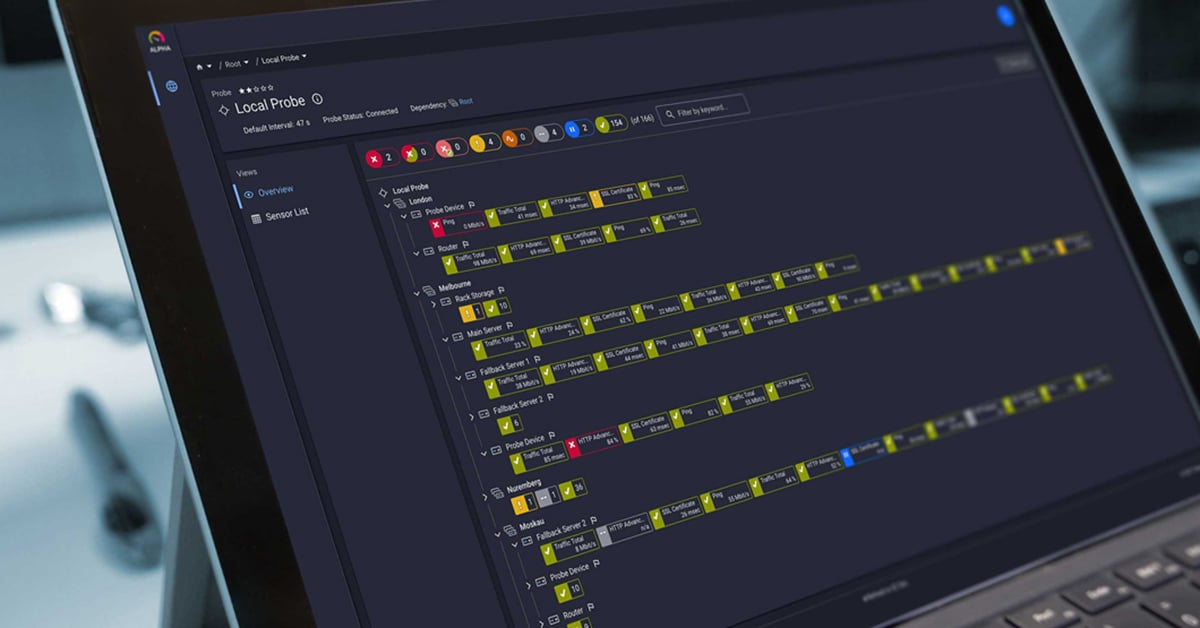

At this point, Matija had the second of his hunches: he didn’t think that anything mentioned by the software vendors was causing the problem. So he decided to use Paessler PRTG monitoring software to watch the system. He first identified the processes running on the Windows Server that were related to the automated loading system, and then he added sensors in PRTG to monitor them.

And so, when the problem reoccurred (just as Matija thought it would), he was able to check the performance counters. What he found was that the software solution was accumulating huge numbers of handles that were not being released. This handle leak steadily consumed system resources and eventually resulted in the system’s slowdown.
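To illustrate what a steadily climbing handle count looks like in the data, here is a minimal sketch in Python. It is not how PRTG works internally and not what Matija ran. The function names, the sample readings, and the 50-handles-per-hour cutoff are all assumptions made up for this example. It simply fits a straight line through periodic handle-count readings and flags sustained growth.

```python
def handle_growth_per_hour(samples):
    """Return the least-squares slope of handle count over time.

    samples: list of (hours_since_start, handle_count) tuples.
    The result is the average growth in handles per hour.
    """
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_t = sum(t for t, _ in samples) / n
    mean_h = sum(h for _, h in samples) / n
    cov = sum((t - mean_t) * (h - mean_h) for t, h in samples)
    var = sum((t - mean_t) ** 2 for t, _ in samples)
    if var == 0:
        raise ValueError("samples must cover more than one point in time")
    return cov / var


def looks_like_leak(samples, min_growth_per_hour=50.0):
    """Flag sustained growth. The default cutoff is an arbitrary assumption."""
    return handle_growth_per_hour(samples) >= min_growth_per_hour


# Hypothetical hourly readings, invented for illustration only.
leaking = [(0, 1200), (1, 1700), (2, 2200), (3, 2700)]
steady = [(0, 1200), (1, 1250), (2, 1180), (3, 1210)]

print(looks_like_leak(leaking))  # growth of 500 handles per hour
print(looks_like_leak(steady))   # count fluctuates around the same level
```

A healthy process opens and closes handles all the time, so the count fluctuates around a stable level. A count that only ever goes up, as in the first series, is the pattern to look for.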

The workaround

With the knowledge that the vendor’s software was responsible for the build-up of handles, Matija could go back to the vendor to investigate more. As it turned out, the problem was not with the vendor’s code itself, but rather with a third-party component that the vendor used to implement the system. It was up to the vendor’s partner to patch their component – but in the meantime, Matija had to find a workaround for the issue. A server reboot every weekend would clear the handles and avoid costly downtime. Then during the week, PRTG could make sure an unexpected build-up of handles didn’t occur.
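The idea of watching for an unexpected build-up between reboots can be sketched as a simple limit check. This is an illustrative Python sketch only: the warning and error limits, the function names, and the numbers are hypothetical, and in practice the limits would be configured in the monitoring tool rather than in a script.

```python
def classify_handle_count(count, warning_limit=10_000, error_limit=20_000):
    """Map a handle count to a status. Both limits are made-up defaults."""
    if count >= error_limit:
        return "error"
    if count >= warning_limit:
        return "warning"
    return "ok"


def hours_until_limit(count, growth_per_hour, limit):
    """Estimate the hours left before the count reaches the limit.

    Returns 0.0 if the limit is already reached, and None if the
    count is not growing (so the limit would never be reached).
    """
    if count >= limit:
        return 0.0
    if growth_per_hour <= 0:
        return None
    return (limit - count) / growth_per_hour


# Hypothetical mid-week reading: 12,000 handles, growing by 500 per hour.
print(classify_handle_count(12_000))
print(hours_until_limit(12_000, 500, 20_000))
```

With a check like this, a warning during the week means the build-up is running ahead of schedule and the server may need attention before the planned weekend reboot.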

Follow your hunches

As Matija himself says: “Follow your hunches, no matter how strange they seem at the time, and no matter how many people around you try to convince you otherwise.” Who knows: if he hadn’t monitored the Windows Server processes and discovered the handles problem, maybe the arguments with the vendor would still be going on? What we do know for sure is that a hunch and some monitoring saved everyone time and money.

Have you been in a situation where you followed a hunch? We’d love to hear your stories – and even feature you in a future sysadmin story video and blog post! Let us know in the comments below!

Published by
