At the Business of Software conference in Boston in October I hosted a workshop with the title "What do people do to keep their business online?" In this workshop I introduced the basic foundations of how we at Paessler run our business IT and what we do to make it failure tolerant. Those precautions are effectively what keeps our business online even as failures occur. And they will!

In this series of blog articles I will share with you six steps that will help make your business failure tolerant. See also: The Complete Series

Step 3: Set Up Redundancy and Auto-Healing for Your IT Infrastructure

Once you have made sure that your website is permanently online, you should also provide redundant systems for your office and back office IT.

Have Spare Parts Ready

For years we have been using n+1 redundancy on all our IT operations. n+1 means that we always purchase and run each piece of hardware at least twice. If we need one server to do something, we always put the same server right next to it so we are able to switch at any time.

This may sound crazy at first glance, but it has many benefits:

- You don't have to wait for spare parts or on-site service if something breaks. You just take the spare part from the second server or you move the service to the other server.

- You can use the redundant server for non-mission critical stuff, e.g. we use many of them for software testing.

- It may actually save you money: We have all our servers covered by DELL's on-site service. Because we already have the time-critical redundancy on-site, we can settle for the much cheaper next-business-day service from DELL instead of the 4-hour on-site service.

This makes even more sense when you combine this approach with virtualization, which we use heavily at Paessler. For our daily operations we run several VMware clusters. Each set of hypervisors is always designed to allow for one full hypervisor node to go down without affecting our business at all. Software upgrades of the hypervisors cause no downtime either.
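The "one full node may go down" rule can be checked with simple arithmetic. The following is a minimal sketch, assuming memory is the limiting resource and looking only at total capacity (it ignores how individual virtual machines pack onto nodes). The function name and the example figures are hypothetical and do not describe our actual clusters.

```python
def survives_one_node_failure(node_capacity_gb, vm_demand_gb):
    """Return True if all VMs still fit after the largest node is lost.

    node_capacity_gb: usable memory per hypervisor node (hypothetical figures)
    vm_demand_gb: memory needed per virtual machine (hypothetical figures)
    """
    if len(node_capacity_gb) < 2:
        # A single node has no spare to fail over to.
        return False
    # Worst case: the node with the most capacity is the one that fails.
    remaining = sum(node_capacity_gb) - max(node_capacity_gb)
    return sum(vm_demand_gb) <= remaining


# Three nodes with 256 GB each, five VMs needing 100 GB each
print(survives_one_node_failure([256, 256, 256], [100] * 5))
# Two nodes with 256 GB each, three VMs needing 100 GB each
print(survives_one_node_failure([256, 256], [100] * 3))
```

Running such a check whenever new virtual machines are added is one way to notice early that a cluster has quietly grown past its n+1 headroom.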

Redundancy and Auto-Healing for our Back Office IT

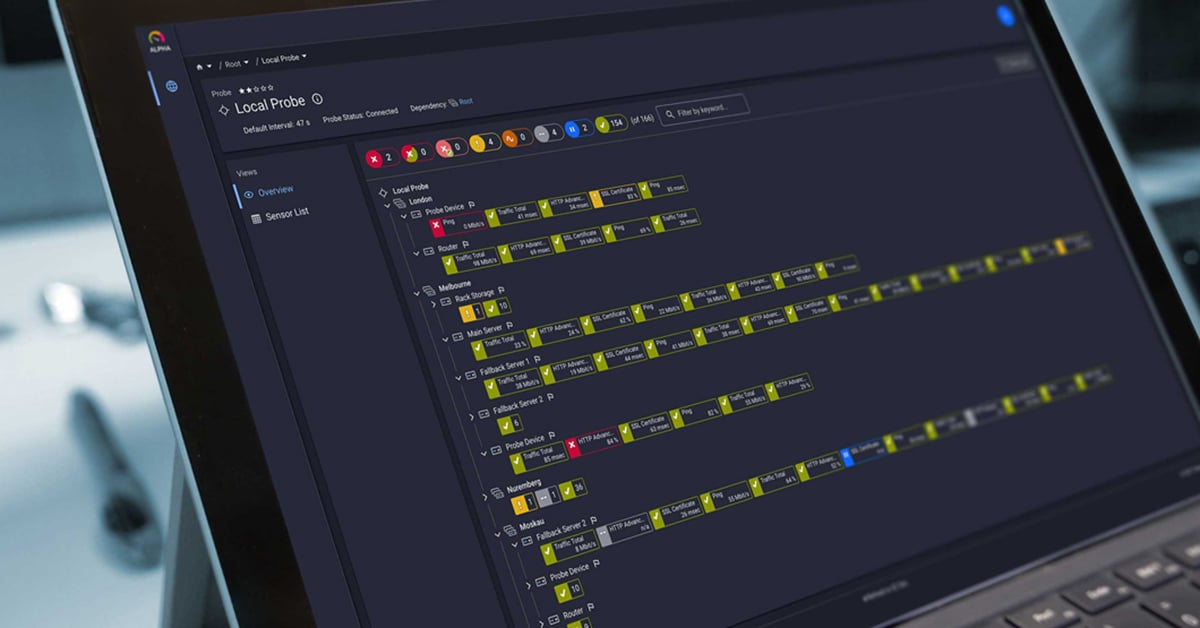

Our office is based in Nuremberg, Germany (see the blog post "Paessler is Moving-Again!"). Inside our office we have a data center with two racks of mostly non-critical software testing systems and our VoIP phone system along with one of our domain controllers. The rest of our IT, especially the mission critical systems, is packed into colocation racks in a professional data center.

Again we have applied redundancy in many ways:

- Our office has four Internet connections: our main 1 Gbit/s fiber optic data line and two DSL lines from different providers as backups. The fourth is a 3G router that could still connect the office wirelessly to the Internet if all other data lines are lost. A fifth option is to send all employees home and let them work from their home offices using our VPN.

- We have two ASA firewalls which connect us to the outside world and they run in "HA" mode (High Availability).

- All important servers that must be run on bare metal (e.g. 2 of our 4 domain controllers) are purchased in n+1 fashion.

- Everything else (everything!) is running on VMware servers. We have more than 100 virtual machines running in our office. About 1/3 of them are used for our business; the rest are the test bed for our monitoring software.

- We have two VMware clusters with 3 or more hypervisor nodes, and each cluster has its own SAN (one from NetApp and one from DELL). This is the most costly part; SANs always seem to start in the five digit range. But if you break it down to a usage time of 5 years, the total monthly cost for the hardware of such a cluster is around US$900/month. If that is still too much, you can also run with attached storage. This means that the VMware servers store the data on their local disks, not on the SAN, which saves you US$600/month, but you sacrifice the VMotion feature (quickly moving virtual machines between hypervisors without shutting the virtual machine down).
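The monthly figures in the last item are a straight-line breakdown of the purchase price over the usage time. Here is a minimal sketch of that arithmetic; the totals it prints are back-calculated from the US$900 and US$600 monthly figures above and are not quoted prices. It ignores power, licenses, and maintenance, and the function names are hypothetical.

```python
def monthly_cost(purchase_price, years):
    """Spread a one-time hardware purchase evenly over its usage time."""
    return purchase_price / (years * 12)


def implied_purchase_price(monthly, years):
    """Reverse calculation: the total spend that a monthly figure implies."""
    return monthly * years * 12


USAGE_YEARS = 5

# Total hardware spend implied by US$900/month over 5 years
cluster_total = implied_purchase_price(900, USAGE_YEARS)
# Share of that spend implied by the US$600/month saved without a SAN
san_share = implied_purchase_price(600, USAGE_YEARS)

print(cluster_total)                             # 54000
print(san_share)                                 # 36000
print(monthly_cost(cluster_total, USAGE_YEARS))  # 900.0
```

Looking at the cost per month rather than at the invoice total makes it easier to compare the cluster against what an hour of downtime would cost your business.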

Since all servers are virtualized, the Auto-Healing is effectively performed by VMotion. If one of the hypervisors crashes, all its virtual machines are moved to the remaining ones.
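Conceptually, this kind of auto-healing means finding a new home on the surviving nodes for every virtual machine of the failed one. The following toy model illustrates the idea only. It is not VMware's placement logic, and the data layout, function name, and sizes are hypothetical.

```python
def heal(hosts, failed):
    """Re-place the VMs of a failed host on the surviving hosts.

    hosts: {host_name: {"capacity": int, "vms": {vm_name: size}}}
    Returns (survivors, unplaced), where unplaced lists VMs that did not fit.
    The input dictionary is left unchanged.
    """
    orphans = hosts[failed]["vms"]
    # Copy the surviving hosts so the caller's data is not modified.
    survivors = {
        name: {"capacity": data["capacity"], "vms": dict(data["vms"])}
        for name, data in hosts.items()
        if name != failed
    }
    if not survivors:
        return survivors, list(orphans)

    def free(name):
        host = survivors[name]
        return host["capacity"] - sum(host["vms"].values())

    unplaced = []
    # Place the largest VMs first, each on the host with the most free capacity.
    for vm, size in sorted(orphans.items(), key=lambda item: -item[1]):
        target = max(survivors, key=free)
        if free(target) >= size:
            survivors[target]["vms"][vm] = size
        else:
            unplaced.append(vm)
    return survivors, unplaced


cluster = {
    "node-a": {"capacity": 10, "vms": {"mail": 4, "wiki": 3}},
    "node-b": {"capacity": 10, "vms": {"crm": 5}},
    "node-c": {"capacity": 10, "vms": {}},
}
survivors, unplaced = heal(cluster, "node-a")
print(survivors["node-c"]["vms"], unplaced)
```

A non-empty `unplaced` list is exactly the situation that the n+1 design is meant to rule out.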

Here at Paessler most employees are not actually working on their personal PCs any more. When they log in in the morning they start a Remote Desktop session and connect to one of our Terminal Servers (which are again virtualized servers on one of our VMware clusters). They are effectively working on the terminal servers and do not store any data on their desktops. Most desktops are thin clients with Atom CPUs (which keeps down energy usage, too). And still everybody at Paessler has two 24-inch screens on their desk for a comfortable work environment. Even most of our developers are using code editors/compilers/IDEs on virtualized machines plus a second VM as a permanent test system.

So the data we work with is always stored on our highly redundant hardware in a professional data center, and we don't even bother with backups of the desktop PCs. If a desktop PC breaks we call DELL to fix it on the next business day. The employee simply moves over to another desk, reopens the previous remote desktop session, and keeps on working without losing anything. A nice side effect is that we also have the same work environment when we log into our VPN from our home offices.

In essence the Auto-Healing for office PCs means that you move over to a free desk in the office to continue working.

The next blog post will give insight into good backup solutions and disaster recovery plans.

The Complete Series

At Paessler we have been selling software online for 15 years and we have had hardware, software, and network failures just like everybody else. We tried to learn from each one of them and we tried to change our setup so that the same failure would never happen again.

Read the other posts of this series:

- Step 1: Choose a Reliable Hosting Provider

- Step 2: Set Up Redundancy and Auto-Healing for Your Website

- Step 3: Set Up Redundancy and Auto-Healing for Your IT Infrastructure

- Step 4: Have Backup and Disaster Recovery Plans

- Step 5: Monitor Your Network

- Step 6: Know Your Costs

Published by